Criss-cross algorithm

This article is about an algorithm for mathematical optimization. For the naming of chemicals, see crisscross method.

In mathematical optimization, the criss-cross algorithm denotes a family of algorithms for linear programming. Variants of the criss-cross algorithm also solve more general problems with linear inequality constraints and nonlinear objective functions; there are criss-cross algorithms for linear-fractional programming problems,[1][2] quadratic-programming problems, and linear complementarity problems.[3]

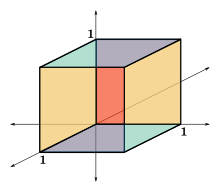

Like the simplex algorithm of George B. Dantzig, the criss-cross algorithm is not a polynomial-time algorithm for linear programming. Both algorithms visit all 2^D corners of a (perturbed) cube in dimension D, the Klee–Minty cube (after Victor Klee and George J. Minty), in the worst case.[4][5] However, when it is started at a random corner, the criss-cross algorithm on average visits only D additional corners.[6][7][8] Thus, for the three-dimensional cube, the algorithm visits all 8 corners in the worst case and exactly 3 additional corners on average.

History

The criss-cross algorithm was published independently by Tamás Terlaky[9] and by Zhe-Min Wang;[10] related algorithms appeared in unpublished reports by other authors.[3]

Comparison with the simplex algorithm for linear optimization

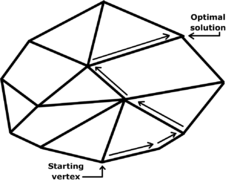

In its second phase, the simplex algorithm crawls along the edges of the polytope until it finally reaches an optimum vertex. The criss-cross algorithm considers bases that are not associated with vertices, so that some iterates can be in the interior of the feasible region, like interior-point algorithms; the criss-cross algorithm can also have infeasible iterates outside the feasible region.

In linear programming, the criss-cross algorithm pivots between a sequence of bases but differs from the simplex algorithm of George Dantzig. The simplex algorithm first finds a (primal-) feasible basis by solving a "phase-one problem"; in "phase two", the simplex algorithm pivots between a sequence of basic feasible solutions so that the objective function is non-decreasing with each pivot, terminating with an optimal solution (and, at that point, also a "dual feasible" solution).[3][11]

The criss-cross algorithm is simpler than the simplex algorithm, because the criss-cross algorithm has only one phase. Its pivoting rules are similar to the least-index pivoting rule of Bland.[12] Bland's rule uses only signs of coefficients rather than their (real-number) order when deciding eligible pivots. Bland's rule selects an entering variable by comparing values of reduced costs, using the real-number ordering of the eligible pivots.[12][13] Unlike Bland's rule, the criss-cross algorithm is "purely combinatorial", selecting an entering variable and a leaving variable by considering only the signs of coefficients rather than their real-number ordering.[3][11] The criss-cross algorithm has been applied to furnish constructive proofs of basic results in real linear algebra, such as the lemma of Farkas.[14]

Description

The criss-cross algorithm has been specified in many publications and implemented in many programming languages.
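As a minimal sketch of what such a specification can look like, the following Python code applies a least-index criss-cross pivot rule to a problem of the form "maximize c·x subject to Ax ≤ b, x ≥ 0". The tableau layout, the names criss_cross and pivot, and the status strings are illustrative assumptions rather than anything taken from the cited publications. Exact rational arithmetic is used so that only the signs of the entries drive the pivot choices.

```python
from fractions import Fraction


def pivot(T, r, col):
    """Turn column `col` into a unit column with its 1 in row `r`."""
    p = T[r][col]
    T[r] = [v / p for v in T[r]]
    for i in range(len(T)):
        if i != r and T[i][col] != 0:
            f = T[i][col]
            T[i] = [a - f * b for a, b in zip(T[i], T[r])]


def criss_cross(A, b, c):
    """Sketch: maximize c.x subject to A x <= b, x >= 0 (hypothetical helper)."""
    m, n = len(A), len(c)
    # Rows 0..m-1: [A | I | b]; row m: reduced costs [-c | 0 | objective value].
    T = [[Fraction(v) for v in A[i]]
         + [Fraction(int(i == k)) for k in range(m)]
         + [Fraction(b[i])] for i in range(m)]
    T.append([-Fraction(v) for v in c] + [Fraction(0)] * (m + 1))
    basis = [n + i for i in range(m)]  # start from the slack basis, feasible or not

    while True:
        row_of = {var: r for r, var in enumerate(basis)}
        # Smallest-index variable that is primal infeasible (basic, negative value)
        # or dual infeasible (nonbasic, negative reduced cost).
        pick = None
        for k in range(n + m):
            if k in row_of:
                if T[row_of[k]][-1] < 0:
                    pick = k
                    break
            elif T[m][k] < 0:
                pick = k
                break
        if pick is None:
            x = [Fraction(0)] * (n + m)
            for r, var in enumerate(basis):
                x[var] = T[r][-1]
            return "optimal", x[:n], T[m][-1]

        if pick in row_of:
            # Primal-infeasible row: enter the smallest-index nonbasic
            # variable with a negative entry in that row.
            r = row_of[pick]
            col = next((j for j in range(n + m)
                        if j not in row_of and T[r][j] < 0), None)
            if col is None:
                return "infeasible", None, None
        else:
            # Dual-infeasible column: leave the smallest-index basic
            # variable with a positive entry in that column.
            col = pick
            candidates = [var for var in basis if T[row_of[var]][col] > 0]
            if not candidates:
                return "dual_infeasible", None, None
            r = row_of[min(candidates)]

        pivot(T, r, col)
        basis[r] = col
```

For instance, criss_cross([[1, 2], [3, 1]], [4, 6], [1, 1]) passes through a basis with a negative slack value before reaching the optimum, which illustrates that the iterates need not stay feasible. Note that no ratio test appears anywhere: every choice depends only on signs and indices.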

Example

Examples showing the criss-cross algorithm on a problem-instance have been published.

Computational complexity: Worst and average cases

The time complexity of an algorithm counts the number of arithmetic operations sufficient for the algorithm to solve the problem. For example, Gaussian elimination requires on the order of D^3 operations, and so it is said to have polynomial time-complexity, because its complexity is bounded by a cubic polynomial. There are examples of algorithms that do not have polynomial-time complexity. For example, a generalization of Gaussian elimination called Buchberger's algorithm has for its complexity an exponential function of the problem data (the degree of the polynomials and the number of variables of the multivariate polynomials). Because exponential functions eventually grow much faster than polynomial functions, an exponential complexity implies that an algorithm has slow performance on large problems.
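The cubic bound for Gaussian elimination can be checked on small cases by counting operations directly. The sketch below is an illustration only: the function name elimination_op_count is hypothetical, and the Hilbert matrix is chosen as test input simply because its pivots are never zero, so no row exchanges are needed.

```python
from fractions import Fraction


def elimination_op_count(D):
    """Forward-eliminate a D x D Hilbert matrix and count arithmetic operations."""
    A = [[Fraction(1, i + j + 1) for j in range(D)] for i in range(D)]
    ops = 0
    for k in range(D):
        for i in range(k + 1, D):
            factor = A[i][k] / A[k][k]        # one division
            ops += 1
            for j in range(k, D):
                A[i][j] -= factor * A[k][j]   # one multiplication, one subtraction
                ops += 2
    return ops


# Compare the polynomial count with an exponential quantity such as 2^D.
for D in (5, 10, 20):
    print(D, elimination_op_count(D), 2 ** D)
```

The count stays below D^3 for every D, whereas 2^D overtakes it quickly as D grows.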

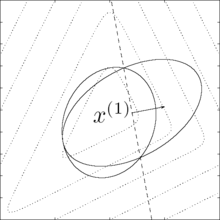

Several algorithms for linear programming—Khachiyan's ellipsoidal algorithm, Karmarkar's projective algorithm, and central-path algorithms—have polynomial time-complexity (in the worst case and thus on average). The ellipsoidal and projective algorithms were published before the criss-cross algorithm.

However, like the simplex algorithm of Dantzig, the criss-cross algorithm is not a polynomial-time algorithm for linear programming. Terlaky's criss-cross algorithm visits all the 2^D corners of a (perturbed) cube in dimension D, according to a paper of Roos; Roos's paper modifies the Klee–Minty construction of a cube on which the simplex algorithm takes 2^D steps.[3][4][5] Like the simplex algorithm, the criss-cross algorithm visits all 8 corners of the three-dimensional cube in the worst case.
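To make the "2^D corners" count concrete, the following sketch enumerates the corners of a commonly stated form of the Klee–Minty cube: maximize 2^(D-1) x_1 + ... + 2 x_(D-1) + x_D subject to x_1 ≤ 5, 4 x_1 + x_2 ≤ 25, 8 x_1 + 4 x_2 + x_3 ≤ 125, and so on, with x ≥ 0. This is an assumption about which formulation to illustrate; it is not Roos's modified construction, and the code only lists corners rather than running either algorithm. The function names are hypothetical.

```python
from itertools import product


def klee_minty_vertices(D):
    """List the corners of the classic Klee-Minty cube in dimension D.

    Constraint i (i = 1..D): sum_{j<i} 2^(i-j+1) x_j + x_i <= 5^i, with x >= 0.
    At each corner, coordinate i is either 0 or makes constraint i tight.
    """
    vertices = []
    for choice in product((False, True), repeat=D):
        x = []
        for i in range(1, D + 1):
            used = sum(2 ** (i - j + 1) * x[j - 1] for j in range(1, i))
            room = 5 ** i - used          # largest value x_i may take
            x.append(room if choice[i - 1] else 0)
        vertices.append(tuple(x))
    return vertices


def objective(x):
    """Objective 2^(D-1) x_1 + ... + 2 x_(D-1) + x_D."""
    D = len(x)
    return sum(2 ** (D - j) * x[j - 1] for j in range(1, D + 1))


corners = klee_minty_vertices(3)
best = max(corners, key=objective)
```

For D = 3 this yields 8 distinct corners, and the best one is (0, 0, 125) with objective value 125; a pivoting method that is led through every corner before reaching it takes exponentially many steps in D.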

When it is initialized at a random corner of the cube, however, the criss-cross algorithm visits only D additional corners on average, according to a 1994 paper by Fukuda and Namiki.[6][7] Trivially, the simplex algorithm takes on average D steps for a cube.[8][15] Like the simplex algorithm, the criss-cross algorithm visits exactly 3 additional corners of the three-dimensional cube on average.

Variants

The criss-cross algorithm has been extended to solve more general problems than linear programming problems.

Other optimization problems with linear constraints

There are variants of the criss-cross algorithm for linear programming, for quadratic programming, and for the linear-complementarity problem with "sufficient matrices";[3][6][16][17][18][19] conversely, for linear complementarity problems, the criss-cross algorithm terminates finitely only if the matrix is a sufficient matrix.[18][19] A sufficient matrix is a generalization both of a positive-definite matrix and of a P-matrix, whose principal minors are each positive.[18][19][20] The criss-cross algorithm has been adapted also for linear-fractional programming.[1][2]
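The P-matrix condition mentioned above (every principal minor positive) can be tested directly for small matrices. The sketch below checks only that condition; it does not test the more general sufficient-matrix property, and the function names det and is_p_matrix are hypothetical. The brute-force enumeration of all principal submatrices is exponential in the matrix size, so it is meant for illustration only.

```python
from fractions import Fraction
from itertools import combinations


def det(M):
    """Determinant by Laplace expansion along the first row (small matrices only)."""
    if not M:
        return Fraction(1)
    total = Fraction(0)
    for j, a in enumerate(M[0]):
        minor = [row[:j] + row[j + 1:] for row in M[1:]]
        total += (-1) ** j * Fraction(a) * det(minor)
    return total


def is_p_matrix(M):
    """Return True if every principal minor of the square matrix M is positive."""
    n = len(M)
    for k in range(1, n + 1):
        for idx in combinations(range(n), k):
            sub = [[M[i][j] for j in idx] for i in idx]
            if det(sub) <= 0:
                return False
    return True
```

For example, is_p_matrix([[2, 1], [1, 2]]) holds, while [[1, 2], [2, 1]] fails because its determinant is negative.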

Vertex enumeration

The criss-cross algorithm was used in an algorithm for enumerating all the vertices of a polytope, which was published by David Avis and Komei Fukuda in 1992.[21] Avis and Fukuda presented an algorithm which finds the v vertices of a polyhedron defined by a nondegenerate system of n linear inequalities in D dimensions (or, dually, the v facets of the convex hull of n points in D dimensions, where each facet contains exactly D given points) in time O(nDv) and O(nD) space.[22]

Oriented matroids

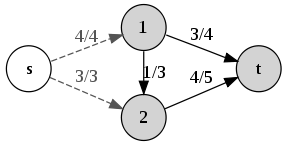

The max-flow min-cut theorem states that the maximum flow through a network is exactly the capacity of its minimum cut. This theorem can be proved using the criss-cross algorithm for oriented matroids.

The criss-cross algorithm is often studied using the theory of oriented matroids (OMs), which is a combinatorial abstraction of linear-optimization theory.[17][23] Indeed, Bland's pivoting rule was based on his previous papers on oriented-matroid theory. However, Bland's rule exhibits cycling on some oriented-matroid linear-programming problems.[17] The first purely combinatorial algorithm for linear programming was devised by Michael J. Todd.[17][24] Todd's algorithm was developed not only for linear programming in the setting of oriented matroids, but also for quadratic-programming problems and linear-complementarity problems.[17][24] Unfortunately, Todd's algorithm is complicated even to state, and its finite-convergence proofs are somewhat complicated.[17]

The criss-cross algorithm and its proof of finite termination can be simply stated and readily extend to the setting of oriented matroids. The algorithm can be further simplified for linear feasibility problems, that is, for linear systems with nonnegative variables; these problems can be formulated for oriented matroids.[14] The criss-cross algorithm has been adapted for problems that are more complicated than linear programming: there are oriented-matroid variants also for the quadratic-programming problem and for the linear-complementarity problem.[3][16][17]

Summary

The criss-cross algorithm is a simply stated algorithm for linear programming. It was the second fully combinatorial algorithm for linear programming. The partially combinatorial simplex algorithm of Bland cycles on some (nonrealizable) oriented matroids. The first fully combinatorial algorithm was published by Todd, and it is also like the simplex algorithm in that it preserves feasibility after the first feasible basis is generated; however, Todd's rule is complicated. The criss-cross algorithm is not a simplex-like algorithm, because it need not maintain feasibility. The criss-cross algorithm does not have polynomial time-complexity, however.

Researchers have extended the criss-cross algorithm to many optimization problems, including linear-fractional programming. The criss-cross algorithm can solve quadratic programming problems and linear complementarity problems, even in the setting of oriented matroids. Even when generalized, the criss-cross algorithm remains simply stated.

See also

- Jack Edmonds (pioneer of combinatorial optimization and oriented-matroid theorist; doctoral advisor of Komei Fukuda)

Notes

- ^ a b Illés, Szirmai & Terlaky (1999)

- ^ a b Stancu-Minasian, I. M. (August 2006). "A sixth bibliography of fractional programming". Optimization 55 (4): 405–428. doi:10.1080/02331930600819613. MR2258634. http://www.informaworld.com/10.1080/02331930600819613.

- ^ a b c d e f g Fukuda & Terlaky (1997)

- ^ a b Roos (1990)

- ^ a b Klee, Victor; Minty, George J. (1972). "How good is the simplex algorithm?". In Shisha, Oved. Inequalities III (Proceedings of the Third Symposium on Inequalities held at the University of California, Los Angeles, Calif., September 1–9, 1969, dedicated to the memory of Theodore S. Motzkin). New York-London: Academic Press. pp. 159–175. MR332165.

- ^ a b c Fukuda & Terlaky (1997, p. 385)

- ^ a b Fukuda & Namiki (1994, p. 367)

- ^ a b The simplex algorithm takes on average D steps for a cube. Borgwardt (1987): Borgwardt, Karl-Heinz (1987). The simplex method: A probabilistic analysis. Algorithms and Combinatorics (Study and Research Texts). 1. Berlin: Springer-Verlag. pp. xii+268. ISBN 3-540-17096-0. MR868467.

- ^ Terlaky (1985) and Terlaky (1987)

- ^ Wang (1987)

- ^ a b Terlaky & Zhang (1993)

- ^ a b Bland, Robert G. (May 1977). "New finite pivoting rules for the simplex method". Mathematics of Operations Research 2 (2): 103–107. doi:10.1287/moor.2.2.103. JSTOR 3689647. MR459599.

- ^ Bland's rule is also related to an earlier least-index rule, which was proposed by Katta G. Murty for the linear complementarity problem, according to Fukuda & Namiki (1994).

- ^ a b Klafszky & Terlaky (1991)

- ^ More generally, for the simplex algorithm, the expected number of steps is proportional to D for linear-programming problems that are randomly drawn from the Euclidean unit sphere, as proved by Borgwardt and by Smale.

- ^ a b Fukuda & Namiki (1994)

- ^ a b c d e f g Björner, Anders; Las Vergnas, Michel; Sturmfels, Bernd; White, Neil; Ziegler, Günter (1999). "10 Linear programming". Oriented Matroids. Cambridge University Press. pp. 417–479. doi:10.1017/CBO9780511586507. ISBN 9780521777506. MR1744046. http://ebooks.cambridge.org/ebook.jsf?bid=CBO9780511586507.

- ^ a b c den Hertog, D.; Roos, C.; Terlaky, T. (1 July 1993). "The linear complementarity problem, sufficient matrices, and the criss-cross method" (pdf). Linear Algebra and its Applications 187: 1–14. doi:10.1016/0024-3795(93)90124-7. http://arno.uvt.nl/show.cgi?fid=72943.

- ^ a b c Csizmadia, Zsolt; Illés, Tibor (2006). "New criss-cross type algorithms for linear complementarity problems with sufficient matrices" (pdf). Optimization Methods and Software 21 (2): 247–266. doi:10.1080/10556780500095009. MR2195759. http://www.cs.elte.hu/opres/orr/download/ORR03_1.pdf.

- ^ Cottle, R. W.; Pang, J.-S.; Venkateswaran, V. (March–April 1989). "Sufficient matrices and the linear complementarity problem". Linear Algebra and its Applications 114–115: 231–249. doi:10.1016/0024-3795(89)90463-1. MR986877. http://www.sciencedirect.com/science/article/pii/0024379589904631.

- ^ Avis & Fukuda (1992, p. 297)

- ^ The v vertices in a simple arrangement of n hyperplanes in D dimensions can be found in O(n^2 D v) time and O(nD) space complexity.

- ^ The theory of oriented matroids was initiated by R. Tyrrell Rockafellar. (Rockafellar 1969):

Rockafellar, R. T. (1969). "The elementary vectors of a subspace of R^N (1967)". In R. C. Bose and T. A. Dowling. Combinatorial Mathematics and its Applications. The University of North Carolina Monograph Series in Probability and Statistics. Chapel Hill, North Carolina: University of North Carolina Press. pp. 104–127. MR278972. PDF reprint. http://www.math.washington.edu/~rtr/papers/rtr-ElemVectors.pdf.

Rockafellar was influenced by the earlier studies of Albert W. Tucker and George J. Minty. Tucker and Minty had studied the sign patterns of the matrices arising through the pivoting operations of Dantzig's simplex algorithm.

- ^ a b Todd, Michael J. (1985). "Linear and quadratic programming in oriented matroids". Journal of Combinatorial Theory. Series B 39 (2): 105–133. doi:10.1016/0095-8956(85)90042-5. MR811116.

References

- Avis, David; Fukuda, Komei (December 1992). "A pivoting algorithm for convex hulls and vertex enumeration of arrangements and polyhedra". Discrete and Computational Geometry 8 (ACM Symposium on Computational Geometry (North Conway, NH, 1991)): 295–313. doi:10.1007/BF02293050. MR1174359. http://www.springerlink.com/content/m7440v7p3440757u/.

- Csizmadia, Zsolt; Illés, Tibor (2006). "New criss-cross type algorithms for linear complementarity problems with sufficient matrices" (pdf). Optimization Methods and Software 21 (2): 247–266. doi:10.1080/10556780500095009. MR2195759. http://www.cs.elte.hu/opres/orr/download/ORR03_1.pdf.

- Fukuda, Komei; Namiki, Makoto (March 1994). "On extremal behaviors of Murty's least index method". Mathematical Programming 64 (1): 365–370. doi:10.1007/BF01582581. MR1286455.

- Fukuda, Komei; Terlaky, Tamás (1997). Liebling, Thomas M.; de Werra, Dominique. eds. "Criss-cross methods: A fresh view on pivot algorithms". Mathematical Programming: Series B (Amsterdam: North-Holland Publishing Co.) 79 (Papers from the 16th International Symposium on Mathematical Programming held in Lausanne, 1997): 369–395. doi:10.1016/S0025-5610(97)00062-2. MR1464775. Postscript preprint.

- den Hertog, D.; Roos, C.; Terlaky, T. (1 July 1993). "The linear complementarity problem, sufficient matrices, and the criss-cross method" (pdf). Linear Algebra and its Applications 187: 1–14. doi:10.1016/0024-3795(93)90124-7. MR1221693. http://arno.uvt.nl/show.cgi?fid=72943.

- Illés, Tibor; Szirmai, Ákos; Terlaky, Tamás (1999). "The finite criss-cross method for hyperbolic programming". European Journal of Operational Research 114 (1): 198–214. doi:10.1016/S0377-2217(98)00049-6. Zbl 0953.90055. Postscript preprint. http://www.sciencedirect.com/science/article/B6VCT-3W3DFHB-M/2/4b0e2fcfc2a71e8c14c61640b32e805a.

- Klafszky, Emil; Terlaky, Tamás (June 1991). "The role of pivoting in proving some fundamental theorems of linear algebra". Linear Algebra and its Applications 151: 97–118. doi:10.1016/0024-3795(91)90356-2. MR1102142. http://www.cas.mcmaster.ca/~terlaky/files/pivot-la.ps.

- Roos, C. (1990). "An exponential example for Terlaky's pivoting rule for the criss-cross simplex method". Mathematical Programming. Series A 46 (1). doi:10.1007/BF01585729. MR1045573.

- Terlaky, T. (1985). "A convergent criss-cross method". Optimization: A Journal of Mathematical Programming and Operations Research 16 (5): 683–690. doi:10.1080/02331938508843067. ISSN 0233-1934. MR798939.

- Terlaky, Tamás (1987). "A finite crisscross method for oriented matroids". Journal of Combinatorial Theory. Series B 42 (3): 319–327. doi:10.1016/0095-8956(87)90049-9. ISSN 0095-8956. MR888684.

- Terlaky, Tamás; Zhang, Shu Zhong (1993). "Pivot rules for linear programming: A Survey on recent theoretical developments". Annals of Operations Research (Springer Netherlands) 46–47 (Degeneracy in optimization problems): 203–233. doi:10.1007/BF02096264. ISSN 0254-5330. MR1260019. PDF file of (1991) preprint.

- Wang, Zhe Min (1987). "A finite conformal-elimination free algorithm over oriented matroid programming". Chinese Annals of Mathematics (Shuxue Niankan B Ji). Series B 8 (1): 120–125. ISSN 0252-9599. MR886756.

External links

- Komei Fukuda (ETH Zentrum, Zurich) with publications

- Tamás Terlaky (Lehigh University) with publications
