- Nonlinear conjugate gradient method


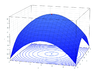

In numerical optimization, the nonlinear conjugate gradient method generalizes the conjugate gradient method to nonlinear optimization. For a quadratic function $f(x)$

$$f(x) = \|Ax - b\|^2,$$

the minimum of $f$ is obtained when the gradient is 0:

$$\nabla_x f = 2 A^\top (Ax - b) = 0.$$

Whereas linear conjugate gradient seeks a solution to the linear equation $A^\top A x = A^\top b$, the nonlinear conjugate gradient method is generally used to find the local minimum of a nonlinear function using its gradient $\nabla_x f$ alone. It works when the function is approximately quadratic near the minimum, which is the case when the function is twice differentiable at the minimum and the second derivative is non-singular there.
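The link between the zero-gradient condition and a linear equation can be made explicit with a short derivation. This is only a sketch: it assumes the quadratic has the least-squares form $f(x) = \|Ax - b\|^2$ for some matrix $A$ and vector $b$; other quadratic forms lead to an analogous linear system.

```latex
\begin{aligned}
f(x) &= (Ax - b)^\top (Ax - b) = x^\top A^\top A x - 2\, b^\top A x + b^\top b, \\
\nabla_x f &= 2 A^\top A x - 2 A^\top b = 2 A^\top (Ax - b), \\
\nabla_x f = 0 &\iff A^\top A x = A^\top b .
\end{aligned}
```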

Given a function $f(x)$ of N variables to minimize, its gradient $\nabla_x f$ indicates the direction of maximum increase. One simply starts in the opposite (steepest descent) direction:

$$\Delta x_0 = -\nabla_x f(x_0)$$

with an adjustable step length $\alpha$ and performs a line search in this direction until it reaches the minimum of $f$:

$$\alpha_0 := \arg\min_\alpha f(x_0 + \alpha \Delta x_0),$$

$$x_1 = x_0 + \alpha_0 \Delta x_0.$$

After this first iteration in the steepest direction $\Delta x_0$, the following steps constitute one iteration of moving along a subsequent conjugate direction $s_n$, where $s_0 = \Delta x_0$:

- Calculate the steepest direction: $\Delta x_n = -\nabla_x f(x_n)$,

- Compute $\beta_n$ according to one of the formulas below,

- Update the conjugate direction: $s_n = \Delta x_n + \beta_n s_{n-1}$,

- Perform a line search: optimize $\alpha_n = \arg\min_\alpha f(x_n + \alpha s_n)$,

- Update the position: $x_{n+1} = x_n + \alpha_n s_n$.

With a pure quadratic function the minimum is reached within N iterations (excepting roundoff error), but a non-quadratic function will make slower progress. Subsequent search directions lose conjugacy, so the search direction must be reset to the steepest descent direction at least every N iterations, or sooner if progress stops. However, resetting every iteration turns the method into steepest descent. The algorithm stops when it finds the minimum, determined when no progress is made after a direction reset (i.e. in the steepest descent direction), or when some tolerance criterion is reached.
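The iteration, the periodic reset, and a tolerance-based stopping rule can be put together in a short pure-Python sketch. Everything named here is illustrative rather than taken from the article: `nonlinear_cg`, `line_min` and the test function `f` are hypothetical, the line search is a simple bracket-then-golden-section routine that assumes the function is unimodal along the search direction, the $\beta$ formula used is the clamped Polak-Ribière variant, and the stopping test is a gradient-norm tolerance.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def line_min(phi, step=1.0, iters=60):
    """Approximate arg-min of phi(alpha) for alpha >= 0, assuming phi is unimodal."""
    hi = step
    for _ in range(60):                      # grow the bracket while phi still decreases
        if phi(2.0 * hi) >= phi(hi):
            break
        hi *= 2.0
    lo, hi = 0.0, 2.0 * hi
    r = (math.sqrt(5.0) - 1.0) / 2.0         # inverse golden ratio
    c, d = hi - r * (hi - lo), lo + r * (hi - lo)
    fc, fd = phi(c), phi(d)
    for _ in range(iters):                   # golden-section shrinking of [lo, hi]
        if fc < fd:
            hi, d, fd = d, c, fc
            c = hi - r * (hi - lo)
            fc = phi(c)
        else:
            lo, c, fc = c, d, fd
            d = lo + r * (hi - lo)
            fd = phi(d)
    return 0.5 * (lo + hi)

def nonlinear_cg(f, grad, x0, tol=1e-8, max_iter=1000):
    x = list(x0)
    n = len(x)
    dx = [-gi for gi in grad(x)]             # steepest direction, Delta x_0
    s = dx[:]                                # s_0 = Delta x_0
    for k in range(max_iter):
        if math.sqrt(dot(dx, dx)) < tol:     # tolerance criterion on the gradient norm
            break
        alpha = line_min(lambda a: f([xi + a * si for xi, si in zip(x, s)]))
        x = [xi + alpha * si for xi, si in zip(x, s)]
        dx_new = [-gi for gi in grad(x)]
        diff = [a - b for a, b in zip(dx_new, dx)]
        beta = max(0.0, dot(dx_new, diff) / dot(dx, dx))   # clamped Polak-Ribiere
        if (k + 1) % n == 0:                 # reset to steepest descent every N iterations
            beta = 0.0
        s = [d + beta * si for d, si in zip(dx_new, s)]
        if dot(s, dx_new) <= 0.0:            # safeguard: not a descent direction, so reset
            s = dx_new[:]
        dx = dx_new
    return x

# Hypothetical convex, non-quadratic test function with its minimum at (1, -2).
def f(p):
    x, y = p
    return (x - 1.0) ** 4 + (x - 1.0) ** 2 + 10.0 * (y + 2.0) ** 2

def grad_f(p):
    x, y = p
    return [4.0 * (x - 1.0) ** 3 + 2.0 * (x - 1.0), 20.0 * (y + 2.0)]

x_min = nonlinear_cg(f, grad_f, [3.0, 1.0])
print(x_min)
```

A production implementation would more likely use a line search satisfying the Wolfe conditions than the golden-section search assumed here; the sketch favours brevity over efficiency.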

Within a linear approximation, the parameters $\alpha$ and $\beta$ are the same as in the linear conjugate gradient method but have been obtained with line searches. The conjugate gradient method can follow narrow (ill-conditioned) valleys, where the steepest descent method slows down and follows a criss-cross pattern.
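The difference in a narrow valley can be illustrated on a small example. The sketch below is an assumption-laden toy, not part of the method's definition: it uses a hypothetical two-variable quadratic $f(x, y) = \tfrac12 (x^2 + \kappa y^2)$ with $\kappa = 100$, computes the line minimum in closed form (possible only because the function is quadratic), uses the Fletcher-Reeves $\beta$, and counts iterations until the gradient norm falls below a tolerance.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

KAPPA = 100.0  # condition number of the hypothetical test quadratic

def grad(p):
    # Gradient of f(x, y) = 0.5 * (x**2 + KAPPA * y**2)
    return [p[0], KAPPA * p[1]]

def hess_times(v):
    # Product of the (constant) Hessian diag(1, KAPPA) with a vector
    return [v[0], KAPPA * v[1]]

def count_iterations(use_conjugate, x0, tol=1e-6, max_iter=10000):
    x = list(x0)
    dx = [-gi for gi in grad(x)]             # steepest direction
    s = dx[:]                                # initial search direction
    for k in range(max_iter):
        if dot(dx, dx) ** 0.5 < tol:
            return k
        # Exact line minimum along s, valid for a quadratic only
        alpha = dot(dx, s) / dot(s, hess_times(s))
        x = [xi + alpha * si for xi, si in zip(x, s)]
        dx_new = [-gi for gi in grad(x)]
        # Fletcher-Reeves beta, or 0 to fall back to plain steepest descent
        beta = dot(dx_new, dx_new) / dot(dx, dx) if use_conjugate else 0.0
        s = [d + beta * si for d, si in zip(dx_new, s)]
        dx = dx_new
    return max_iter

start = [KAPPA, 1.0]                         # a starting point that makes steepest descent zigzag
sd_iters = count_iterations(False, start)
cg_iters = count_iterations(True, start)
print("steepest descent:", sd_iters, "conjugate gradient:", cg_iters)
```

From this starting point each steepest descent step only shrinks the iterate by a factor of $(\kappa - 1)/(\kappa + 1)$ while flipping the sign of one coordinate, which is the criss-cross pattern; the conjugate direction should reach the minimum in N = 2 steps, up to roundoff.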

Three of the best known formulas for $\beta_n$ are named Fletcher–Reeves (FR), Polak–Ribière (PR), and Hestenes–Stiefel (HS) after their developers. They are given by the following formulas:

- Fletcher–Reeves: $$\beta_n^{FR} = \frac{\Delta x_n^\top \Delta x_n}{\Delta x_{n-1}^\top \Delta x_{n-1}}$$

- Polak–Ribière: $$\beta_n^{PR} = \frac{\Delta x_n^\top (\Delta x_n - \Delta x_{n-1})}{\Delta x_{n-1}^\top \Delta x_{n-1}}$$

- Hestenes–Stiefel: $$\beta_n^{HS} = -\frac{\Delta x_n^\top (\Delta x_n - \Delta x_{n-1})}{s_{n-1}^\top (\Delta x_n - \Delta x_{n-1})}.$$

These formulas are equivalent for a quadratic function, but for nonlinear optimization the preferred formula is a matter of heuristics or taste. A popular choice is $\beta = \max\{0, \beta^{PR}\}$, which provides a direction reset automatically.
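The formulas can be written as small functions and compared numerically. The sketch below assumes the usual convention that $\Delta x_n = -\nabla_x f(x_n)$ is the steepest direction and $s_{n-1}$ the previous search direction; the function names and the two-variable quadratic used for the check are hypothetical. The check performs one exact line search on that quadratic and then evaluates all three formulas, which should agree up to roundoff.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def beta_fr(dx_new, dx_old):
    # Fletcher-Reeves
    return dot(dx_new, dx_new) / dot(dx_old, dx_old)

def beta_pr(dx_new, dx_old):
    # Polak-Ribiere
    diff = [a - b for a, b in zip(dx_new, dx_old)]
    return dot(dx_new, diff) / dot(dx_old, dx_old)

def beta_hs(dx_new, dx_old, s_old):
    # Hestenes-Stiefel
    diff = [a - b for a, b in zip(dx_new, dx_old)]
    return -dot(dx_new, diff) / dot(s_old, diff)

def beta_pr_plus(dx_new, dx_old):
    # Clamped Polak-Ribiere: a negative value becomes 0, i.e. a direction reset
    return max(0.0, beta_pr(dx_new, dx_old))

# Hypothetical quadratic f(x) = 0.5 * x^T Q x - c^T x, with gradient Q x - c
Q = [[4.0, 1.0], [1.0, 3.0]]
c = [1.0, 2.0]

def mat_vec(m, v):
    return [dot(row, v) for row in m]

def steepest(x):
    return [ci - qi for qi, ci in zip(mat_vec(Q, x), c)]   # -(Q x - c)

x0 = [2.0, 1.0]
dx0 = steepest(x0)
s0 = dx0[:]
alpha0 = dot(dx0, s0) / dot(s0, mat_vec(Q, s0))            # exact line minimum for a quadratic
x1 = [xi + alpha0 * si for xi, si in zip(x0, s0)]
dx1 = steepest(x1)

b_fr = beta_fr(dx1, dx0)
b_pr = beta_pr(dx1, dx0)
b_hs = beta_hs(dx1, dx0, s0)
print(b_fr, b_pr, b_hs)
```

The agreement relies on the line search being exact, which makes the new gradient orthogonal to the previous search direction; with inexact line searches or a non-quadratic function the three values generally differ.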

Newton-based methods (the Newton–Raphson algorithm and quasi-Newton methods such as the BFGS method) tend to converge in fewer iterations, although each iteration typically requires more computation than a conjugate gradient iteration: Newton's method requires computing the Hessian (matrix of second derivatives) in addition to the gradient, and quasi-Newton methods maintain an approximation of it. Quasi-Newton methods also require more memory to operate (see also the limited-memory L-BFGS method).

External links

- An Introduction to the Conjugate Gradient Method Without the Agonizing Pain by Jonathan Richard Shewchuk.

- Numerical Recipes in C - The Art of Scientific Computing, chapter 10, section 6: Conjugate Gradient Methods in Multidimensions; William H. Press (Editor), Saul A. Teukolsky (Editor), William T. Vetterling (Author), Brian P. Flannery (Author), Cambridge University Press; 2nd edition (1992).


Wikimedia Foundation. 2010.