Climateprediction.net

- Developer(s): Oxford University
- Initial release: September 12, 2003
- Development status: Active
- Operating system: Cross-platform
- Platform: BOINC
- Website: climateprediction.net
- Average performance: 32.6 TFLOPS[1]
- Active users: 20,274
- Total users: 252,234
- Active hosts: 27,527
- Total hosts: 507,912

Climateprediction.net, or CPDN, is a distributed computing project to investigate and reduce uncertainties in climate modelling. It aims to do this by running hundreds of thousands of different models (a large climate ensemble) using the donated idle time of ordinary personal computers, thereby leading to a better understanding of how models are affected by small changes in the many parameters known to influence the global climate.[2]

The project relies on volunteer computing through the BOINC framework: participants agree to run parts of the project on their personal computers (the client side) after receiving tasks from the project's servers (the server side) for processing.

CPDN, which is run primarily by Oxford University in England, has harnessed more computing power and generated more data than any other climate modelling project.[3] It has produced over 100 million model years of data so far.[4] As of December 2010, there were more than 32,000 active participants from 147 countries with a total BOINC credit of more than 14 billion, reporting about 90 teraflops (90 trillion floating-point operations per second) of processing power.[5]


Aims

The aim of the Climateprediction.net project is to investigate the uncertainties in various parameterizations that have to be made in state-of-the-art climate models.[6] The model is run thousands of times with slight perturbations to various physics parameters (a 'large ensemble'), and the project examines how the model output changes. These parameters are not known exactly, and the variations are within what is subjectively considered to be a plausible range. This will allow the project to improve understanding of how sensitive the models are to small changes, and also to changes such as those in carbon dioxide levels and the sulphur cycle. In the past, estimates of climate change have had to be made using one or, at best, a very small ensemble (tens rather than thousands) of model runs. By using participants' computers, the project will be able to improve understanding of, and confidence in, climate change predictions more than would ever be possible using the supercomputers currently available to scientists.
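The idea of a perturbed-parameter ensemble can be sketched in a few lines. In this minimal sketch, assuming each parameter has a short list of plausible values, every ensemble member gets one value per parameter, and no two members share the same combination. The parameter names (`param_a` and so on), their values and the `build_ensemble` helper are all hypothetical; they are not the real CPDN parameter set or its ranges.

```python
import itertools
import random

# Hypothetical parameters and "plausible" values for illustration only;
# the real CPDN parameter list and ranges are not reproduced here.
PLAUSIBLE_VALUES = {
    "param_a": [0.5, 1.0, 2.0],
    "param_b": [0.65, 0.7, 0.9],
    "param_c": [1.0, 3.0, 9.0],
}


def build_ensemble(plausible_values, n_members, seed=0):
    """Return up to n_members distinct parameter sets.

    Each set takes one plausible value per parameter; sets are drawn without
    replacement from the full grid of combinations, so no two members share
    the same combination.
    """
    names = sorted(plausible_values)
    grid = list(itertools.product(*(plausible_values[name] for name in names)))
    rng = random.Random(seed)
    chosen = rng.sample(grid, min(n_members, len(grid)))
    return [dict(zip(names, combo)) for combo in chosen]


ensemble = build_ensemble(PLAUSIBLE_VALUES, n_members=5)
for member in ensemble:
    print(member)
```

Enumerating the full grid only works for a handful of parameters; with many parameters the number of combinations explodes, and drawing each value independently would be the more practical choice.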

The Climateprediction.net experiment should help to "improve methods to quantify uncertainties of climate projections and scenarios, including long-term ensemble simulations using complex models", identified by the Intergovernmental Panel on Climate Change (IPCC) in 2001 as a high priority. The project hopes that the experiment will give decision makers a better scientific basis for addressing one of the biggest potential global problems of the 21st century.

As shown in the graph above, the various models have a fairly wide distribution of results over time. For each curve, a bar on the far right shows the final temperature range for the corresponding model version. As would be expected, the further into the future the models are extended, the wider the variation between them. Roughly half of the variation depends on the future climate forcing scenario rather than on uncertainties in the models. Any reduction in this variation, whether from better scenarios or from improved models, is desirable. Climateprediction.net is working on the model uncertainties, not the scenarios.

The crux of the problem is that scientists can run models and see that x% of the models warm y degrees in response to z climate forcings, but how can they know whether x% is a good representation of the probability of that happening in the real world? Scientists are uncertain about this and want to improve the level of confidence that can be achieved. Some models will be good and some poor at reproducing past climate when given past climate forcings and initial conditions (a hindcast). It makes sense to trust the models that recreate the past well more than those that do poorly, so models that do poorly will be downweighted.[2][7]
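The downweighting described above can be illustrated with a small sketch. It assumes each model has a single hindcast error score and converts it into a weight with a Gaussian likelihood; that weighting function, the `sigma` scale and the four-member ensemble are illustrative assumptions, not the project's documented scheme. The point is only that "x% of models warm y degrees" changes once poor hindcasters count for less.

```python
import math


def hindcast_weights(hindcast_errors, sigma):
    """Normalised weights that shrink as a model's hindcast error grows.

    Assumes a Gaussian likelihood exp(-0.5 * (error / sigma)**2); this is an
    illustrative choice, not CPDN's documented weighting scheme.
    """
    raw = [math.exp(-0.5 * (err / sigma) ** 2) for err in hindcast_errors]
    total = sum(raw)
    return [r / total for r in raw]


def weighted_fraction_above(warming, weights, threshold):
    """Weighted share of ensemble members that warm by more than threshold."""
    return sum(w for dt, w in zip(warming, weights) if dt > threshold)


# Hypothetical four-member ensemble: two models hindcast well, two poorly.
errors = [0.1, 0.1, 1.0, 1.0]    # hindcast error (K)
warming = [2.0, 3.0, 5.0, 6.0]   # projected warming (K)
weights = hindcast_weights(errors, sigma=0.5)
unweighted = sum(dt > 4.0 for dt in warming) / len(warming)
weighted = weighted_fraction_above(warming, weights, threshold=4.0)
print(f"unweighted: {unweighted:.2f}, weighted: {weighted:.2f}")
```

Here half of the members warm by more than 4 K, but because both of those members hindcast poorly, their weighted share is much smaller.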

The experiments

The different models that Climateprediction.net has distributed or will distribute are detailed below in chronological order. Anyone who has joined recently is likely to be running the Transient Coupled Model.

- Classic Slab Model - The original experiment, not run under BOINC. See The original model below for further details. This model remains in use solely for the Open University (OU) short course.[8]

- BOINC Slab Model - The same as the classic Slab Model, but released under BOINC.

- ThermoHaline Circulation Model (THC) - An investigation of how the climate might change in the event of a decrease in the strength of the thermohaline circulation. This experiment has been closed to new participants because the project has sufficient results. It was a four-phase model totalling 60 model years. The first three phases were identical to the above Slab Models. The fourth phase simulated a 50% slowdown in the thermohaline circulation by imposing sea surface temperature (SST) changes in the North Atlantic derived from other runs.[9]

- Sulfur Cycle Model - An investigation of the effect of sulfate aerosols on the climate. The experiment will model sulfur in a number of compound forms, including dimethyl sulfide and sulfate aerosols.[10] This experiment started in August 2005 and was a prerequisite for the Hindcast. It is a five-phase model totalling 75 model years. Each timestep takes around 70% longer to compute, making a run around 2.8 times longer than the initial slab model.[11] While a few models are still trickling in, no new models have been issued since 2006.[12]

- Coupled Spin-Up Model - Inclusion of oceanic influences into the basic model in a more dynamic and realistic way than the initial Slab Model. This was a prerequisite for the Hindcast. It has been completed and, as planned, was not publicly released. The fastest 200 to 500 computers were invited to join because it is a 200-year model and results were needed by February 2006 for the launch of the Transient coupled model.

- Transient coupled model - This comprises an 80 year Hindcast and an 80 year Forecast. The Hindcast is to test how well the models perform at recreating the climate of 1920 to 2000.[13] It was launched February 2006 under BBC Climate Change Experiment branding and later also released from the CPDN site.

- Seasonal Attribution Project - This is a high-resolution model for a single model year to look at extreme precipitation events. This experiment is much shorter because it covers a single model year, but there are 13.5 times as many cells, and timesteps are only 10 minutes instead of 30 minutes. This extra resolution means it requires at least 1.5 gigabytes of RAM. It uses the HadAM3-N144 climate model.[14]
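The relative run lengths quoted in the list can be checked with simple arithmetic. This worked sketch assumes that the slab models run three 15-year phases (45 model years, as described under The original model), reads "timesteps are around 70% longer" as each timestep needing about 70% more computing time, and assumes that cost scales linearly with model years, grid cells and timesteps. These scaling assumptions are simplifications, not figures from the project.

$$
\frac{\text{cost}_{\text{sulfur}}}{\text{cost}_{\text{slab}}} \approx \frac{75\ \text{model years}}{45\ \text{model years}} \times 1.7 = \frac{5}{3} \times 1.7 \approx 2.83
$$

$$
\frac{\text{cost per model year}_{\text{SAP}}}{\text{cost per model year}_{\text{standard}}} \approx 13.5 \times \frac{30\ \text{min}}{10\ \text{min}} = 13.5 \times 3 = 40.5
$$

The first result agrees with the quoted "around 2.8 times". The second suggests why a single high-resolution model year is still a substantial job despite the experiment's short length.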

History

Myles Allen first thought about the need for large climate ensembles in 1997, but only learned of the success of SETI@home in 1999. The first funding proposal, in April 1999, was rejected as utterly unrealistic.

Following a presentation at the World Climate Conference in Hamburg in September 1999 and a commentary in Nature entitled Do it yourself climate prediction[15] in October 1999, thousands signed up for the program, which was supposedly about to become available. The bursting of the dot-com bubble did not help, and the project team realised they would have to do most of the programming themselves rather than outsourcing it.

It was launched September 12, 2003 and on September 13, 2003 the project exceeded the capacity of the Earth Simulator to become the world's largest climate modelling facility.

The 2003 launch only offered a Windows "classic" client. On 26 August 2004, a BOINC client was launched that supported Windows, Linux and Mac OS X. "Classic" will continue to be available for a number of years in support of the Open University course. BOINC has stopped distributing classic models in favour of sulfur cycle models. A more user-friendly BOINC client and website called GridRepublic, which supports climateprediction.net and other BOINC projects, was released in beta in 2006.

A thermohaline circulation slowdown experiment was launched in May 2004 under the classic framework to coincide with the film The Day After Tomorrow. This program can still be run but is no longer downloadable. The scientific analysis has been written up in Nick Faull's thesis. A paper about the thesis is still to be completed. There is no further planned research with this model.

A sulfur cycle model was launched in August 2005. These models took longer to complete than the original models because they had five phases instead of three, and each timestep was also more complicated.

By November 2005, the number of completed results totalled 45,914 classic models, 3,455 thermohaline models, 85,685 BOINC models and 352 sulfur cycle models. This represented over 6 million model years processed.
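The "over 6 million model years" figure can be cross-checked from the run counts. This sketch assumes the run lengths given elsewhere in this article (45 model years for classic and BOINC slab runs, 60 for thermohaline runs, 75 for sulfur cycle runs); the dictionary names are illustrative.

```python
# Model years per completed run, taken from the phase descriptions in this
# article (classic/BOINC slab: 3 x 15 years; THC: 60; sulfur cycle: 75).
MODEL_YEARS_PER_RUN = {"classic": 45, "thermohaline": 60, "boinc": 45, "sulfur": 75}

# Completed results as of November 2005.
COMPLETED_BY_NOV_2005 = {
    "classic": 45_914,
    "thermohaline": 3_455,
    "boinc": 85_685,
    "sulfur": 352,
}


def total_model_years(completed, years_per_run):
    """Sum of (number of completed runs x model years per run)."""
    return sum(count * years_per_run[kind] for kind, count in completed.items())


total_runs = sum(COMPLETED_BY_NOV_2005.values())
total_years = total_model_years(COMPLETED_BY_NOV_2005, MODEL_YEARS_PER_RUN)
print(f"{total_runs:,} runs, {total_years:,} model years")
```

Under these assumptions the total comes to roughly 6.16 million model years, consistent with the figure quoted above.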

In February 2006, the project moved on to more realistic climate models. A BBC Climate Change Experiment[16] was launched, attracting around 23,000 participants on the first day. The transient climate simulation introduced realistic oceans. This allowed the experiment to investigate changes in the climate response as the climate forcings change, rather than an equilibrium response to a significant change such as doubling the carbon dioxide level. The experiment therefore moved on to a hindcast of 1920 to 2000 as well as a forecast of 2000 to 2080. This model takes much longer to run.

The BBC's publicity brought over 120,000 participating computers to the project in the first three weeks.

In March 2006, a high resolution model was released as another project, the Seasonal Attribution Project.

In April 2006, the coupled models were found to have a data input problem. The work done was still useful, but for a different purpose than advertised, and new models had to be handed out.[17][18]

Results to date

The first results of the experiment were published in Nature in January 2005 and showed that, with only slight changes to the parameters within plausible ranges, the models can show climate sensitivities ranging from less than 2 °C to more than 11 °C (see [19] and explanation[20]). The higher climate sensitivities have been challenged as implausible, for example by Gavin Schmidt (a climate modeler with the NASA Goddard Institute for Space Studies in New York).[21]

Explanation

Climate sensitivity is defined as the equilibrium response of global mean temperature to a doubling of the carbon dioxide level. Current carbon dioxide levels are around 380 ppm and growing at a rate of 1.8 ppm per year, compared with a preindustrial level of 280 ppm.
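To put these figures in perspective, doubling the preindustrial level means reaching about 560 ppm. The worked estimate below assumes that the quoted growth rate of 1.8 ppm per year stays constant, which is a simplifying assumption rather than a projection.

$$
\Delta t \approx \frac{2 \times 280\ \text{ppm} - 380\ \text{ppm}}{1.8\ \text{ppm/yr}} = \frac{180\ \text{ppm}}{1.8\ \text{ppm/yr}} = 100\ \text{years}
$$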

Climate sensitivities of greater than 5 °C are widely accepted as being catastrophic.[citation needed] The possibility of such high sensitivities being plausible given observations had been reported prior to the Climateprediction.net experiment but "this is the first time GCMs have produced such behaviour".[who?]

Even the models with very high climate sensitivity were found to be "as realistic as other state-of-the-art climate models". Realism was tested with a root mean square error test. This test does not check the realism of seasonal changes, and more diagnostic measures may place stronger constraints on what is realistic. Improved realism tests are being developed.
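A root mean square error test of this kind can be sketched as follows. The article does not say which fields, weights or pass threshold were used, so the optional area weights and the `as_realistic_as_references` criterion (no worse than the worst reference model) are hypothetical. Note that a single RMSE over annual-mean fields cannot detect seasonal-cycle errors, which is the limitation mentioned above.

```python
import math


def rmse(model_field, observed_field, weights=None):
    """Root mean square error between a simulated and an observed field.

    Both fields are flat sequences of grid-point values. Optional weights
    (for example grid-cell areas) let larger cells count for more; without
    them every point counts equally.
    """
    if len(model_field) != len(observed_field):
        raise ValueError("fields must have the same number of points")
    if weights is None:
        weights = [1.0] * len(model_field)
    squared = sum(w * (m - o) ** 2
                  for m, o, w in zip(model_field, observed_field, weights))
    return math.sqrt(squared / sum(weights))


def as_realistic_as_references(model_rmse, reference_rmses):
    """Hypothetical criterion: no worse than the worst reference model."""
    return model_rmse <= max(reference_rmses)


print(rmse([0.0, 0.0], [3.0, 4.0]))
```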

It is important to the experiment, and to the goal of obtaining a probability density function (pdf) of climate outcomes, to get a very wide range of behaviours, even if only to rule some behaviours out as unrealistic. Unless a very large number of data points is used to create the pdf, the pdf is not reliable. Therefore, models with climate sensitivities as high as 11 °C are included even though they are inaccurate. More worrying is the lack of models with a climate sensitivity of less than 2 °C. The sulfur cycle experiment is likely to extend the range downwards.
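One simple way to turn ensemble results into a pdf is a normalised histogram. This sketch is an illustration of the general technique, not the project's method, and the seven sensitivities are hypothetical. With so few members a single model can fill a whole bin (as the 11 °C value does here), which is why a reliable pdf needs a very large ensemble.

```python
import bisect


def histogram_pdf(samples, bin_edges):
    """Estimate a pdf as a normalised histogram of ensemble results.

    bin_edges must be increasing. Returns one density per bin, scaled so that
    sum(density * bin_width) == 1 over the samples inside the edges; samples
    outside the edges are ignored.
    """
    n_bins = len(bin_edges) - 1
    counts = [0] * n_bins
    for x in samples:
        i = bisect.bisect_right(bin_edges, x) - 1
        if i == n_bins and x == bin_edges[-1]:
            i -= 1  # include the right-most edge in the last bin
        if 0 <= i < n_bins:
            counts[i] += 1
    inside = sum(counts)
    if inside == 0:
        raise ValueError("no samples fall inside the bin edges")
    return [c / (inside * (bin_edges[i + 1] - bin_edges[i]))
            for i, c in enumerate(counts)]


# Hypothetical climate sensitivities (°C) from a tiny ensemble.
sensitivities = [1.9, 2.5, 2.7, 3.1, 3.4, 4.8, 11.0]
edges = [0, 2, 4, 6, 8, 10, 12]
print(histogram_pdf(sensitivities, edges))
```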

Piani et al. (2005)

Published in Geophysical Research Letters, this paper concludes:

When an internally consistent representation of the origins of model-data discrepancy is used to calculate the probability density function of climate sensitivity, the 5th and 95th percentiles are 2.2 K and 6.8 K respectively. These results are sensitive, particularly the upper bound, to the representation of the origins of model data discrepancy.[22]
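To make concrete what the 5th and 95th percentiles of a distribution mean, the sketch below reads them off a weighted ensemble by accumulating weight in sorted order. This is only an illustration of percentiles; it is not Piani et al.'s method, which derives the pdf from a representation of model-data discrepancy. The values and weights are hypothetical.

```python
def weighted_percentile(values, weights, q):
    """Smallest value whose cumulative weight reaches fraction q (0 < q <= 1)."""
    if not 0 < q <= 1:
        raise ValueError("q must be in (0, 1]")
    pairs = sorted(zip(values, weights))
    total = sum(w for _, w in pairs)
    cumulative = 0.0
    for value, weight in pairs:
        cumulative += weight
        if cumulative >= q * total:
            return value
    return pairs[-1][0]  # guard against floating-point shortfall


sensitivities = [2.0, 3.0, 4.0, 7.0]  # hypothetical values (K)
weights = [0.1, 0.2, 0.3, 0.4]        # hypothetical normalised weights
p5 = weighted_percentile(sensitivities, weights, 0.05)
p95 = weighted_percentile(sensitivities, weights, 0.95)
print(p5, p95)
```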

Use in education

There is an Open University short course[23] and teaching material[24] available for schools to teach subjects relating to climate and climate modelling. There is also teaching material available for use in Key Stage 3/4 Science, A level Physics (Advanced Physics), Key Stage 3/4 Mathematics, Key Stage 3/4 Geography, 21st Century Science, Science for Public Understanding, Use of Mathematics, and Primary.

The original model

The original experiment is run with HadSM3, which is the atmosphere from the HadCM3 model but with only a "slab" ocean rather than a full dynamic ocean. This is faster (and requires less memory) than the full model, but lacks dynamical feedbacks from the ocean, which are incorporated into the full coupled-ocean-atmosphere models used to make projections of climate change out to 2100.

Each downloaded model comes with a slight variation in the various model parameters.

There is an initial "calibration phase" of 15 model years in which the model calculates the "flux correction": the extra ocean-atmosphere fluxes needed to keep the model ocean in balance. (The model ocean does not include currents; these fluxes to some extent replace the heat that would be transported by the missing currents.)

Then there is a "control phase" of 15 years in which the ocean temperatures are allowed to vary. The flux correction ought to keep the model stable, but feedbacks developed in some of the runs. There is a quality control check, based on the annual mean temperatures, and models which fail this check are discarded.
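The article says only that the control-phase check is based on annual mean temperatures. One plausible form of such a check, sketched here as an assumption, is to fit a least-squares trend to the annual means and discard runs that drift too fast. Both the trend test and the `max_drift` threshold are hypothetical, not the project's actual criterion.

```python
def control_drift(annual_means):
    """Least-squares trend of annual mean temperature, in degrees per model year."""
    n = len(annual_means)
    if n < 2:
        raise ValueError("need at least two annual means")
    x_mean = (n - 1) / 2
    y_mean = sum(annual_means) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(annual_means))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


def passes_control_check(annual_means, max_drift=0.02):
    """Keep a run only if its control-phase drift is small.

    max_drift (degrees per year) is a hypothetical threshold, not the
    project's actual criterion.
    """
    return abs(control_drift(annual_means)) <= max_drift


stable = [14.0] * 15
drifting = [14.0 + 0.1 * year for year in range(15)]
print(passes_control_check(stable), passes_control_check(drifting))
```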

Then there is a "double CO2 phase" in which the CO2 content is instantaneously doubled and the model is run for a further 15 years, which in some cases is not quite enough model time for it to settle down to a new (warmer) equilibrium. In this phase, some models that produced physically unrealistic results were again discarded.

The quality control checks in the control and doubled-CO2 phases were quite weak: they suffice to exclude obviously unphysical models but do not include (for example) a test of the simulation of the seasonal cycle, so some of the models that passed may still be unrealistic. Further quality control measures are being developed.

The temperature in the doubled-CO2 phase is exponentially extrapolated to estimate the equilibrium temperature. The difference between this temperature and that of the control phase then gives a measure of the climate sensitivity of that particular version of the model.
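The extrapolation step can be sketched under the assumption that temperature approaches equilibrium as T(t) = T_eq - c·exp(-t/τ). The exact fitting procedure used by the project is not described here, so this sketch swaps in a three-point (Aitken) extrapolation over block means: for such a curve the means of three equal consecutive blocks approach T_eq geometrically, which lets the formula recover T_eq exactly for noise-free data while averaging out some year-to-year noise. The function names and synthetic data are illustrative.

```python
import math
from statistics import fmean


def extrapolate_equilibrium(annual_means):
    """Estimate equilibrium temperature from a doubled-CO2 run.

    Assumes T(t) = T_eq - c * exp(-t / tau). The series is split into three
    equal consecutive blocks; for such a curve the block means approach T_eq
    geometrically, so the three-point (Aitken) formula recovers T_eq exactly
    for noise-free data.
    """
    n = len(annual_means) // 3
    if n == 0:
        raise ValueError("need at least three annual means")
    m0 = fmean(annual_means[:n])
    m1 = fmean(annual_means[n:2 * n])
    m2 = fmean(annual_means[2 * n:3 * n])
    denom = m0 + m2 - 2.0 * m1
    if abs(denom) < 1e-12:
        return m2  # no curvature to extrapolate: use the latest block
    return (m0 * m2 - m1 * m1) / denom


def climate_sensitivity(control_means, doubled_means):
    """Equilibrium warming relative to the control-phase mean."""
    return extrapolate_equilibrium(doubled_means) - fmean(control_means)


# Synthetic 15-year phases: the doubled-CO2 run has not yet reached its
# 3 K equilibrium warming by the end of the phase.
control = [14.0] * 15
doubled = [17.0 - 3.0 * math.exp(-year / 6.0) for year in range(15)]
print(round(climate_sensitivity(control, doubled), 3))
```

Simply averaging the last five years of this synthetic run would underestimate the sensitivity, which is why an extrapolation is needed when 15 years is not enough to settle down.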

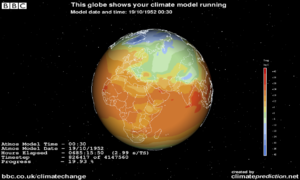

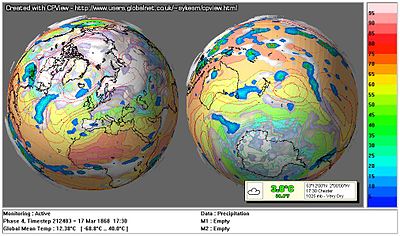

Visualisations

Most distributed computing projects have screensavers to visually indicate the activity of the application, but they do not usually show its results as they are being calculated. By contrast, climateprediction.net not only uses a built-in visualisation to show the climate of the world being modelled, but the visualisation is interactive, allowing different aspects of climate (temperature, rainfall, etc.) to be displayed. In addition, other, more advanced visualisation programs allow the user to see more of what the model is doing (usually by analysing previously generated results) and to compare different runs and models.

As of December 2008, no visualisation tool works with the newer CPDN models. Neither CPView nor Advanced Visualisation has been updated to display data from those models, so users can only visualise the data through the screensaver.

The real-time desktop visualisation for the model launched in 2003 was developed by NAG,[25] enabling users to track the progress of their simulation as cloud cover and temperature change over the surface of the globe. Other, more advanced visualisation programs in use include CPView and IDL Advanced Visualisation, which have similar functionality. CPView was written by Martin Sykes, a participant in the experiment. The IDL Advanced Visualisation was written by Andy Heaps of the University of Reading (UK) and modified to work with the BOINC version by Tessella Support Services plc.

Only CPView allows users to look at unusual diagnostics, rather than the usual Temperature, Pressure, Rainfall, Snow, and Clouds.[26] Up to five sets of data can be displayed on a map. It also has a wider range of functions, such as Max, Min, further memory functions, and other features.

The Advanced Visualisation has functions for graphs of local areas and over 1 day, 2 days, and 7 days, as well as the more usual graphs of season and annual averages (which both packages do). There are also Latitude - Height plots and Time - Height plots.

The download size is much smaller for CPView, and CPView works with Windows 98. Running the visualisation or screensaver may slow down processing and is not recommended.[citation needed]

See also

- List of distributed computing projects

- Climate model

- Global climate model

- Distributed computing

- BOINC

- Climate ensemble

- Sensitivity analysis and Uncertainty analysis

References

- ^ de Zutter W. "Climate Prediction: Credit overview". boincstats.com. http://boincstats.com/stats/project_graph.php?pr=cpdn. Retrieved 2011-11-09.

- ^ a b "About the project". Climateprediction.net. http://climateprediction.net/content/about-climatepredictionnet-project. Retrieved 2011-02-20.

- ^ "BBC quote of Nick Faull". Bbc.co.uk. 2007-01-21. http://www.bbc.co.uk/sn/climateexperiment/theexperiment/abouttheexperiment.shtml. Retrieved 2011-02-20.

- ^ "Climate prediction.net". tessella.com. http://www.climateprediction.net. Retrieved 2010-12-13.

- ^ "Detailed user, host, team and country statistics with graphs for BOINC". boincstats.com. http://boincstats.com/stats/project_graph.php?pr=cpdn. Retrieved 2010-12-13.

- ^ "Modelling The Climate". Climateprediction.net. http://www.climateprediction.net/science/model-intro.php. Retrieved 2011-02-20.

- ^ BOINC Wiki Science Introduction which has been edited by Dave Frame[dead link]

- ^ [1][dead link]

- ^ "About THC". Climateprediction.net. http://www.climateprediction.net/science/thc_about.php. Retrieved 2011-02-20.

- ^ "gateway". Climateprediction.net. http://www.climateprediction.net/science/s-cycle.php. Retrieved 2011-02-20.

- ^ "Sulfur Cycle". Climateprediction.net. http://www.climateprediction.net/science/s-cycle.php. Retrieved 2011-02-20.

- ^ "Project Stats". Climateapps2.oucs.ox.ac.uk. http://climateapps2.oucs.ox.ac.uk/cpdnboinc/proj_stat.php. Retrieved 2011-02-20.

- ^ "Strategy - see experiment 2". Climateprediction.net. http://www.climateprediction.net/science/strategy.php. Retrieved 2011-02-20.

- ^ SAP - About[dead link]

- ^ http://www.climateprediction.net/science/pubs/nature_allen_141099.pdf

- ^ "climateprediction.net - BBC Climate Change Experiment". Bbc.cpdn.org. 2007-05-20. http://bbc.cpdn.org/. Retrieved 2011-02-20.

- ^ myles (2006-04-19). "Message Board • View topic - Message From Principal Investigator Re: April 2006 Problem". Climateprediction.net. http://www.climateprediction.net/board/viewtopic.php?t=4762. Retrieved 2011-02-20.

- ^ "Potted History" (PDF). http://www.climateprediction.net/science/pubs/nerc2005allen.pdf. Retrieved 2011-02-20.

- ^ Stainforth, D. A.; Aina, T.; Christensen, C.; Collins, M.; Faull, N.; Frame, D. J.; Kettleborough, J. A.; Knight, S. et al. (2005). "Uncertainty in predictions of the climate response to rising levels of greenhouse gases". Nature 433 (7024): 403–406. doi:10.1038/nature03301. PMID 15674288. http://www.climateprediction.net/science/pubs/nature_first_results.pdf.

- ^ http://www.boinc-wiki.ath.cx/index.php?title=Explanation_of_the_Nature_Journal_-_First_CPDN_Results

- ^ "Climate Less Sensitive to Greenhouse Gases Than Predicted, Study Says". News.nationalgeographic.com. 2010-10-28. http://news.nationalgeographic.com/news/2006/04/0419_060419_global_warming_2.html. Retrieved 2011-02-20.

- ^ Piani, C.; Frame, D. J.; Stainforth, D. A.; Allen, M. R. (2005). "Constraints on climate change from a multi-thousand member ensemble of simulations". Geophysical Research Letters 32 (23). doi:10.1029/2005GL024452. http://climateprediction.net/science/pubs/Piani_GRL.pdf.

- ^ [2][dead link]

- ^ http://climateprediction.net/schools/resources.php

- ^ http://www.climateprediction.net/science/pubs/tr1_06.pdf

- ^ "Data Index". Users.globalnet.co.uk. 2004-08-17. http://www.users.globalnet.co.uk/~sykesm/cpviewdataindex.html. Retrieved 2011-02-20.

External links

- ClimatePrediction.net Website

- Berkeley Open Infrastructure for Network Computing (BOINC)

- BOINC Wiki

- GridRepublic

- Statistics for ClimatePrediction.net

- Volunteer@Home.com — All about volunteer computing

- Video of the screensaver.

- BBC News story (retrieved 14 February 2006)


Wikimedia Foundation. 2010.