Massively parallel

Massively parallel is a term used in computer science, life sciences, medical diagnostics, and other fields.

A massively parallel computer is a distributed-memory computer system made up of many individual nodes. Each node is essentially an independent computer in itself, with at least one processor, its own memory, and a link to the network that connects all the nodes together. Such systems have many independent arithmetic units or entire microprocessors that run in parallel. The term "massive" connotes hundreds if not thousands of such units. Nodes communicate by passing messages, using standards such as MPI.

In this class of computing, all of the processing elements are connected together to form one very large computer. This contrasts with distributed computing, where massive numbers of separate computers are used to solve a single problem.
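The message-passing model described above can be illustrated with a small sketch. This is not MPI itself: as an assumption made for illustration, Python threads stand in for nodes and a `queue.Queue` stands in for the interconnect, and the names `node` and `parallel_sum_of_squares` are hypothetical. Each simulated node works only on its own private chunk of data and reports its result by sending a message, never by sharing variables.

```python
import queue
import threading


def node(rank, chunk, outbox):
    """One simulated node: compute on private data, then send one message."""
    partial = sum(x * x for x in chunk)  # local work on this node's own chunk
    outbox.put((rank, partial))          # "send" the partial result to the root


def parallel_sum_of_squares(data, num_nodes):
    """Scatter data across simulated nodes and combine their messages."""
    inbox = queue.Queue()  # the root's receive channel (stand-in for the network)
    # Scatter step: node r gets every num_nodes-th element starting at index r.
    chunks = [data[r::num_nodes] for r in range(num_nodes)]
    workers = [
        threading.Thread(target=node, args=(r, chunks[r], inbox))
        for r in range(num_nodes)
    ]
    for w in workers:
        w.start()
    total = 0
    for _ in range(num_nodes):  # "receive" exactly one message per node
        _rank, partial = inbox.get()
        total += partial
    for w in workers:
        w.join()
    return total
```

Calling `parallel_sum_of_squares(list(range(10)), 4)` splits the ten values across four simulated nodes and combines four messages at the root. On a real massively parallel machine the same scatter and reduce pattern would typically be written against an MPI library, with each node running as a separate process on separate hardware.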

Supercomputers

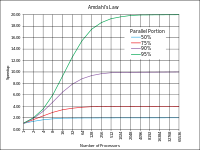

As of 2005, nearly all supercomputers are massively parallel, with the largest having several hundred thousand CPUs. The combined output of the many constituent CPUs can yield a large total peak FLOPS (floating-point operations per second) figure. The amount of computation actually accomplished depends on the nature of the computational task and its implementation. Some problems divide more naturally into parallel computational tasks than others. When a problem depends on sequential stages of computation, some processors must remain idle while waiting for results from other processors, which lowers efficiency. The efficient implementation of computational tasks on parallel computers is an active area of research. See also Parallel computing.

As transistor densities have grown in line with Moore's law, single-chip implementations of massively parallel processor arrays are becoming cost-effective and are finding particular application in high-performance embedded applications such as video compression. Examples include chips from Ambric, Coherent Logix, picoChip, and Tilera.
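The gap between peak and achieved performance can be estimated with a rough model. The sketch below uses Amdahl's law, which the article does not name and which is brought in here as an assumption: if a fraction s of the work is strictly sequential, the speedup on n processors is at most 1 / (s + (1 - s) / n). The function names and the example figures are hypothetical, chosen only for illustration.

```python
def peak_flops(num_cpus, flops_per_cpu):
    """Theoretical peak: every CPU busy at its full rate all the time."""
    return num_cpus * flops_per_cpu


def amdahl_speedup(serial_fraction, num_cpus):
    """Upper bound on speedup when serial_fraction of the work cannot be split."""
    parallel_fraction = 1.0 - serial_fraction
    return 1.0 / (serial_fraction + parallel_fraction / num_cpus)


def parallel_efficiency(serial_fraction, num_cpus):
    """Fraction of the machine doing useful work under the same model."""
    return amdahl_speedup(serial_fraction, num_cpus) / num_cpus
```

Under this model, a task that is 5% sequential can never run more than 20 times faster than on one processor, however many processors are added, so `amdahl_speedup(0.05, 1000)` stays below 20 and most of the 1000 processors sit idle for much of the run. The model ignores communication cost, which on a real machine reduces the achieved figure further.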

In medicine

In life science and medical diagnostics, massively parallel chemical reactions are used to reduce the time and cost of an analysis or synthesis procedure, often to provide ultra-high throughput. For example, in ultra-high-throughput DNA sequencing, as introduced in August 2005, there may be 500,000 sequencing-by-synthesis operations occurring in parallel.[1]

Example systems

The earliest massively parallel processing (MPP) systems all used serial computers as individual processing elements in order to achieve the maximum number of independent units for a given size and cost. At one time, many of the most powerful supercomputers were MPP systems. Early examples include the Distributed Array Processor (DAP), the Goodyear MPP, the Connection Machine, the Ultracomputer, and all machines that came out of the ESPRIT 1085 project (1985), such as the Telmat MegaNode, which used Transputers.

See also

- Fifth generation computer systems project

- Massively parallel processor array

- Multiprocessing

- Parallel computing

- Process oriented programming

- Shared nothing architecture (SN)

- Symmetric multiprocessing (SMP)

References

- Gilbert Kalb, Robert Moxley (1992). Massively Parallel, Optical, and Neural Computing in the United States. Moxley. ISBN 9051990979.


Wikimedia Foundation. 2010.