Cache coherence

In computing, cache coherence (also cache coherency) refers to the consistency of data stored in local caches of a shared resource.

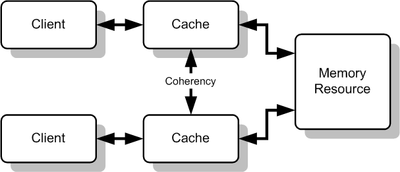

When clients in a system maintain caches of a common memory resource, inconsistent data can become a problem. This is particularly true of CPUs in a multiprocessing system. Referring to the "Multiple Caches of Shared Resource" figure, if the top client holds a copy of a memory block from a previous read and the bottom client changes that block, the top client can be left with a stale copy without any notification of the change. Cache coherence is intended to manage such conflicts and keep the caches and memory consistent.
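The stale-copy scenario can be illustrated with a small simulation. This is only a sketch under stated assumptions: `CachingClient`, the `top` and `bottom` instances, and the address `0x10` are hypothetical names chosen for illustration, and the private cache is modelled as a write-through dictionary with no coherence mechanism at all.

```python
class CachingClient:
    """Hypothetical client with a private write-through cache and NO coherence."""

    def __init__(self, memory):
        self.memory = memory  # shared backing store (a plain dict)
        self.cache = {}       # private cache: address -> value

    def read(self, addr):
        # On a miss, fetch from shared memory; on a hit, trust the local copy.
        if addr not in self.cache:
            self.cache[addr] = self.memory[addr]
        return self.cache[addr]

    def write(self, addr, value):
        # Write-through: update the local copy and shared memory,
        # but never notify the other clients.
        self.cache[addr] = value
        self.memory[addr] = value


memory = {0x10: 1}
top = CachingClient(memory)
bottom = CachingClient(memory)

first_read = top.read(0x10)   # top caches the block
bottom.write(0x10, 2)         # bottom changes the block
stale_read = top.read(0x10)   # top still sees its old copy

print(first_read, stale_read, memory[0x10])
```

In this sketch the second read by `top` returns the old value even though shared memory already holds the new one, which is exactly the inconsistency a coherence mechanism is meant to prevent.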

Coherency protocol

A coherency protocol is a protocol that maintains consistency between all the caches in a system of distributed shared memory. The protocol maintains memory coherence according to a specific consistency model. Coherency protocols in multiprocessors are often described in terms of the sequential consistency model, although modern high-performance processors generally implement weaker (relaxed) memory models rather than sequential consistency; distributed shared memory systems typically support release consistency or weak consistency models.

Transitions between states in any specific implementation of these protocols may vary. For example, an implementation may choose different update and invalidation transitions such as update-on-read, update-on-write, invalidate-on-read, or invalidate-on-write. The choice of transition may affect the amount of inter-cache traffic, which in turn may affect the amount of cache bandwidth available for actual work. This should be taken into consideration in the design of distributed software that could cause strong contention between the caches of multiple processors.
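How the choice between invalidation and update transitions affects inter-cache traffic can be estimated with a rough model. The sketch below is an assumption-laden illustration, not a description of any real protocol: the function `count_messages` and its cost model (one message per block fetch, per invalidation, and per update, for a single block) are hypothetical simplifications that ignore message sizes and timing.

```python
def count_messages(policy, ops):
    """Count coherence messages for one block.

    policy: "invalidate" (invalidate-on-write) or "update" (update-on-write).
    ops: list of (op, cache_id) pairs, where op is "r" or "w".
    Assumed cost model: 1 message per fetch, per invalidation, per update.
    """
    holders = set()  # caches currently holding a valid copy
    messages = 0
    for op, cid in ops:
        if cid not in holders:
            messages += 1          # read or write miss: fetch the block
            holders.add(cid)
        if op == "w":
            others = holders - {cid}
            messages += len(others)  # one invalidate or update per other sharer
            if policy == "invalidate":
                holders = {cid}      # other copies become invalid
            # under "update" the other sharers keep valid, refreshed copies
    return messages


# Scenario A: three caches read once, then cache 0 writes four times.
repeated_writes = [("r", 1), ("r", 2), ("r", 3)] + [("w", 0)] * 4

# Scenario B: cache 0 writes and cache 1 reads, alternating four times.
producer_consumer = [("w", 0), ("r", 1)] * 4

for name, ops in [("repeated writes", repeated_writes),
                  ("producer/consumer", producer_consumer)]:
    print(name,
          "invalidate:", count_messages("invalidate", ops),
          "update:", count_messages("update", ops))
```

Under this simplified model, invalidation produces less traffic when one cache writes repeatedly to a block that others no longer read, while updating produces less traffic when each write is followed by a read from another cache.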

Various models and protocols have been devised for maintaining cache coherence, such as MSI, MESI (also known as the Illinois protocol), MOSI, MOESI, MERSI, MESIF, write-once, Synapse, Berkeley, Firefly, and Dragon.
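As an illustration of the simplest of these, MSI, the following sketch tracks a single memory block across several caches using the three states Modified, Shared, and Invalid. It is a minimal model under stated assumptions: the class `MSIBlock` and its methods are hypothetical, every transition is treated as atomic, and bus arbitration, multiple blocks, and evictions are left out.

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"
    SHARED = "S"
    INVALID = "I"


class MSIBlock:
    """One memory block tracked across n caches with a simplified MSI protocol."""

    def __init__(self, n_caches, value=0):
        self.memory = value
        self.states = [State.INVALID] * n_caches
        self.data = [None] * n_caches

    def _write_back_owner(self):
        # If some cache holds the block Modified, write it back to memory
        # and downgrade that copy to Shared.
        for i, state in enumerate(self.states):
            if state is State.MODIFIED:
                self.memory = self.data[i]
                self.states[i] = State.SHARED

    def read(self, cid):
        if self.states[cid] is State.INVALID:
            self._write_back_owner()
            self.data[cid] = self.memory
            self.states[cid] = State.SHARED
        return self.data[cid]

    def write(self, cid, value):
        if self.states[cid] is not State.MODIFIED:
            self._write_back_owner()
            # Invalidate every other copy before taking ownership.
            for i in range(len(self.states)):
                if i != cid:
                    self.states[i] = State.INVALID
                    self.data[i] = None
        self.data[cid] = value
        self.states[cid] = State.MODIFIED


block = MSIBlock(n_caches=2, value=1)
before = block.read(0)   # cache 0: Invalid -> Shared
block.write(1, 2)        # cache 1: Invalid -> Modified, cache 0 invalidated
after = block.read(0)    # cache 1 writes back; both caches end up Shared

print(before, after, [s.value for s in block.states])
```

Because the write by cache 1 invalidates the copy held by cache 0, the later read by cache 0 misses and picks up the new value instead of a stale one.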

The choice of consistency model is crucial to the design of a cache-coherent system. Coherence models differ in performance and scalability, so each must be evaluated for every system design.

Further reading

- Handy, Jim (1998). The Cache Memory Book. Academic Press, Inc. ISBN 0-12-322980-4.

Wikimedia Foundation. 2010.