Generation loss


Generation loss refers to the loss of quality between subsequent copies or transcodes of data. Anything that reduces the quality of the representation when copying, and would cause further reduction in quality on making a copy of the copy, can be considered a form of generation loss. File size increases are a common result of generation loss, as the introduction of artifacts may actually increase the entropy of the data through each generation.


Analog generation loss

In analog systems (including systems that use digital recording but make the copy over an analog connection), generation loss is mostly due to noise and bandwidth issues in cables, amplifiers, mixers, recording equipment and anything else between the source and the destination. Poorly adjusted distribution amplifiers and mismatched impedances can make these problems even worse. Repeated conversion between analog and digital can also cause loss.

Generation loss was a major consideration in complex analog audio and video editing, where multi-layered edits were often created by making intermediate mixes which were then "bounced down" back onto tape. Careful planning was required to minimize generation loss and the resulting noise and poor frequency response.

One way of minimizing the number of generations needed was to use an audio mixing or video editing suite capable of mixing a large number of channels at once; in the extreme case, for example with a 48-track recording studio, an entire complex mixdown could be done in a single generation, although this was prohibitively expensive for all but the best-funded projects.

Professional analog noise reduction systems such as Dolby A helped reduce the amount of audible generation loss, but they were eventually superseded by digital systems, which vastly reduced generation loss.

Digital generation loss

Digital technology used correctly can eliminate generation loss. Copying a digital file gives an exact copy if the equipment is operating properly. This trait of digital technology has given rise to awareness of the risk of unauthorized copying. Before digital technology was widespread, a record label, for example, could rest easy in knowing that unauthorized copies of their music tracks were never as good as the originals.

Techniques that cause generation loss in digital systems

In digital systems, several techniques that are used for their other advantages may reintroduce generation loss and must be used with caution. Copying a digital file itself, however, incurs no generation loss: the copied file is identical to the original, provided the copying channel is error-free.
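One way to confirm that a digital copy is exact is to compare cryptographic hashes across generations. The following minimal sketch uses only the Python standard library. It copies a random file ten times in a chain, with each copy made from the previous one, and then compares SHA-256 digests. The helper name `sha256_of` is illustrative, and the check assumes the storage underneath is working correctly.

```python
import hashlib
import os
import shutil
import tempfile

def sha256_of(path: str) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()

with tempfile.TemporaryDirectory() as tmp:
    original = os.path.join(tmp, "original.bin")
    with open(original, "wb") as f:
        f.write(os.urandom(100_000))  # arbitrary test payload

    # Make a copy of the copy of the copy: ten digital "generations".
    current = original
    for gen in range(1, 11):
        nxt = os.path.join(tmp, f"gen{gen}.bin")
        shutil.copyfile(current, nxt)
        current = nxt

    same_after_ten = sha256_of(current) == sha256_of(original)

print(same_after_ten)  # True
```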

Some digital transforms are reversible, while many are not: lossless compression is, by definition, fully reversible, while lossy compression throws away some data which cannot be restored. Similarly, many DSP processes are not reversible.
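The difference between reversible and irreversible transforms shows up in a few lines of code. In the following sketch, `zlib` (lossless DEFLATE compression from the Python standard library) restores the data exactly. A toy "lossy codec" that keeps only the top 4 bits of each byte cannot recover the bits it discarded. The 4-bit truncation is an illustrative stand-in, not a real codec, and `lossy_encode` and `lossy_decode` are hypothetical names.

```python
import zlib

data = bytes(range(256)) * 4  # 1024 bytes of sample data

# Lossless: compressing and decompressing recovers every byte.
restored = zlib.decompress(zlib.compress(data))
print(restored == data)  # True

def lossy_encode(buf: bytes) -> bytes:
    """Toy lossy step: keep only the top 4 bits of each byte."""
    return bytes(b >> 4 for b in buf)

def lossy_decode(buf: bytes) -> bytes:
    """Rebuild 8-bit values; the discarded low bits come back as zeros."""
    return bytes(b << 4 for b in buf)

approx = lossy_decode(lossy_encode(data))
print(approx == data)                                  # False
print(max(abs(a - b) for a, b in zip(data, approx)))   # 15
```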

Careful planning of an audio or video signal chain from beginning to end, arranged to minimize repeated conversions, is therefore important to avoid generation loss. Arbitrary choices of pixel dimensions and sampling rates for the source, destination, and intermediate stages can seriously degrade digital signals, even though digital technology has the potential to eliminate generation loss completely.
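The cost of arbitrary intermediate sizes can be shown with a one-dimensional signal resampled to a new length and back. This sketch assumes simple linear interpolation; real image scalers and sample-rate converters use better filters. The function `resample_linear` is a hypothetical helper. With this interpolator, going through an 8-point grid loses detail. Going through a 21-point grid, which contains every original sample position, returns the source unchanged. That exact round trip is a property of this toy interpolator and is not a general guarantee.

```python
def resample_linear(signal: list[float], new_len: int) -> list[float]:
    """Linearly interpolate `signal` onto `new_len` evenly spaced points
    spanning the same interval (first and last samples are kept)."""
    n = len(signal)
    if new_len == 1:
        return [signal[0]]
    out = []
    for i in range(new_len):
        pos = i * (n - 1) / (new_len - 1)  # fractional index into the source
        lo = int(pos)
        hi = min(lo + 1, n - 1)
        frac = pos - lo
        out.append(signal[lo] * (1 - frac) + signal[hi] * frac)
    return out

source = [0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0]  # 11 samples

# Arbitrary intermediate size: the 8-point grid misses most source samples.
arbitrary = resample_linear(resample_linear(source, 8), 11)

# Related size: the 21-point grid includes every source sample position.
related = resample_linear(resample_linear(source, 21), 11)

error = max(abs(a - b) for a, b in zip(source, arbitrary))
print(f"arbitrary round trip, max error: {error:.2f}")
print("related round trip exact:", related == source)  # True
```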

Similarly, lossy compression should ideally be applied only once, at the end of the workflow involving the file, after all required changes have been made.

Transcoding

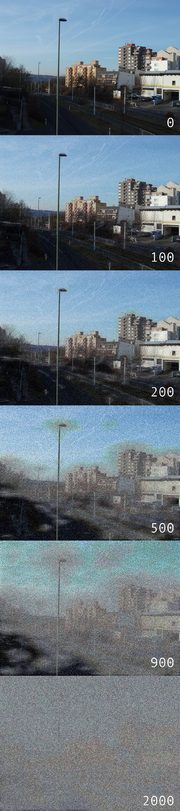

Converting between lossy formats – be it decoding and re-encoding to the same format, between different formats, or between different bitrates or parameters of the same format – causes generation loss.

Repeated applications of lossy compression and decompression can cause generation loss, particularly if the parameters used are not consistent across generations. Ideally an algorithm will be both idempotent, meaning that if the signal is decoded and then re-encoded with identical settings, there is no loss, and scalable, meaning that if it is re-encoded with lower quality settings, the result will be the same as if it had been encoded from the original signal – see Scalable Video Coding. More generally, transcoding between different parameters of a particular encoding will ideally yield the greatest common shared quality – for instance, converting from an image with 4 bits of red and 8 bits of green to one with 8 bits of red and 4 bits of green would ideally yield simply an image with 4 bits of red color depth and 4 bits of green color depth without further degradation.
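The following sketch illustrates idempotence and the lack of scalability. A uniform scalar quantizer stands in for a lossy codec. This is an assumption for illustration: real codecs add color conversion, transforms, and extra rounding, so they need not behave this cleanly. Re-encoding with the same step changes nothing. Re-encoding with a different step produces a larger error than applying that step to the original signal directly. The helpers `quantize` and `max_error` are hypothetical.

```python
def quantize(values: list[int], step: int) -> list[int]:
    """Toy lossy 'codec': snap each value to the nearest multiple of `step`."""
    return [step * round(v / step) for v in values]

def max_error(a: list[int], b: list[int]) -> int:
    """Largest absolute difference between corresponding samples."""
    return max(abs(x - y) for x, y in zip(a, b))

signal = list(range(0, 100, 3))  # arbitrary integer samples

gen1 = quantize(signal, 10)     # first generation, step 10
gen2_same = quantize(gen1, 10)  # re-encoded with identical settings
gen2_diff = quantize(gen1, 7)   # re-encoded with different settings
direct = quantize(signal, 7)    # step 7 applied to the original

print(gen2_same == gen1)                                        # True: idempotent
print(max_error(signal, gen2_diff), max_error(signal, direct))  # 7 3
```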

Some lossy compression algorithms are much worse than others in this regard, being neither idempotent nor scalable, and introducing further degradation if parameters are changed.

For example, with JPEG, changing the quality setting causes different quantization constants to be used, which introduces additional loss. Further, because a JPEG image is divided into 16×16 blocks (or 16×8, or 8×8, depending on chroma subsampling), cropping that does not fall on a block boundary shifts the encoding blocks and causes substantial degradation; similar problems occur on rotation. This can be avoided by using jpegtran or similar tools for cropping. Similar degradation occurs if video keyframes do not line up from generation to generation.
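The block sizes above follow from the luma sampling factors (H, V): the block size in pixels is 8·H by 8·V. The following sketch uses the hypothetical helpers `mcu_size`, `is_block_aligned`, and `snap_crop_origin`. It checks whether a crop's top-left corner falls on a block boundary and shows how that corner can be moved up and to the left to the nearest boundary. Lossless croppers keep whole blocks, so they rely on the same constraint. This code only does the arithmetic and does not touch JPEG data.

```python
def mcu_size(h_samp: int, v_samp: int) -> tuple[int, int]:
    """Block (MCU) size in pixels for luma sampling factors (H, V).

    (1, 1) is 4:4:4 -> 8x8; (2, 1) is 4:2:2 -> 16x8; (2, 2) is 4:2:0 -> 16x16.
    """
    return 8 * h_samp, 8 * v_samp

def is_block_aligned(x: int, y: int, h_samp: int, v_samp: int) -> bool:
    """True if a crop's top-left corner (x, y) lies on a block boundary."""
    w, h = mcu_size(h_samp, v_samp)
    return x % w == 0 and y % h == 0

def snap_crop_origin(x: int, y: int, h_samp: int, v_samp: int) -> tuple[int, int]:
    """Move (x, y) up and to the left onto the nearest block boundary."""
    w, h = mcu_size(h_samp, v_samp)
    return x - x % w, y - y % h

# An 8-pixel offset is aligned for 4:4:4 but splits 4:2:0 chroma blocks.
print(is_block_aligned(8, 8, 1, 1))    # True
print(is_block_aligned(8, 8, 2, 2))    # False
print(snap_crop_origin(37, 21, 2, 2))  # (32, 16)
```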

Editing

Digital resampling, such as image scaling, and other DSP techniques can also introduce artifacts or degrade the signal-to-noise ratio (S/N ratio) each time they are used, even if the underlying storage is lossless.

Resampling causes aliasing, which both blurs low-frequency components and adds high-frequency noise, producing jaggies. Rounding off computations to fit in finite precision introduces quantization, causing banding; if this is masked with dither, it becomes noise instead. In both cases, these effects at best degrade the signal's S/N ratio and may also cause artifacts. Quantization can be reduced by using high precision while editing (notably floating-point numbers) and reducing back to fixed precision only at the end.
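The benefit of working at high precision can be shown with a chain of gain edits that should cancel out. This minimal sketch assumes gain changes as a stand-in for general DSP edits. In one path every intermediate result is rounded to an integer, as if each edit were saved at 8-bit precision. In the other path the chain runs in floating point and is rounded only once at the end. The function `apply_gains` is hypothetical.

```python
def apply_gains(samples: list[int], gains: list[float], round_each_step: bool) -> list[int]:
    """Apply a chain of gain edits to integer samples.

    If round_each_step is True, each intermediate result is rounded back to an
    integer (fixed precision between edits). Otherwise the chain runs in
    floating point and is rounded only once, at the end.
    """
    result = []
    for s in samples:
        v = float(s)
        for g in gains:
            v *= g
            if round_each_step:
                v = float(round(v))  # quantize after every edit
        result.append(round(v))      # final conversion to integer output
    return result

samples = list(range(256))   # every 8-bit level
gains = [0.8, 1.25] * 5      # ten edits whose nominal net gain is 1.0

fixed = apply_gains(samples, gains, round_each_step=True)
floating = apply_gains(samples, gains, round_each_step=False)

print(floating == samples)  # True: rounding happened only once
print(sum(a != b for a, b in zip(fixed, samples)), "levels altered by intermediate rounding")
```

In the fixed-precision path, a level such as 3 drifts to 2 after the first pair of edits and never returns. In the floating-point path, the tiny representation errors stay far below half a level, so the final rounding removes them.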

Often, particular implementations fall short of theoretical ideals.

See also

- Signal-to-noise ratio

- Editing digital images

- Lossless data compression


Wikimedia Foundation. 2010.