- Characteristic function (probability theory)


In probability theory and statistics, the characteristic function of any random variable completely defines its probability distribution. Thus it provides the basis of an alternative route to analytical results compared with working directly with probability density functions or cumulative distribution functions. There are particularly simple results for the characteristic functions of distributions defined by the weighted sums of random variables.

In addition to univariate distributions, characteristic functions can be defined for vector- or matrix-valued random variables, and can even be extended to more generic cases.

The characteristic function always exists when treated as a function of a real-valued argument, unlike the moment-generating function. There are relations between the behavior of the characteristic function of a distribution and properties of the distribution, such as the existence of moments and the existence of a density function.


Introduction

The characteristic function provides an alternative way of describing a random variable. Similarly to the cumulative distribution function

$$F_X(x) = \operatorname{E}[\,\mathbf{1}_{\{X\leq x\}}\,]$$

(where $\mathbf{1}_{\{X\leq x\}}$ is the indicator function: it is equal to 1 when X ≤ x, and zero otherwise)

which completely determines behavior and properties of the probability distribution of the random variable X, the characteristic function

$$\varphi_X(t) = \operatorname{E}[\,e^{itX}\,]$$

also completely determines behavior and properties of the probability distribution of the random variable X. The two approaches are equivalent in the sense that knowing one of the functions it is always possible to find the other, yet they both provide different insight for understanding the features of the random variable. However, in particular cases, there can be differences in whether these functions can be represented as expressions involving simple standard functions.

If a random variable admits a density function, then the characteristic function is its dual, in the sense that each of them is a Fourier transform of the other. If a random variable has a moment-generating function $M_X(t)$, then the domain of the characteristic function can be extended to the complex plane, and

$$\varphi_X(-it) = M_X(t).$$

Note, however, that the characteristic function of a distribution always exists, even when the probability density function or moment-generating function does not.

The characteristic function approach is particularly useful in analysis of linear combinations of independent random variables. Another important application is to the theory of the decomposability of random variables.

Definition

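For a scalar random variable X the characteristic function is defined as the expected value of $e^{itX}$, where i is the imaginary unit and t ∈ R is the argument of the characteristic function:

$$\varphi_X\!:\mathbb{R}\to\mathbb{C}; \quad \varphi_X(t) = \operatorname{E}\big[e^{itX}\big] = \int_{-\infty}^\infty e^{itx}\,dF_X(x) \qquad \left( = \int_{-\infty}^\infty e^{itx} f_X(x)\,dx \right)$$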

Here FX is the cumulative distribution function of X, and the integral is of the Riemann–Stieltjes kind. If random variable X has a probability density function ƒX, then the characteristic function is its Fourier transform,[2] and the last formula in parentheses is valid.
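As a rough illustration of the definition, the expectation $\operatorname{E}[e^{itX}]$ can be estimated by averaging $e^{itx}$ over simulated draws and compared with a known closed form. The sketch below assumes a normal distribution with mean 1 and standard deviation 2; the helper names `empirical_cf` and `normal_cf`, the seed, and the sample size are illustrative choices, not part of the article.

```python
import cmath
import random


def empirical_cf(samples, t):
    """Monte Carlo estimate of E[exp(i t X)] from a list of draws of X."""
    return sum(cmath.exp(1j * t * x) for x in samples) / len(samples)


def normal_cf(t, mu=0.0, sigma=1.0):
    """Closed-form characteristic function of N(mu, sigma^2)."""
    return cmath.exp(1j * t * mu - 0.5 * (sigma * t) ** 2)


rng = random.Random(0)  # fixed seed, chosen arbitrarily for reproducibility
samples = [rng.gauss(1.0, 2.0) for _ in range(50_000)]

for t in (0.0, 0.25, 0.5, 1.0):
    estimate = empirical_cf(samples, t)
    exact = normal_cf(t, mu=1.0, sigma=2.0)
    print(f"t={t}: estimate={estimate:.4f}, exact={exact:.4f}")
```

The two columns should agree up to Monte Carlo error, which shrinks roughly like one over the square root of the sample size.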

Note, though, that this convention for the constants appearing in the definition of the characteristic function differs from the usual convention for the Fourier transform.[3] For example, some authors[4] define $\varphi_X(t) = \operatorname{E}[e^{-2\pi itX}]$, which is essentially a change of parameter. Other notation may be encountered in the literature: $\hat p$ as the characteristic function for a probability measure p, or $\hat f$ as the characteristic function corresponding to a density ƒ.

The notion of characteristic functions generalizes to multivariate random variables and more complicated random elements. The argument of the characteristic function will always belong to the continuous dual of the space where the random variable X takes values. For common cases such definitions are listed below:

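- If X is a k-dimensional random vector, then for $t \in \mathbb{R}^k$

  $$\varphi_X(t) = \operatorname{E}\big[\,\exp(i\,t^T\!X)\,\big],$$

- If X is a k×p-dimensional random matrix, then for $t \in \mathbb{R}^{k\times p}$

  $$\varphi_X(t) = \operatorname{E}\big[\,\exp(i\,\operatorname{tr}(t^T\!X))\,\big],$$

- If X is a complex random variable, then for $t \in \mathbb{C}$ [5]

  $$\varphi_X(t) = \operatorname{E}\big[\,\exp(i\,\operatorname{Re}(\overline{t}X))\,\big],$$

- If X is a k-dimensional complex random vector, then for $t \in \mathbb{C}^k$ [6]

  $$\varphi_X(t) = \operatorname{E}\big[\,\exp(i\,\operatorname{Re}(t^*\!X))\,\big],$$

- If X(s) is a stochastic process, then for all functions t(s) such that the integral $\int_{\mathbb{R}} t(s)X(s)\,ds$ converges for almost all realizations of X [7]

  $$\varphi_X(t) = \operatorname{E}\Big[\,\exp\Big(i\int_{\mathbb{R}} t(s)X(s)\,ds\Big)\,\Big].$$

Here $^T$ denotes the matrix transpose, tr(·) is the matrix trace operator, Re(·) is the real part of a complex number, $\overline{z}$ denotes the complex conjugate, and $^*$ is the conjugate transpose (that is, $z^* = \overline{z}^T$).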

Examples

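| Distribution | Characteristic function φ(t) |
|---|---|
| Degenerate $\delta_a$ | $e^{ita}$ |
| Bernoulli Bern(p) | $1 - p + p\,e^{it}$ |
| Binomial B(n, p) | $(1 - p + p\,e^{it})^n$ |
| Negative binomial NB(r, p) | $\left(\dfrac{1-p}{1 - p\,e^{it}}\right)^{r}$ |
| Poisson Pois(λ) | $e^{\lambda(e^{it} - 1)}$ |
| Uniform U(a, b) | $\dfrac{e^{itb} - e^{ita}}{it(b - a)}$ |
| Laplace L(μ, b) | $\dfrac{e^{it\mu}}{1 + b^2t^2}$ |
| Normal N(μ, σ²) | $e^{it\mu - \frac{1}{2}\sigma^2t^2}$ |
| Chi-square $\chi^2_k$ | $(1 - 2it)^{-k/2}$ |
| Cauchy Cauchy(μ, θ) | $e^{it\mu - \theta\lvert t\rvert}$ |
| Gamma Γ(k, θ) | $(1 - it\theta)^{-k}$ |
| Exponential Exp(λ) | $(1 - it\lambda^{-1})^{-1}$ |
| Multivariate normal N(μ, Σ) | $e^{i\,t^T\mu - \frac{1}{2}t^T\Sigma t}$ |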

Oberhettinger (1973) provides extensive tables of characteristic functions.

Properties

- The characteristic function of a random variable always exists, since it is an integral of a bounded continuous function over a space whose measure is finite.

- A characteristic function is uniformly continuous on the entire space.

- It is non-vanishing in a region around zero: φ(0) = 1.

- It is bounded: | φ(t) | ≤ 1.

- It is Hermitian: $\varphi(-t) = \overline{\varphi(t)}$. In particular, the characteristic function of a symmetric (around the origin) random variable is real-valued and even.

- There is a bijection between distribution functions and characteristic functions. That is, for any two random variables X1, X2

  $$F_{X_1} = F_{X_2} \quad\Leftrightarrow\quad \varphi_{X_1} = \varphi_{X_2}$$

- If a random variable X has moments up to k-th order, then the characteristic function φX is k times continuously differentiable on the entire real line. In this case

  $$\operatorname{E}[X^k] = (-i)^k \varphi_X^{(k)}(0).$$

- If a characteristic function φX has a k-th derivative at zero, then the random variable X has all moments up to k if k is even, but only up to k – 1 if k is odd.[8]

- If X1, …, Xn are independent random variables, and a1, …, an are some constants, then the characteristic function of the linear combination of the Xi's is

  $$\varphi_{a_1X_1+\cdots+a_nX_n}(t) = \varphi_{X_1}(a_1t)\cdots\varphi_{X_n}(a_nt).$$

  One specific case is the sum of two independent random variables X1 and X2, in which case

  $$\varphi_{X_1+X_2}(t) = \varphi_{X_1}(t)\,\varphi_{X_2}(t).$$

- The tail behavior of the characteristic function determines the smoothness of the corresponding density function.
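Several of the properties listed above are easy to check numerically on a grid. The sketch below uses the exponential distribution with rate 2 and the Laplace distribution centred at zero with scale 1.5 as assumed examples; the function names and the grid are illustrative only, and a grid check is of course not a proof.

```python
def exponential_cf(t, rate=2.0):
    """Characteristic function of Exp(rate): (1 - i t / rate)^(-1)."""
    return rate / (rate - 1j * t)


def laplace_cf(t, b=1.5):
    """Characteristic function of the Laplace distribution L(0, b)."""
    return 1.0 / (1.0 + (b * t) ** 2)


grid = [k / 10 for k in range(-50, 51)]

# phi(0) = 1
value_at_zero = exponential_cf(0.0)

# Boundedness: |phi(t)| <= 1 for every t on the grid.
bounded = all(abs(exponential_cf(t)) <= 1.0 + 1e-12 for t in grid)

# Hermitian symmetry: phi(-t) equals the complex conjugate of phi(t).
hermitian = all(
    abs(exponential_cf(-t) - exponential_cf(t).conjugate()) < 1e-12 for t in grid
)

# A distribution symmetric about the origin has a real, even characteristic function.
real_and_even = all(
    isinstance(laplace_cf(t), float) and laplace_cf(t) == laplace_cf(-t) for t in grid
)

print(value_at_zero, bounded, hermitian, real_and_even)
```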

Continuity

The bijection stated above between probability distributions and characteristic functions is continuous. That is, whenever a sequence of distribution functions { Fj(x) } converges (weakly) to some distribution F(x), the corresponding sequence of characteristic functions { φj(t) } will also converge, and the limit φ(t) will correspond to the characteristic function of law F. More formally, this is stated as

- Lévy’s continuity theorem: A sequence { Xj } of n-variate random variables converges in distribution to random variable X if and only if the sequence { φXj } converges pointwise to a function φ which is continuous at the origin. Then φ is the characteristic function of X.[9]

This theorem is frequently used to prove the law of large numbers, and the central limit theorem.
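The way the continuity theorem enters a proof of the central limit theorem can be illustrated numerically. The sketch below assumes i.i.d. variables uniform on $[-\sqrt{3}, \sqrt{3}]$ (mean 0, variance 1), whose characteristic function is $\sin(\sqrt{3}t)/(\sqrt{3}t)$, and measures how far the characteristic function of the standardized sum is from $e^{-t^2/2}$ on a grid. The function names and the grid are hypothetical choices for this example.

```python
import math


def uniform_cf(t):
    """CF of U(-sqrt(3), sqrt(3)), which has mean 0 and variance 1."""
    if t == 0.0:
        return 1.0
    a = math.sqrt(3.0) * t
    return math.sin(a) / a


def standardized_sum_cf(t, n):
    """CF of (X_1 + ... + X_n) / sqrt(n) for i.i.d. X_i distributed as above."""
    return uniform_cf(t / math.sqrt(n)) ** n


def max_gap(n, grid):
    """Largest distance on the grid to the standard normal CF exp(-t^2 / 2)."""
    return max(abs(standardized_sum_cf(t, n) - math.exp(-0.5 * t * t)) for t in grid)


grid = [k / 10 for k in range(-50, 51)]
for n in (1, 10, 100, 1000):
    print(n, max_gap(n, grid))
```

The printed gap should shrink as n grows, which is the pointwise convergence of characteristic functions that the theorem converts into convergence in distribution.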

Inversion formulas

Since there is a one-to-one correspondence between cumulative distribution functions and characteristic functions, it is always possible to find one of these functions if we know the other one. The formula in the definition of the characteristic function allows us to compute φ when we know the distribution function F (or density ƒ). If, on the other hand, we know the characteristic function φ and want to find the corresponding distribution function, then one of the following inversion theorems can be used.

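Theorem. If the characteristic function φX is integrable, then FX is absolutely continuous, and therefore X has a probability density function given by

$$f_X(x) = F_X'(x) = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{-itx}\varphi_X(t)\,dt$$

when X is scalar; in the multivariate case the pdf is understood as the Radon–Nikodym derivative of the distribution μX with respect to the Lebesgue measure λ:

$$f_X(x) = \frac{d\mu_X}{d\lambda}(x) = \frac{1}{(2\pi)^n}\int_{\mathbb{R}^n} e^{-i(t\cdot x)}\varphi_X(t)\,\lambda(dt).$$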

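Theorem (Lévy).[10] If φX is the characteristic function of the distribution function FX, and two points a < b are such that {x | a < x < b} is a continuity set of μX (in the univariate case this condition is equivalent to continuity of FX at points a and b), then

$$F_X(b) - F_X(a) = \frac{1}{2\pi}\lim_{T\to\infty}\int_{-T}^{T} \frac{e^{-ita} - e^{-itb}}{it}\,\varphi_X(t)\,dt$$

if X is scalar, and

$$\mu_X\big(\{a<x<b\}\big) = \frac{1}{(2\pi)^n}\lim_{T_1\to\infty}\cdots\lim_{T_n\to\infty}\int_{-T_1}^{T_1}\cdots\int_{-T_n}^{T_n}\prod_{k=1}^{n}\left(\frac{e^{-it_ka_k} - e^{-it_kb_k}}{it_k}\right)\varphi_X(t)\,\lambda(dt_1\times\cdots\times dt_n)$$

if X is a vector random variable.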

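Theorem. If a is (possibly) an atom of X (in the univariate case this means a point of discontinuity of FX) then

$$F_X(a) - F_X(a-0) = \lim_{T\to\infty}\frac{1}{2T}\int_{-T}^{T} e^{-ita}\varphi_X(t)\,dt$$

when X is a scalar random variable, and

$$\mu_X(\{a\}) = \lim_{T_1\to\infty}\cdots\lim_{T_n\to\infty}\left(\prod_{k=1}^{n}\frac{1}{2T_k}\right)\int_{-T_1}^{T_1}\cdots\int_{-T_n}^{T_n} e^{-i(t\cdot a)}\varphi_X(t)\,\lambda(dt)$$

when X is a vector random variable.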

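Theorem (Gil-Pelaez).[11] For a univariate random variable X, if x is a continuity point of FX then

$$F_X(x) = \frac{1}{2} - \frac{1}{\pi}\int_0^\infty \frac{\operatorname{Im}[e^{-itx}\varphi_X(t)]}{t}\,dt.$$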

Inversion formulas for multivariate distributions are also available.[12]
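The univariate inversion results lend themselves to direct numerical evaluation. The sketch below is a minimal illustration, assuming the standard normal characteristic function $e^{-t^2/2}$, a truncated integration range, and a plain midpoint rule; the function names, `t_max`, and `steps` are arbitrary illustrative choices and not a recommended production scheme.

```python
import cmath
import math


def normal_cf(t):
    """Characteristic function of the standard normal distribution."""
    return cmath.exp(-0.5 * t * t)


def density_from_cf(cf, x, t_max=12.0, steps=4000):
    """Midpoint-rule approximation of (1/2pi) * int exp(-itx) cf(t) dt on [-t_max, t_max]."""
    h = 2.0 * t_max / steps
    total = 0.0
    for k in range(steps):
        t = -t_max + (k + 0.5) * h
        # The imaginary parts cancel by Hermitian symmetry, so only the real part is summed.
        total += (cmath.exp(-1j * t * x) * cf(t)).real
    return total * h / (2.0 * math.pi)


def cdf_gil_pelaez(cf, x, t_max=12.0, steps=4000):
    """Midpoint-rule approximation of the Gil-Pelaez formula on (0, t_max]."""
    h = t_max / steps
    total = 0.0
    for k in range(steps):
        t = (k + 0.5) * h  # midpoints avoid evaluating the integrand at t = 0
        total += (cmath.exp(-1j * t * x) * cf(t)).imag / t
    return 0.5 - total * h / math.pi


for x in (-1.0, 0.0, 1.0):
    exact_pdf = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    exact_cdf = 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    print(x, density_from_cf(normal_cf, x), exact_pdf, cdf_gil_pelaez(normal_cf, x), exact_cdf)
```

Truncation works here because the normal characteristic function decays very quickly; for slowly decaying characteristic functions the cutoff and step size would need more care.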

Criteria for characteristic functions

It is well known that any non-decreasing càdlàg function F with limits F(−∞) = 0, F(+∞) = 1 corresponds to a cumulative distribution function of some random variable.

There is also interest in finding similar simple criteria for when a given function φ could be the characteristic function of some random variable. The central result here is Bochner’s theorem, although its usefulness is limited because the main condition of the theorem, non-negative definiteness, is very hard to verify. Other theorems also exist, such as Khinchine’s, Mathias’s, or Cramér’s, although their application is just as difficult. Pólya’s theorem, on the other hand, provides a very simple convexity condition which is sufficient but not necessary. Characteristic functions which satisfy this condition are called Pólya-type.[13]

- Bochner’s theorem. An arbitrary function $\varphi\colon \mathbb{R}^n \to \mathbb{C}$ is the characteristic function of some random variable if and only if φ is positive definite, continuous at the origin, and φ(0) = 1.

- Khinchine’s criterion. An absolutely continuous complex-valued function φ equal to 1 at the origin is a characteristic function if and only if it admits the representation

  $$\varphi(t) = \int_{-\infty}^{\infty} g(t+\theta)\,\overline{g(\theta)}\,d\theta.$$

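- Mathias’ theorem. A real, even, continuous, absolutely integrable function φ equal to 1 at the origin is a characteristic function if and only if

  $$(-1)^n \int_{-\infty}^{\infty} \varphi(pt)\,e^{-t^2/2}\,H_{2n}(t)\,dt \;\geq\; 0$$

  for n = 0, 1, 2, … and all p > 0, where $H_{2n}$ denotes the Hermite polynomial of degree 2n.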

- Pólya’s theorem. If φ is a real-valued continuous function which satisfies the conditions

  - φ(0) = 1,
  - φ is even,
  - φ is convex for t > 0,
  - φ(∞) = 0,

  then φ(t) is the characteristic function of an absolutely continuous symmetric distribution.

- A convex linear combination $\sum_n a_n\varphi_n(t)$ (with $a_n \geq 0$, $\sum_n a_n = 1$) of a finite or a countable number of characteristic functions is also a characteristic function.

- The product $\prod_n \varphi_n(t)$ of a finite number of characteristic functions is also a characteristic function. The same holds for an infinite product provided that it converges to a function continuous at the origin.

- If φ is a characteristic function and α is a real number, then $\overline{\varphi}$, Re[φ], $|\varphi|^2$, and φ(αt) are also characteristic functions.
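Pólya's conditions are simple enough to test heuristically on a grid, which also illustrates that they are sufficient but not necessary. The sketch below is only a numerical sanity check under assumed settings (grid range, step count, tolerances, and the stand-in for the limit at infinity are all arbitrary); the function `satisfies_polya` is a hypothetical helper, not a standard routine.

```python
import math


def satisfies_polya(phi, t_max=20.0, steps=2000, tol=1e-9):
    """Grid check of Polya's conditions: phi(0)=1, even, convex for t>0, phi(inf)=0."""
    h = t_max / steps
    ts = [k * h for k in range(steps + 1)]
    vals = [phi(t) for t in ts]
    normalized = abs(vals[0] - 1.0) <= tol
    even = all(abs(phi(-t) - v) <= tol for t, v in zip(ts, vals))
    # Convexity on the grid: all second differences must be non-negative.
    convex = all(
        vals[k - 1] - 2.0 * vals[k] + vals[k + 1] >= -tol for k in range(1, steps)
    )
    vanishing = abs(vals[-1]) < 1e-3  # crude stand-in for phi(infinity) = 0
    return normalized and even and convex and vanishing


def cauchy_cf(t):
    """CF of the standard Cauchy distribution."""
    return math.exp(-abs(t))


def triangular_cf(t):
    """The function max(0, 1 - |t|), convex and decreasing for t > 0."""
    return max(0.0, 1.0 - abs(t))


def normal_cf(t):
    """CF of the standard normal distribution (concave near the origin)."""
    return math.exp(-0.5 * t * t)


print(satisfies_polya(cauchy_cf), satisfies_polya(triangular_cf), satisfies_polya(normal_cf))
```

The normal characteristic function fails the convexity check even though it is a genuine characteristic function, so a negative result from this check says nothing by itself.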

Uses

Because of the continuity theorem, characteristic functions are used in the most frequently seen proof of the central limit theorem. The main trick involved in making calculations with a characteristic function is recognizing the function as the characteristic function of a particular distribution.

Basic manipulations of distributions

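Characteristic functions are particularly useful for dealing with linear functions of independent random variables. For example, if X1, X2, ..., Xn is a sequence of independent (and not necessarily identically distributed) random variables, and

$$S_n = \sum_{i=1}^{n} a_i X_i,$$

where the ai are constants, then the characteristic function for Sn is given by

$$\varphi_{S_n}(t) = \varphi_{X_1}(a_1t)\,\varphi_{X_2}(a_2t)\cdots\varphi_{X_n}(a_nt).$$

In particular, $\varphi_{X+Y}(t) = \varphi_X(t)\,\varphi_Y(t)$. To see this, write out the definition of the characteristic function:

$$\varphi_{X+Y}(t) = \operatorname{E}\big[e^{it(X+Y)}\big] = \operatorname{E}\big[e^{itX}e^{itY}\big] = \operatorname{E}\big[e^{itX}\big]\operatorname{E}\big[e^{itY}\big] = \varphi_X(t)\,\varphi_Y(t).$$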

Observe that the independence of X and Y is required to establish the equality of the third and fourth expressions.
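The product rule for independent summands can be checked by simulation. The sketch below assumes X exponential with rate 2 and Y uniform on (−1, 1), drawn independently; the helper names, seed, and sample size are illustrative assumptions.

```python
import cmath
import math
import random


def empirical_cf(samples, t):
    """Monte Carlo estimate of E[exp(i t X)] from a list of draws."""
    return sum(cmath.exp(1j * t * x) for x in samples) / len(samples)


def exact_product(t):
    """phi_X(t) * phi_Y(t) for X ~ Exp(rate 2) and Y ~ U(-1, 1)."""
    exp_cf = 2.0 / (2.0 - 1j * t)
    uni_cf = math.sin(t) / t if t != 0.0 else 1.0
    return exp_cf * uni_cf


rng = random.Random(1)  # arbitrary fixed seed
n = 40_000
xs = [rng.expovariate(2.0) for _ in range(n)]
ys = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # drawn independently of xs
sums = [x + y for x, y in zip(xs, ys)]

for t in (0.5, 1.0, 2.0):
    lhs = empirical_cf(sums, t)                         # estimate of phi_{X+Y}(t)
    rhs = empirical_cf(xs, t) * empirical_cf(ys, t)     # product of the two estimates
    print(t, lhs, rhs, exact_product(t))
```

All three values on each line should agree up to sampling error; replacing `ys` by a copy of `xs` (a fully dependent pair) would break the agreement.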

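Another special case of interest is when ai = 1/n, so that Sn is the sample mean. In this case, writing $\overline{X}$ for the mean,

$$\varphi_{\overline{X}}(t) = \varphi_{X_1}(t/n)\,\varphi_{X_2}(t/n)\cdots\varphi_{X_n}(t/n),$$

which reduces to $\big(\varphi_X(t/n)\big)^n$ when the Xi are also identically distributed with common characteristic function φX.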

Moments

Characteristic functions can also be used to find moments of a random variable. Provided that the nth moment exists, characteristic function can be differentiated n times and

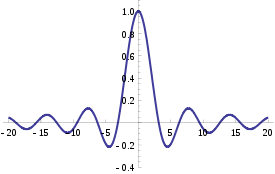

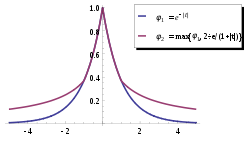

For example, suppose X has a standard Cauchy distribution. Then $\varphi_X(t) = e^{-|t|}$. This function is not differentiable at t = 0, showing that the Cauchy distribution has no expectation. Also, the sample mean $\overline{X}$ of n independent observations has characteristic function $\varphi_{\overline{X}}(t) = (e^{-|t|/n})^n = e^{-|t|}$, using the result from the previous section. This is the characteristic function of the standard Cauchy distribution: thus, the sample mean has the same distribution as the population itself.
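The moment formula can be tried out with finite differences. The sketch below assumes an exponential distribution with rate 2 (so that both the mean and the second moment equal 0.5) and uses central differences with an arbitrarily chosen step `h`; it also shows the mismatch of the one-sided slopes of the Cauchy characteristic function at zero. The function names are illustrative.

```python
import math


def exponential_cf(t, rate=2.0):
    """Characteristic function of Exp(rate)."""
    return rate / (rate - 1j * t)


def cauchy_cf(t):
    """Characteristic function of the standard Cauchy distribution."""
    return math.exp(-abs(t))


def first_two_moments(cf, h=1e-3):
    """Approximate E[X] and E[X^2] from central differences of cf at zero."""
    d1 = (cf(h) - cf(-h)) / (2.0 * h)                # approximates phi'(0)
    d2 = (cf(h) - 2.0 * cf(0.0) + cf(-h)) / h ** 2   # approximates phi''(0)
    m1 = (d1 / 1j).real          # E[X]   = i^{-1} phi'(0)
    m2 = (d2 / (1j ** 2)).real   # E[X^2] = i^{-2} phi''(0)
    return m1, m2


def one_sided_slopes(cf, h=1e-6):
    """Left and right difference quotients of cf at zero."""
    left = (cf(0.0) - cf(-h)) / h
    right = (cf(h) - cf(0.0)) / h
    return left, right


print(first_two_moments(exponential_cf))
print(one_sided_slopes(cauchy_cf))
```

For the Cauchy case the left and right slopes come out close to +1 and −1, so no derivative exists at the origin and the formula cannot be applied.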

The logarithm of a characteristic function is a cumulant generating function, which is useful for finding cumulants; note that some instead define the cumulant generating function as the logarithm of the moment-generating function, and call the logarithm of the characteristic function the second cumulant generating function.

Data analysis

Characteristic functions can be used as part of procedures for fitting probability distributions to samples of data. Cases where this provides a practicable option compared to other possibilities include fitting the stable distribution, since closed-form expressions for the density are not available, which makes implementation of maximum likelihood estimation difficult. Estimation procedures are available which match the theoretical characteristic function to the empirical characteristic function, calculated from the data. Paulson et al. (1975) and Heathcote (1977) provide some theoretical background for such an estimation procedure. In addition, Yu (2004) describes applications of empirical characteristic functions to fit time series models where likelihood procedures are impractical.
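The idea of matching a theoretical characteristic function to the empirical one can be sketched in a few lines. The example below is a deliberately simple stand-in, not the procedure of any of the cited papers: it assumes data from a Cauchy distribution with location 0 and unknown scale θ, whose characteristic function is $e^{-\theta|t|}$, and picks θ from a candidate grid by minimizing an unweighted sum of squared distances on a fixed grid of t values. All names, grids, and the seed are hypothetical.

```python
import cmath
import math
import random


def empirical_cf(samples, t):
    """Empirical characteristic function: the sample average of exp(i t x)."""
    return sum(cmath.exp(1j * t * x) for x in samples) / len(samples)


def fit_cauchy_scale(samples, t_grid, candidates):
    """Return the candidate scale whose CF is closest to the empirical CF on t_grid."""
    ecf = [empirical_cf(samples, t) for t in t_grid]

    def loss(theta):
        return sum(
            abs(e - math.exp(-theta * abs(t))) ** 2 for e, t in zip(ecf, t_grid)
        )

    return min(candidates, key=loss)


rng = random.Random(2)  # arbitrary fixed seed
true_scale = 1.5
# Inverse-CDF sampling of a Cauchy(0, true_scale) variable.
samples = [true_scale * math.tan(math.pi * (rng.random() - 0.5)) for _ in range(5_000)]

t_grid = [0.1 * k for k in range(1, 21)]
candidates = [0.05 * k for k in range(1, 101)]
estimate = fit_cauchy_scale(samples, t_grid, candidates)
print(estimate)
```

The choice of the t grid and of the weighting in the loss affects efficiency, which is part of what the theoretical literature on such estimators addresses.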

Example

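The Gamma distribution with scale parameter θ and shape parameter k has the characteristic function

$$\varphi(t) = (1 - \theta\,it)^{-k}.$$

Now suppose that we have

$$X \sim \Gamma(k_1, \theta) \quad\text{and}\quad Y \sim \Gamma(k_2, \theta)$$

with X and Y independent from each other, and we wish to know what the distribution of X + Y is. The characteristic functions are

$$\varphi_X(t) = (1 - \theta\,it)^{-k_1}, \qquad \varphi_Y(t) = (1 - \theta\,it)^{-k_2},$$

which by independence and the basic properties of the characteristic function leads to

$$\varphi_{X+Y}(t) = \varphi_X(t)\,\varphi_Y(t) = (1 - \theta\,it)^{-k_1}(1 - \theta\,it)^{-k_2} = (1 - \theta\,it)^{-(k_1+k_2)}.$$

This is the characteristic function of the gamma distribution with scale parameter θ and shape parameter k1 + k2, and we therefore conclude

$$X + Y \sim \Gamma(k_1 + k_2, \theta).$$

The result can be extended to n independent gamma distributed random variables with the same scale parameter, and we get

$$\forall i \in \{1,\ldots,n\}:\ X_i \sim \Gamma(k_i, \theta) \qquad\Rightarrow\qquad \sum_{i=1}^{n} X_i \sim \Gamma\left(\sum_{i=1}^{n} k_i,\ \theta\right).$$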
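The gamma example can be checked both algebraically and by simulation. The sketch below assumes shapes 2.0 and 3.5 with common scale 0.7, and relies on `random.gammavariate(alpha, beta)` from the Python standard library, where `beta` is the scale parameter; the helper names, seed, and sample size are illustrative choices.

```python
import cmath
import random


def gamma_cf(t, shape, scale):
    """Characteristic function (1 - i*scale*t)^(-shape) of the gamma distribution."""
    return (1.0 - 1j * scale * t) ** (-shape)


def empirical_cf(samples, t):
    """Monte Carlo estimate of E[exp(i t X)] from a list of draws."""
    return sum(cmath.exp(1j * t * x) for x in samples) / len(samples)


k1, k2, theta = 2.0, 3.5, 0.7  # assumed example parameters

# Algebraic check: the product of the two CFs equals the CF with shape k1 + k2.
t = 1.3
product = gamma_cf(t, k1, theta) * gamma_cf(t, k2, theta)
combined = gamma_cf(t, k1 + k2, theta)

# Simulation check: the empirical CF of X + Y against the combined CF.
rng = random.Random(3)  # arbitrary fixed seed
sums = [rng.gammavariate(k1, theta) + rng.gammavariate(k2, theta) for _ in range(40_000)]
estimate = empirical_cf(sums, t)

print(product, combined, estimate)
```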

Entire characteristic functions

As defined above, the argument of the characteristic function is treated as a real number; however, certain aspects of the theory of characteristic functions are advanced by extending the definition into the complex plane by analytic continuation, in cases where this is possible.[14]

Related concepts

Related concepts include the moment-generating function and the probability-generating function. The characteristic function exists for all probability distributions; this is not the case for the moment-generating function.

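The characteristic function is closely related to the Fourier transform: the characteristic function of a probability density function p(x) is the complex conjugate of the continuous Fourier transform of p(x) (according to the usual convention; see continuous Fourier transform – other conventions).

$$\varphi_X(t) = \operatorname{E}\big[e^{itX}\big] = \int_{-\infty}^{\infty} e^{itx}p(x)\,dx = \overline{\left(\int_{-\infty}^{\infty} e^{-itx}p(x)\,dx\right)} = \overline{P(t)},$$

where P(t) denotes the continuous Fourier transform of the probability density function p(x). Likewise, p(x) may be recovered from φX(t) through the inverse Fourier transform:

$$p(x) = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{itx}P(t)\,dt = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{itx}\,\overline{\varphi_X(t)}\,dt.$$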

Indeed, even when the random variable does not have a density, the characteristic function may be seen as the Fourier transform of the measure corresponding to the random variable.

See also

- Subindependence, a weaker condition than independence, that is defined in terms of characteristic functions.

Notes

1. Lukacs (1970), p. 196
2. Billingsley (1995)
3. Pinsky (2002)
4. Bochner (1955)
5. Andersen et al. (1995, Definition 1.10)
6. Andersen et al. (1995, Definition 1.20)
7. Sobczyk (2001)
8. Lukacs (1970), Corollary 1 to Theorem 2.3.1
9. Cuppens (1975, Theorem 2.6.9)
10. Named after the French mathematician Paul Pierre Lévy
11. Wendel, J.G. (1961)
12. Shephard (1991a,b)
13. Lukacs (1970), p. 84
14. Lukacs (1970, Chapter 7)

References

- Andersen, H.H., M. Højbjerre, D. Sørensen, P.S. Eriksen (1995). Linear and graphical models for the multivariate complex normal distribution. Lecture notes in statistics 101. New York: Springer-Verlag. ISBN 0-387-94521-0.

- Billingsley, Patrick (1995). Probability and measure (3rd ed.). John Wiley & Sons. ISBN 0-471-00710-2.

- Bisgaard, T. M.; Z. Sasvári (2000). Characteristic functions and moment sequences. Nova Science.

- Bochner, Salomon (1955). Harmonic analysis and the theory of probability. University of California Press.

- Cuppens, R. (1975). Decomposition of multivariate probabilities. Academic Press.

- Heathcote, C.R. (1977). "The integrated squared error estimation of parameters". Biometrika 64 (2): 255–264. doi:10.1093/biomet/64.2.255.

- Lukacs, E. (1970). Characteristic functions. London: Griffin.

- Oberhettinger, Fritz (1973). Fourier Transforms of Distributions and their Inverses: A Collection of Tables. Academic Press.

- Paulson, A.S.; E.W. Holcomb, R.A. Leitch (1975). "The estimation of the parameters of the stable laws". Biometrika 62 (1): 163–170. doi:10.1093/biomet/62.1.163.

- Pinsky, Mark (2002). Introduction to Fourier analysis and wavelets. Brooks/Cole. ISBN 0-534-37660-6.

- Sobczyk, Kazimierz (2001). Stochastic differential equations. Kluwer Academic Publishers. ISBN 9781402003455.

- Wendel, J.G. (1961). "The non-absolute convergence of Gil-Pelaez' inversion integral". The Annals of Mathematical Statistics 32 (1): 338–339. doi:10.1214/aoms/1177705164.

- Yu, J. (2004). "Empirical characteristic function estimation and its applications". Econometric Reviews 23 (2): 93–123. doi:10.1081/ETC-120039605.

- Shephard, N. G. (1991a) From characteristic function to distribution function: A simple framework for the theory. Econometric Theory, 7, 519–529.

- Shephard, N. G. (1991b) Numerical integration rules for multivariate inversions. J. Statist. Comput. Simul., 39, 37–46.


Wikimedia Foundation. 2010.
