Deming regression

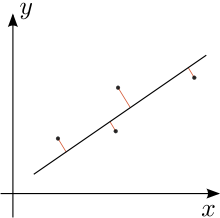

In statistics, Deming regression, named after W. Edwards Deming, is an errors-in-variables model that tries to find the line of best fit for a two-dimensional dataset. It differs from simple linear regression in that it accounts for errors in observations on both the x- and the y-axis.

Deming regression is equivalent to the maximum likelihood estimation of an errors-in-variables model in which the errors for the two variables are assumed to be independent and normally distributed, and the ratio of their variances, denoted δ, is known.[1] In practice, this ratio might be estimated from related data sources; however, the regression procedure takes no account of possible errors in estimating this ratio.

Deming regression is only slightly more difficult to compute than simple linear regression. Many software packages used in clinical chemistry, such as Analyse-it, EP Evaluator, MedCalc and S-PLUS, offer Deming regression.

The model was originally introduced by Adcock (1878), who considered the case δ = 1, and then more generally by Kummell (1879) with arbitrary δ. However, their ideas remained largely unnoticed for more than 50 years, until they were revived by Koopmans (1937) and later propagated further by Deming (1943). The latter book became so popular in clinical chemistry and related fields that the method came to be known as Deming regression in those fields.[2]


Specification

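Assume that the available data (yi, xi) are mismeasured observations of the “true” values (yi*, xi*):

$$
\begin{aligned}
y_i &= y_i^* + \varepsilon_i, \\
x_i &= x_i^* + \eta_i,
\end{aligned}
$$

where the errors ε and η are independent and the ratio of their variances is assumed to be known:

$$
\delta = \frac{\sigma_\varepsilon^2}{\sigma_\eta^2}.
$$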

In practice, the variances of the errors in x and y are often unknown, which complicates the estimation of δ. When the same measurement method is used for x and y, however, the two variances are likely to be equal, so that δ = 1.

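We seek to find the line of “best fit” y* = β0 + β1x*, such that the weighted sum of squared residuals of the model is minimized:[3]

$$
SSR = \sum_{i=1}^{n} \left( \frac{\varepsilon_i^2}{\sigma_\varepsilon^2} + \frac{\eta_i^2}{\sigma_\eta^2} \right)
    = \frac{1}{\sigma_\varepsilon^2} \sum_{i=1}^{n} \left[ (y_i - \beta_0 - \beta_1 x_i^*)^2 + \delta\,(x_i - x_i^*)^2 \right]
    \;\to\; \min_{\beta_0,\,\beta_1,\,x_1^*,\ldots,x_n^*}
$$

Here σε² and ση² denote the variances of the errors ε (in y) and η (in x), and δ is their ratio σε²/ση².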
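The criterion can be made concrete with a short sketch. For a fixed candidate line, the inner minimization over each x_i* has a closed form (set the derivative with respect to x_i* to zero), so the objective can be evaluated directly. The function name `deming_objective` is hypothetical, and the constant factor 1/σε² is dropped because it does not affect where the minimum lies.

```python
def deming_objective(x, y, beta0, beta1, delta=1.0):
    """Evaluate sum[(y_i - b0 - b1*x_i*)^2 + delta*(x_i - x_i*)^2]
    for a candidate line, with each x_i* chosen optimally.

    The common factor 1/sigma_eps^2 is omitted (an assumption of this
    sketch); it only rescales the objective.
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    total = 0.0
    for xi, yi in zip(x, y):
        r = yi - beta0 - beta1 * xi            # vertical residual at observed x_i
        # Optimal fitted "true" x-value for this point on the candidate line.
        x_star = xi + beta1 / (beta1 ** 2 + delta) * r
        total += (yi - beta0 - beta1 * x_star) ** 2 + delta * (xi - x_star) ** 2
    return total
```

With δ = 1 each term is the squared perpendicular distance from the point to the line, which is why that case is often called orthogonal regression.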

Solution

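The solution can be expressed in terms of the second-degree sample moments. That is, we first calculate the following quantities (all sums go from i = 1 to n):

$$
\begin{aligned}
\bar{x} &= \frac{1}{n} \sum x_i, &
\bar{y} &= \frac{1}{n} \sum y_i, \\
s_{xx} &= \frac{1}{n-1} \sum (x_i - \bar{x})^2, \\
s_{xy} &= \frac{1}{n-1} \sum (x_i - \bar{x})(y_i - \bar{y}), \\
s_{yy} &= \frac{1}{n-1} \sum (y_i - \bar{y})^2.
\end{aligned}
$$

Finally, the least-squares estimates of the model's parameters will be[4]

$$
\begin{aligned}
\hat\beta_1 &= \frac{s_{yy} - \delta s_{xx} + \sqrt{(s_{yy} - \delta s_{xx})^2 + 4 \delta s_{xy}^2}}{2 s_{xy}}, \\
\hat\beta_0 &= \bar{y} - \hat\beta_1 \bar{x}, \\
\hat{x}_i^* &= x_i + \frac{\hat\beta_1}{\hat\beta_1^2 + \delta} \left( y_i - \hat\beta_0 - \hat\beta_1 x_i \right).
\end{aligned}
$$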
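As an illustration, the closed-form estimates can be computed in a few lines of Python. This is a minimal sketch rather than a reference implementation: the function name `deming_fit` is hypothetical, δ is taken as the ratio of the y-error variance to the x-error variance, and the slope is computed as [s_yy − δ s_xx + √((s_yy − δ s_xx)² + 4 δ s_xy²)] / (2 s_xy), with the intercept following from the sample means.

```python
import math

def deming_fit(x, y, delta=1.0):
    """Return (beta0, beta1) for a Deming regression of y on x.

    delta is assumed to be var(error in y) / var(error in x).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have equal length of at least 2")
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Second-degree sample moments. The 1/(n-1) normalization cancels
    # in the slope formula, so 1/n would give the same estimates.
    s_xx = sum((xi - x_bar) ** 2 for xi in x) / (n - 1)
    s_yy = sum((yi - y_bar) ** 2 for yi in y) / (n - 1)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / (n - 1)
    if s_xy == 0:
        raise ValueError("sample covariance is zero; slope is undefined")
    d = s_yy - delta * s_xx
    beta1 = (d + math.sqrt(d * d + 4 * delta * s_xy ** 2)) / (2 * s_xy)
    beta0 = y_bar - beta1 * x_bar
    return beta0, beta1
```

A call such as `deming_fit(x, y, delta=1.0)` corresponds to the equal-variance case considered by Adcock; a very large `delta` (negligible error in x) brings the slope close to the ordinary least-squares value s_xy / s_xx.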

See also

- Errors-in-variables models

- Total least squares, a generalization that allows for any number of predictors and more complicated error structure

References

- ^ Linnet (1993)

- ^ Cornbleet & Gochman (1979)

- ^ Fuller (1987), ch. 1.3.3

- ^ Glaister (2001)

- Adcock, R. J. (1878). "A problem in least squares". The Analyst (Annals of Mathematics) 5 (2): 53–54. doi:10.2307/2635758. JSTOR 2635758.

- Cornbleet, P. J.; Gochman, N. (1979). "Incorrect Least-Squares Regression Coefficients". Clinical Chemistry 25 (3): 432–438. PMID 262186.

- Deming, W. E. (1943). Statistical adjustment of data. Wiley, NY (Dover Publications edition, 1985). ISBN 0-486-64685-8.

- Fuller, Wayne A. (1987). Measurement error models. John Wiley & Sons, Inc. ISBN 0-471-86187-1.

- Glaister, P. (March 2001). "Least squares revisited". The Mathematical Gazette 85: 104–107.

- Koopmans, T. C. (1937). Linear regression analysis of economic time series. De Erven F. Bohn, Haarlem, Netherlands.

- Kummell, C. H. (1879). "Reduction of observation equations which contain more than one observed quantity". The Analyst (Annals of Mathematics) 6 (4): 97–105. doi:10.2307/2635646. JSTOR 2635646.

- Linnet, K. (1993). "Evaluation of regression procedures for method comparison studies". Clinical Chemistry 39 (3): 424–432. PMID 8448852. http://www.clinchem.org/cgi/reprint/39/3/424.


Wikimedia Foundation. 2010.