Concolic testing

Concolic testing (a portmanteau of concrete and symbolic) is a hybrid software verification technique that interleaves concrete execution (testing on particular inputs) with symbolic execution, a classical technique that treats program variables as symbolic variables. Symbolic execution is used in conjunction with an automated theorem prover or constraint solver based on constraint logic programming to generate new concrete inputs (test cases) with the aim of maximizing code coverage. Its main focus is finding bugs in real-world software, rather than demonstrating program correctness.

The first tool of this type was PathCrawler, publicly demonstrated in September 2004,[1] with a more detailed description published in April 2005.[2] Another tool based on similar ideas, EXE (later improved and renamed KLEE), was developed independently by Professor Dawson Engler's group at Stanford University in 2005 and published in 2005 and 2006.[3] The concept was also described and discussed in the DART paper,[4] as implemented in the DART tool developed at Bell Labs (summer 2004), and the term concolic first appeared in "CUTE: a concolic unit testing engine for C".[5] These tools (PathCrawler, EXE, DART and CUTE) applied concolic testing to unit testing of C programs; concolic testing was originally conceived as a white-box improvement upon established random testing methodologies. The technique was later generalized to testing multithreaded Java programs with jCUTE,[6] and to unit testing programs from their executable code with the OSMOSE tool.[7] It was also combined with fuzz testing and extended to detect exploitable security issues in large-scale x86 binaries by Microsoft Research's SAGE.[8][9]

Example

Consider the following simple example, written in C:

    void f(int x, int y) {
        int z = 2*y;
        if (x == 100000) {
            if (x < z) {
                assert(0); /* error */
            }
        }
    }

Simple random testing, trying random values of x and y, would require an impractically large number of tests to reproduce the failure.
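To get a rough sense of scale, assume a 32-bit int with x drawn uniformly at random (an assumption made here for illustration; the C standard does not fix the width of int). Reaching the outer branch alone then requires hitting one specific value:

$$
\Pr[x = 100000] = 2^{-32}, \qquad \mathbb{E}[\text{trials}] = 2^{32} \approx 4.3 \times 10^{9}.
$$

The inner test x < 2y must hold as well, so the expected number of random trials needed to reach the error is at least this large.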

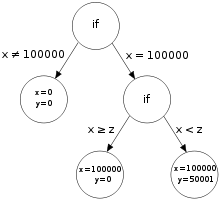

We begin with an arbitrary choice for x and y, for example x = y = 1. In the concrete execution, line 2 sets z to 2, and the test in line 3 fails since 1 ≠ 100000. Concurrently, the symbolic execution follows the same path but treats x and y as symbolic variables. It sets z to the expression 2y and notes that, because the test in line 3 failed, x ≠ 100000. This inequality is called a path condition and must be true for all executions following the same execution path as the current one.

Since we'd like the program to follow a different execution path on the next run, we take the last path condition encountered, x ≠ 100000, and negate it, giving x = 100000. An automated theorem prover is then invoked to find values for the input variables x and y given the complete set of symbolic variable values and path conditions constructed during symbolic execution. In this case, a valid response from the theorem prover might be x = 100000, y = 0.

Running the program on this input allows it to reach the inner branch on line 4, which is not taken since 100000 is not less than z = 0. The path conditions are x = 100000 and x ≥ z. The latter is negated, giving x < z. The theorem prover then looks for x, y satisfying x = 100000, x < z, and z = 2y; for example, x = 100000, y = 50001. This input reaches the error.
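The three runs described above can be replayed concretely. The following is a minimal sketch, assuming a hypothetical instrumented copy of f (here called f_path, a name not in the original) that reports which path was followed instead of asserting:

```c
#include <assert.h>

/* Which of the three execution paths of f a given input follows. */
enum path { OUTER_NOT_TAKEN, INNER_NOT_TAKEN, ERROR_REACHED };

/* Hypothetical instrumented copy of f: same branches, but it returns the
   path that was followed rather than failing an assertion. */
static enum path f_path(int x, int y) {
    int z = 2 * y;
    if (x == 100000) {
        if (x < z) {
            return ERROR_REACHED;
        }
        return INNER_NOT_TAKEN;
    }
    return OUTER_NOT_TAKEN;
}

/* Replays the inputs from the walkthrough and checks each path. */
static void check_walkthrough(void) {
    assert(f_path(1, 1) == OUTER_NOT_TAKEN);          /* x != 100000        */
    assert(f_path(100000, 0) == INNER_NOT_TAKEN);     /* x == 100000, x >= z */
    assert(f_path(100000, 50001) == ERROR_REACHED);   /* x == 100000, x < z  */
}
```

Each input differs from the previous one only in the outcome of the last branch condition that was negated.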

Algorithm

Essentially, a concolic testing algorithm operates as follows:

1. Classify a particular set of variables as input variables. These variables will be treated as symbolic variables during symbolic execution. All other variables will be treated as concrete values.

2. Instrument the program so that each operation which may affect a symbolic variable value or a path condition is logged to a trace file, as well as any error that occurs.

3. Choose an arbitrary input to begin with.

4. Execute the program.

5. Symbolically re-execute the program on the trace, generating a set of symbolic constraints (including path conditions).

6. Negate the last path condition not already negated in order to visit a new execution path. If there is no such path condition, the algorithm terminates.

7. Invoke an automated theorem prover to generate a new input. If there is no input satisfying the constraints, return to step 6 to try the next execution path.

8. Return to step 4.
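The loop above can be sketched for the example function f. This is an illustration under strong simplifying assumptions, not a general implementation: the instrumentation is written by hand, the trace is kept in memory rather than in a file, symbolic re-execution is folded into the log because each entry already names its condition, and toy_solve is a hypothetical stand-in for a theorem prover that understands only the two conditions occurring in f. All names are invented for this sketch.

```c
#include <assert.h>
#include <stdbool.h>

enum cond { X_EQ_100000, X_LT_2Y };          /* the two branch conditions in f */
struct branch { enum cond c; bool taken; };  /* one logged path condition */

#define MAX_DEPTH 8
struct trace { struct branch b[MAX_DEPTH]; int len; bool error; };

/* Instrumented copy of f: logs every branch outcome and records the
   error instead of aborting. */
static void run_f(int x, int y, struct trace *t) {
    int z = 2 * y;
    t->len = 0;
    t->error = false;
    bool outer = (x == 100000);
    t->b[t->len++] = (struct branch){ X_EQ_100000, outer };
    if (outer) {
        bool inner = (x < z);
        t->b[t->len++] = (struct branch){ X_LT_2Y, inner };
        if (inner) t->error = true;
    }
    assert(t->len <= MAX_DEPTH);
}

/* Toy stand-in for a theorem prover: finds x, y matching the first n
   requested outcomes, or returns false if they contradict each other. */
static bool toy_solve(const struct branch *pc, int n, int *x, int *y) {
    int want[2] = { -1, -1 };                /* -1 means unconstrained */
    for (int i = 0; i < n; i++) {
        if (want[pc[i].c] != -1 && want[pc[i].c] != (int)pc[i].taken) return false;
        want[pc[i].c] = pc[i].taken;
    }
    *x = (want[X_EQ_100000] == 1) ? 100000 : 1;
    *y = (want[X_LT_2Y] == 1) ? *x / 2 + 1 : 0;
    return true;
}

/* Depth-first search over paths. Returns the number of runs needed to reach
   the error (leaving the failing input in *x, *y), or -1 if every path was
   explored without error. */
static int concolic_search(int *x, int *y) {
    struct trace t;
    bool negated[MAX_DEPTH] = { false };
    int forced = 0;                          /* prefix fixed by the last solve */
    for (int runs = 1; ; runs++) {
        run_f(*x, *y, &t);                   /* execute and collect the trace */
        if (t.error) return runs;
        for (int i = forced; i < t.len; i++) negated[i] = false;  /* new branches */
        int j = t.len - 1;
        for (;;) {
            while (j >= 0 && negated[j]) j--;     /* last condition not yet negated */
            if (j < 0) return -1;                 /* none left: terminate */
            t.b[j].taken = !t.b[j].taken;         /* negate it */
            negated[j] = true;
            if (toy_solve(t.b, j + 1, x, y)) break;   /* new input found */
            j--;                                  /* unsatisfiable: try an earlier one */
        }
        forced = j + 1;
    }
}
```

Started from x = y = 1, this search performs the same three runs as the example: it first solves for x = 100000, y = 0, then for x = 100000, y = 50001, which reaches the error.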

There are a few complications to the above procedure:

- The algorithm performs a depth-first search over an implicit tree of possible execution paths. In practice programs may have very large or infinite path trees — a common example is testing data structures that have an unbounded size or length. To prevent spending too much time on one small area of the program, the search may be depth-limited (bounded).

- Symbolic execution and automated theorem provers have limitations on the classes of constraints they can represent and solve. For example, a theorem prover based on linear arithmetic will be unable to cope with the nonlinear path condition xy = 6. Any time that such constraints arise, the symbolic execution may substitute the current concrete value of one of the variables to simplify the problem. An important part of the design of a concolic testing system is selecting a symbolic representation precise enough to represent the constraints of interest.
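The concrete-value substitution mentioned above can be illustrated for a condition of the form xy = target. In this minimal sketch (the function name and signature are assumptions made for illustration), x is replaced by its current concrete value x0, which leaves the linear condition x0·y = target to solve over the integers:

```c
#include <assert.h>
#include <limits.h>
#include <stdbool.h>
#include <stddef.h>

/* Tries to satisfy x * y == target after fixing x to its concrete value x0.
   Returns true and stores a suitable y in *y_out, or returns false if no
   integer y exists for this particular choice of x0. */
static bool solve_with_x_concretized(int x0, int target, int *y_out) {
    assert(y_out != NULL);
    if (x0 == 0) {
        if (target != 0) return false;   /* 0 * y can never equal a nonzero target */
        *y_out = 0;                      /* any y works; pick 0 */
        return true;
    }
    if (x0 == -1 && target == INT_MIN) return false;  /* quotient would overflow */
    if (target % x0 != 0) return false;  /* x0 does not divide target */
    *y_out = target / x0;
    return true;
}
```

With x0 = 2 and target 6 the simplified condition yields y = 3, whereas with x0 = 4 it has no integer solution even though xy = 6 is satisfiable (for example x = 2, y = 3). Substituting concrete values trades completeness for tractability.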

Limitations

Concolic testing has a number of limitations:

- If the program exhibits nondeterministic behavior, it may follow a different path than the intended one. This can lead to nontermination of the search and poor coverage.

- Even in a deterministic program, a number of factors may lead to poor coverage, including imprecise symbolic representations, incomplete theorem proving, and failure to search the most fruitful portion of a large or infinite path tree.

- Programs which thoroughly mix the state of their variables, such as cryptographic primitives, generate very large symbolic representations that cannot be solved in practice. For example, the condition "if (md5_hash(input) == 0xdeadbeef)" requires the theorem prover to invert MD5, which is an open problem.
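The shape of such a guarded branch can be sketched as follows. To keep the example self-contained, the 32-bit FNV-1a hash is used as a small stand-in for MD5; this substitution is an assumption made for illustration, and FNV-1a is not a cryptographic hash. Even so, the symbolic expression for the hash is a chain of one XOR and one multiplication per input byte, which hints at how quickly the constraints grow for a real primitive.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* 32-bit FNV-1a over a NUL-terminated string (stand-in for md5_hash). */
static uint32_t fnv1a(const char *s) {
    assert(s != NULL);
    uint32_t h = 2166136261u;            /* FNV offset basis */
    for (; *s != '\0'; s++) {
        h ^= (unsigned char)*s;          /* mix in one input byte */
        h *= 16777619u;                  /* multiply by the FNV prime */
    }
    return h;
}

/* The branch a concolic tester would need to flip: finding an input that
   makes this return true means inverting the hash for one target value. */
static bool guarded(const char *input) {
    return fnv1a(input) == 0xdeadbeefu;
}
```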

Tools

- pathcrawler-online.com is a restricted version of the current PathCrawler tool which is publicly available as an online test-case server for evaluation and education purposes.

- CUTE and jCUTE, for C and Java respectively, are available as binaries under a research-use-only license from the University of Illinois at Urbana-Champaign.

- CREST is an open-source solution for C comparable to CUTE (modified BSD license).

- Microsoft Pex, developed by the RiSE group at Microsoft Research, is publicly available as a Microsoft Visual Studio 2010 Power Tool for the .NET Framework.

Many tools, notably DART and SAGE, have not been made available to the public at large. SAGE, however, is reported to be "used daily" for internal security testing at Microsoft.[10]

References

1. Williams, Nicky; Bruno Marre, Patricia Mouy (2004). "On-the-Fly Generation of K-Path Tests for C Functions". Proceedings of the 19th IEEE International Conference on Automated Software Engineering (ASE 2004), 20-25 September 2004, Linz, Austria. IEEE Computer Society. pp. 290–293. ISBN 0-7695-2131-2.

2. Williams, Nicky; Bruno Marre, Patricia Mouy, Muriel Roger (2005). "PathCrawler: Automatic Generation of Path Tests by Combining Static and Dynamic Analysis". Dependable Computing - EDCC-5, 5th European Dependable Computing Conference, Budapest, Hungary, April 20-22, 2005, Proceedings. Springer. pp. 281–292. ISBN 3-540-25723-3.

3. Cadar, Cristian; Vijay Ganesh, Peter Pawlowski, David L. Dill, Dawson Engler (2006). "EXE: Automatically Generating Inputs of Death". Proceedings of the 13th International Conference on Computer and Communications Security (CCS 2006). Alexandria, VA, USA: ACM. http://www.stanford.edu/~engler/exe-ccs-06.pdf.

4. Godefroid, Patrice; Nils Klarlund, Koushik Sen (2005). "DART: Directed Automated Random Testing". Proceedings of the 2005 ACM SIGPLAN conference on Programming language design and implementation. New York, NY: ACM. pp. 213–223. ISSN 0362-1340. http://cm.bell-labs.com/who/god/public_psfiles/pldi2005.pdf. Retrieved 2009-11-09.

5. Sen, Koushik; Darko Marinov, Gul Agha (2005). "CUTE: a concolic unit testing engine for C". Proceedings of the 10th European software engineering conference held jointly with 13th ACM SIGSOFT international symposium on Foundations of software engineering. New York, NY: ACM. pp. 263–272. ISBN 1-59593-014-0. http://srl.cs.berkeley.edu/~ksen/papers/C159-sen.pdf. Retrieved 2009-11-09.

6. Sen, Koushik; Gul Agha (August 2006). "CUTE and jCUTE: Concolic Unit Testing and Explicit Path Model-Checking Tools". Computer Aided Verification: 18th International Conference, CAV 2006, Seattle, WA, USA, August 17-20, 2006, Proceedings. Springer. pp. 419–423. ISBN 978-3-540-37406-0. http://srl.cs.berkeley.edu/~ksen/pubs/paper/cuteTool.ps. Retrieved 2009-11-09.

7. Bardin, Sébastien; Philippe Herrmann (April 2008). "Structural Testing of Executables". Proceedings of the 1st IEEE International Conference on Software Testing, Verification, and Validation (ICST 2008), Lillehammer, Norway. IEEE Computer Society. pp. 22–31. ISBN 978-0-7695-3127-4. http://sebastien.bardin.free.fr/icst08.pdf.

8. Godefroid, Patrice; Michael Y. Levin, David Molnar (2007). Automated Whitebox Fuzz Testing (Technical report). Microsoft Research. TR-2007-58. ftp://ftp.research.microsoft.com/pub/tr/TR-2007-58.pdf.

9. Godefroid, Patrice (2007). "Random testing for security: blackbox vs. whitebox fuzzing". Proceedings of the 2nd international workshop on Random testing: co-located with the 22nd IEEE/ACM International Conference on Automated Software Engineering (ASE 2007). New York, NY: ACM. p. 1. ISBN 978-1-59593-881-7. http://research.microsoft.com/en-us/um/people/pg/public_psfiles/abstract-rt2007.pdf. Retrieved 2009-11-09.

10. SAGE team (2009). "Microsoft PowerPoint - SAGE-in-one-slide". Microsoft Research. http://research.microsoft.com/en-us/um/people/pg/public_psfiles/sage-in-one-slide.pdf. Retrieved 2009-11-10.

Wikimedia Foundation. 2010.