- Cognitive infocommunications

Cognitive infocommunications (CogInfoCom) investigates the link between the research areas of infocommunications and cognitive sciences, as well as the various engineering applications which have emerged as the synergic combination of these sciences.

The primary goal of CogInfoCom is to provide a systematic view of how cognitive processes can co-evolve with infocommunications devices so that the capabilities of the human brain may not only be extended through these devices, irrespective of geographical distance, but may also interact with the capabilities of any artificially cognitive system. This merging and extension of cognitive capabilities is targeted towards engineering applications in which artificial and/or natural cognitive systems are enabled to work together more effectively.

We define two important dimensions of cognitive infocommunications: the mode of communication and the type of communication. The mode of communication refers to the actors at the two endpoints of communication:

- Intracognitive communication: The mode of communication is intra-cognitive when information transfer occurs between two cognitive beings with equivalent cognitive capabilities (e.g.: between two humans).

- Intercognitive communication: The mode of communication is inter-cognitive when information transfer occurs between two cognitive beings with different cognitive capabilities (e.g.: between a human and an artificially cognitive system).

The type of communication refers to the type of information that is conveyed between the two communicating entities, and the way in which it is carried out:

- Sensor-sharing communication: entities on both ends use the same sensory modality to perceive the communicated information.

- Sensor-bridging communication: sensory information obtained or experienced by each of the entities is not only transmitted, but also transformed to an appropriate and different sensory modality.

- Representation-sharing communication: sensory information obtained or experienced by each of the entities is merely transferred, hence, the same information representation is used on both ends.

- Representation-bridging communication: sensory information transferred to the receiver entity is filtered and/or adapted so that a different information representation is used on the two ends.
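The two dimensions above can be read as a simple classification rule over the two endpoints of a communication link. The following is a minimal sketch under stated assumptions, not a definition taken from the CogInfoCom literature: the `Endpoint` record, its field names and the `classify` function are hypothetical, and "equivalent cognitive capabilities" is crudely approximated by comparing a class label.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    """Hypothetical description of one end of a communication link."""
    name: str
    cognitive_class: str   # assumed label, e.g. "human" or "robot"
    modality: str          # sensory modality at this end, e.g. "auditory"
    representation: str    # assumed label for the information representation


def classify(sender: Endpoint, receiver: Endpoint) -> tuple:
    """Return (mode, sensor type, representation type) for a link.

    Simplification: two endpoints count as cognitively equivalent
    when their class labels are equal.
    """
    mode = ("intra-cognitive"
            if sender.cognitive_class == receiver.cognitive_class
            else "inter-cognitive")
    sensor = ("sensor-sharing"
              if sender.modality == receiver.modality
              else "sensor-bridging")
    representation = ("representation-sharing"
                      if sender.representation == receiver.representation
                      else "representation-bridging")
    return (mode, sensor, representation)


# Two humans on a voice call: same class, same modality, same representation.
caller = Endpoint("caller", "human", "auditory", "speech")
callee = Endpoint("callee", "human", "auditory", "speech")

# A robot's tactile percepts rendered as abstract sounds for a human operator.
robot = Endpoint("robot", "robot", "tactile", "surface profile")
operator = Endpoint("operator", "human", "auditory", "abstract sound")

print(classify(caller, callee))
print(classify(robot, operator))
```

In this sketch the sensor type and the representation type are decided independently, which mirrors the remark that a sensor-sharing link may be either representation-sharing or representation-bridging.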

Remarks

- Many of the basic ideas behind CogInfoCom are not new, in the sense that different aspects of many key points have appeared, and are being actively researched, in several existing areas of IT (e.g. affective computing, human–computer interaction, human–robot interaction, sensory substitution, sensorimotor extension, iSpace research, interactive systems engineering, ergonomics).

- CogInfoCom should not be confused with computational neuroscience or computational cognitive modeling, which can mainly be considered as a very important set of modeling tools for cognitive sciences (thus indirectly for CogInfoCom), but have no intention of directly serving engineering systems.

- A sensor-sharing application of CogInfoCom is novel in the sense that it extends traditional infocommunications by conveying any kind of signal normally perceptible through the actor’s senses to the other end of the communication line. The transferred information may describe not only the actor involved in the communication, but also the environment in which the actor is located. The key determinant of sensor-sharing communication is that the same sensory modality is used to perceive the sensory information on both ends of the infocommunications line.

- Sensor bridging can be taken to mean not only the way in which the information is conveyed (i.e., by changing sensory modality), but also the kind of information that is conveyed. Whenever the transferred information type is imperceptible to the receiving end (e.g., because its cognitive system is incompatible with the information type) the communication of information will necessarily occur through sensor bridging.

- A sensor-sharing type of communication can be either representation-sharing or representation-bridging. In the case of sensor-bridging communication, however, the question may arise whether it is possible for the representation of information to be the same across different modalities. We remark that future research may provide a rationale to equate representations even across modalities.

The first draft definition of CogInfoCom was given in [1]. The definition above was finalized on the basis of that paper, with the joint participation of the Startup Committee and the participants of the 1st International Workshop on Cognitive Infocommunications, held in Tokyo, Japan in 2010.

Research Background of Cognitive Infocommunications

The term cognitive infocommunication was used in several papers before the definition was created (e.g., [2], [3], [4]). The definition was created based on the research described in these papers, and was also motivated by work in Intelligent Space [18], [24]. The 1st International Workshop on Cognitive Infocommunications was held in Tokyo, Japan in 2010.

The idea that the information systems we use need to be accommodated with artificially cognitive functionalities has culminated in the creation of cognitive informatics [5], [6]. With the strong support of cognitive science, results in cognitive informatics are contributing to the creation of more and more sophisticated artificially cognitive engineering systems. Given this trend, it is rapidly becoming clear that the amount of cognitive content handled by our engineering systems is reaching a point where the communication forms necessary to enable interaction with this content are becoming more and more complex [7], [8], [9], [10], [11].

The inspiration to create engineering systems capable of communicating with users in natural ways is not new. It is one of the primary goals of affective computing to endow information systems with emotion, and to enable them to communicate these emotions in ways that resonate with the human users [12], [13]. Augmented cognition deals with modeling human attention and using results to create engineering systems which are capable of adapting based on human cognitive skills and momentary attention [14]. In addition, there are a host of research fields which concentrate less on modeling (human) psychological emotion, but aim to allow users to have a more tractable and pleasurable interaction with machines (e.g., human computer interaction, human robot interaction, interactive systems engineering) [15], [16]. Further fields specialize in the communication of hidden parameters to remote environments (e.g., sensory substitution and sensorimotor extension in engineering applications, iSpace research, multimodal interaction) [17], [18], [19], [20], [21], [22], [23].

In recent years, several applications have appeared in the technical literature which combine various aspects of the previously mentioned fields, but also extend them in significant ways (e.g., [24], [25], [26], [27], [28], [29], [30], [23], [31], [32], [18], [33]). However, in these works, the fact that a new research field is emerging is only implicitly mentioned.

Discussion

The research areas treated within CogInfoCom can be discussed from three different points of view: the historical view, the virtual reality view and the cognitive informatics view.

Historical view

Traditionally, the research fields of informatics, media, and communications were very different areas, treated by researchers from significantly different backgrounds. As a synthesis between pairs of these 3 disciplines, the fields of infocommunications, media informatics and media communications emerged in the latter half of the 20th century. The past evolution of these disciplines points towards their convergence in the near future, given that modern network services aim to provide a more holistic user experience, which presupposes achievements from these different fields [34], [35]. In place of these research areas, with the enormous growth in scope and generality of cognitive sciences in the past few decades, the new fields of cognitive media [36], [37], cognitive informatics and cognitive communications [38],[39] are gradually emerging. In a way analogous to the evolution of infocommunications, media informatics and media communications, we are seeing more and more examples of research achievements which can be categorized as results in cognitive infocommunications, cognitive media informatics and cognitive media communications, even if – as of yet – these fields are not always clearly defined [40], [41], [24], [25], [29], [30], [42], [18], [43], [36].

The primary goal of CogInfoCom is to use information theoretical methods to synthesize research results in some of these areas, while aiming primarily to make use of these synthesized results in the design of engineering systems. It is novel in the sense that it views both the medium used for communication and the media which is communicated as entities which are interpreted by a cognitive system.

CogInfoCom from a Virtual Reality Perspective

In everyday human-machine interaction, both the human and the machine are located in the same physical space and thus the human can rely on his / her natural cognitive system to communicate with the machine. In contrast, when 3D virtualization comes into play, the human is no longer in direct connection with the machine; instead, he / she must communicate with its virtual representation. Thus, the problem of human-machine interaction is transformed into a problem of human-virtual machine interaction. In such scenarios, the natural communication capabilities of the human become limited due to the restricted interface provided by the virtual representation of the machine (for instance, while the senses of vision and audition still receive considerable amount of information, the tactile and olfactory senses are almost completely restricted in virtual environments, i.e. it is usually not possible to touch or smell the virtual representation of a machine). For this reason, it becomes necessary to develop a virtual cognitive system which can extend the natural one so as to allow the human to effectively communicate with the virtual representation, which essentially becomes an infocommunication system in this extended scenario. One of the primary goals of cognitive infocommunications is to address this problem so that sensory modalities other than the ones normally used in virtual reality research can be employed for the perception of a large variety of information on the virtualized system.

Cognitive Informatics View

Cognitive informatics (CI) is a research field which was created in the early 21st century, and which pioneered the adoption of research results in cognitive sciences within information technology [5], [6]. The main purpose of CI is to investigate the internal information storing and processing mechanisms in natural intelligent systems such as the human brain. Much like CogInfoCom, CI also aims primarily to create numerically tractable models which are well grounded from an information theoretical point of view, and are amenable to engineering systems. The key difference between CI and CogInfoCom is that while the results of CI largely converge towards and support the creation of artificial cognitive systems, the goal of CogInfoCom is to enable these systems to communicate with each other and their users efficiently.

Thus, CogInfoCom builds on a large part of results in CI, since it deals with the communication space between the human cognitive system and other natural or artificial cognitive systems.

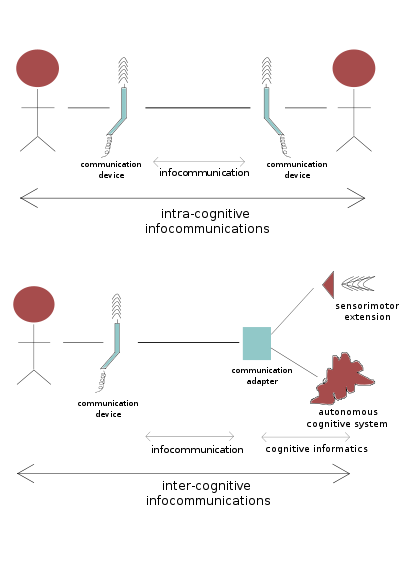

Cognitive informatics view of CogInfoCom. The figure on the top shows a case of intra-cognitive infocommunication, and demonstrates that while traditional telecommunications deals with the distance-bridging transfer of raw data (not interpreted by any cognitive system), cognitive infocommunications deals with the endpoint-to-endpoint communication of information. The figure on the bottom shows a case of inter-cognitive infocommunication, when two cognitive systems with different cognitive capabilities are communicating with each other. In this case, autonomous cognitive systems as well as remote sensors (sensorimotor extensions, as described in the definition of the sensor-bridging communication type) require the use of a communication adapter, while biological cognitive systems use traditional telecommunications devices.

Examples

The examples are grouped into four categories, one for each combination of the two modes of communication (intra-cognitive and inter-cognitive) and the two types of communication that pertain to the sensory modalities used (sensor-sharing and sensor-bridging).

Intra-cognitive sensor-sharing communication

An example of intra-cognitive sensor-sharing communication is when two humans communicate through Skype or some other telecommunication system, and a large variety of information types (e.g. metalinguistic information and background noises through sound, gesture-based metacommunication through video, etc.) are communicated to both ends of the line. In more futuristic applications, information from other sensory modalities (e.g. smells through the use of electronic noses and scent generators, tastes using equipment not yet available today) may also be communicated. Because the communicating actors are both human, the communication mode is intra-cognitive, and because the communicated information is shared using the same cognitive subsystems (i.e., the same sensory modalities) on both ends of the line, the type of communication is sensor-sharing. The communication of such information is significant not only because the users can feel physically closer to each other, but also because the sensory information obtained at each end of the line (i.e., information which describes not the actor, but the environment of the actor) is shared with the other end (in such cases, the communication is intra-cognitive, despite the fact that the transferred information describes the environment of the actor, because the environment is treated as a factor which has an implicit, but direct effect on the actor).

Intra-cognitive sensor-bridging communication

An example of intra-cognitive sensor-bridging communication is when two humans communicate through Skype or some other telecommunication system, and each actor’s pulse is transferred to the other actor using a visual representation consisting of a blinking red dot, or the breath rate of each actor is transferred to the other actor using a visual representation which consists of a discoloration of the screen. The frequency of the discoloration could symbolize the rate of breathing, and the extent of discoloration might symbolize the amount of air inhaled each time. (Similar ideas are investigated in, e.g., [31], [23].) Because the communicating actors are both human, the communication mode is intra-cognitive. Because the sensory modality used to perceive the information (the visual system) is different from the modality normally used to perceive it (it is questionable whether such a modality even exists, because people don’t usually feel the pulse or breath rate of other people during normal conversation), the communication is sensor-bridging. The communication of such parameters is significant in that they help further describe the psychological state of the actors. Because such parameters are directly imperceptible even in face-to-face communication, the only possibility is to convey them through sensor bridging. In general, the transferred information is considered cognitive because the psychological state of the actors does not depend on this information in a definitive way, but when interpreted by a cognitive system such as a human actor, the information and its context together can help create a deeper understanding of the psychological state of the remote user.
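The mapping from physiological parameters to visual cues described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function names, the one-blink-per-heartbeat rule and the 3-litre reference depth used to scale the tint are hypothetical choices, not values taken from the cited works.

```python
def blink_period_seconds(pulse_bpm: float) -> float:
    """Time between blinks of the red dot, assuming one blink per heartbeat."""
    if pulse_bpm <= 0:
        raise ValueError("pulse must be positive")
    return 60.0 / pulse_bpm


def tint_period_seconds(breaths_per_minute: float) -> float:
    """Time between screen discolorations, assuming one per breath."""
    if breaths_per_minute <= 0:
        raise ValueError("breath rate must be positive")
    return 60.0 / breaths_per_minute


def tint_strength(inhaled_litres: float, reference_litres: float = 3.0) -> float:
    """Extent of discoloration in [0, 1], scaled by an assumed reference depth."""
    if reference_litres <= 0:
        raise ValueError("reference depth must be positive")
    # Clamp so that unusually deep or negative readings stay displayable.
    return max(0.0, min(1.0, inhaled_litres / reference_litres))


# A remote actor with a pulse of 120 bpm, breathing 15 times per minute,
# inhaling 1.5 litres each time.
print(blink_period_seconds(120.0))
print(tint_period_seconds(15.0))
print(tint_strength(1.5))
```

The receiving side never perceives the pulse or the breath directly; it only sees the rate and intensity of the visual cue, which is what makes the link sensor-bridging.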

Inter-cognitive sensor-sharing communication

An example of inter-cognitive sensor-sharing communication might include the transfer of the operating sound of a robot actor, as well as a variety of background scents (using electronic noses and scent generators) to a human actor controlling the robot from a remote teleoperation room. The operating sound of a robot actor can help the teleoperator gain a good sense of the amount of load the robot is dealing with, how much resistance it is encountering during its operation, etc. Further, the ability to perceive smells from the robot’s surroundings can augment the teleoperator’s perception of possible hazards in the robot’s environment. A further example of inter-cognitive sensor sharing would be the transfer of direct force feedback through e.g. a joystick. The communication in these examples is inter-cognitive because the robot’s cognitive system is significantly different from the human teleoperator’s cognitive system. Because the transferred information is conveyed directly to the same sensory modality, the communication is also sensor-sharing. Similar to the case of intracognitive sensor-sharing, the transfer of such information is significant because it helps further describe the environment in which the remote cognitive system is operating, which has an implicit effect on the remote cognitive system.

Inter-cognitive sensor-bridging communication

As the information systems, artificial cognitive systems and the virtual manifestations of these systems (which are gaining wide acceptance in today’s engineering systems, e.g. as in iSpace [18]) are becoming more and more sophisticated, the operation of these systems and the way in which they organize complex information are, by their nature, essentially inaccessible in many cases to the human perceptual system and the information representation it uses. For this reason, intercognitive sensor bridging is perhaps the most complex area of CogInfoCom, because it relies on a sophisticated combination of a number of fields from information engineering and infocommunications to the various cognitive sciences.

A rudimentary example of inter-cognitive sensor-bridging communication that is already in wide use today is the collision-detection system available in many cars which plays a frequency modulated signal, the frequency of which depends on the distance between the car and the (otherwise completely visible) car behind it. In this case, auditory signals are used to convey spatial (visual) information. A further example could be the use of the vibrotactile system to provide force feedback through axial vibrations (this is a commonly adopted approach in various applications, from remote vehicle guidance to telesurgery, e.g. [44], [45], [46], [47], [48]). Force feedback through axial vibration is also very widespread in gaming, because with practice, the players can easily adapt to the signals and will really interpret them as if they corresponded to a real collision with an object or someone else’s body [49], [50]. It is important to note, however, that the use of vibrations is no longer limited to the transfer of information on collisions or other simple events, but is also used to communicate more complex information, such as warning signals to alert the user’s attention to events whose occurrence is deduced from a combination of events with a more complex structure (e.g., vibrations of smart phones to alert the user of suspicious account activity, etc.). Such event-detection systems can be powerful when combined with iSpace [28].
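The collision-detection example amounts to a mapping from a spatial quantity (distance) to an auditory one (tone frequency). The sketch below assumes a linear mapping; the function name, the 2 m sensing range and the 400 Hz to 2000 Hz frequency band are hypothetical parameters chosen for illustration and do not describe any particular vehicle system.

```python
def tone_frequency_hz(distance_m: float,
                      max_range_m: float = 2.0,
                      f_far_hz: float = 400.0,
                      f_near_hz: float = 2000.0) -> float:
    """Map the distance to an obstacle onto a warning-tone frequency.

    Assumed behaviour: the tone rises linearly from f_far_hz at the edge
    of the sensing range to f_near_hz at contact.
    """
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if max_range_m <= 0:
        raise ValueError("sensing range must be positive")
    # Obstacles beyond the sensing range are treated as being at its edge.
    closeness = 1.0 - min(distance_m, max_range_m) / max_range_m
    return f_far_hz + closeness * (f_near_hz - f_far_hz)


for d in (2.0, 1.0, 0.0):
    print(d, tone_frequency_hz(d))
```

A logarithmic mapping, or a change in beep rate rather than pitch, would serve the same purpose; the essential point is that the driver receives spatial information through the auditory channel.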

Finally, more complex examples of sensor bridging in inter-cognitive communication might include the use of electrotactile arrays placed on the tongue to convey visual information received from a camera placed on the forehead (as in [21]), or the transfer of a robot actor’s tactile percepts (as detected by e.g. a laser profilometer) using abstract sounds on the other end of the communication line. In [51], relatively short audio signals (i.e., 2–3 seconds long) are used to convey abstract tactile dimensions such as the softness, roughness, stickiness and temperature of surfaces. The necessity of haptic feedback in virtual environments should not be underestimated [52].

The type of information conveyed through sensor bridging, the extent to which this information is abstract and the sensory modality to which it is conveyed are open research questions. As researchers obtain closer estimates of the number of dimensions each sensory modality is sensitive to, and of the resolution and speed of each modality’s information processing capabilities, research and development in sensory substitution is expected to bring substantial improvements to today’s engineering systems.

Complex example

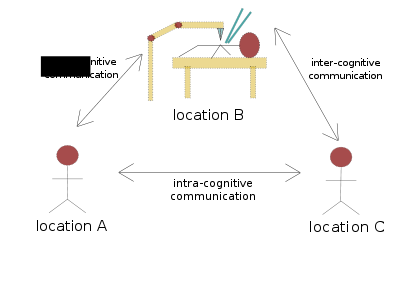

Let us consider a scenario where a telesurgeon in location A is communicating with a telesurgical robot in remote location B, and another telesurgeon in remote location C. At the same time, let us imagine that the other telesurgeon (in location C) is communicating with a different telesurgical robot, also in remote location B (in much the same way as a surgical assistant would perform a different task on the same patient), and the first telesurgeon in location A. In this case, both teleoperators are involved in one channel of inter-cognitive and one channel of intra-cognitive communication. Within these two modes, examples of sensor sharing and sensor bridging might occur at the same time. Each telesurgeon may see a camera view of the robot they are controlling, feel the limits of their motions through direct force feedback, and hear the soft, but whining sound of the operating robot through direct sound transfer. These are all examples of sensor-sharing intercognitive communication. The transmission of the operated patient’s blood pressure and heart rate are also examples of sensor-sharing inter-cognitive communication (they are intercognitive, because the transmission of information is effected through the communication links with the robot, and they are sensor-sharing, because they are presented in the same graphical form in which blood pressure and heart rhythm information are normally displayed). At the same time, information from various sensors on the telesurgical robot might be transmitted to a different sensory modality of the teleoperator (e.g., information from moisture sensors using pressure applied to the arm, etc.), which would serve to augment the telesurgeon’s cognitive awareness of the remote environment, and can be considered as sensor-bridging communication resulting in an augmented form of telepresence. 
Through the intra-cognitive mode of communication, the two teleoperators can obtain information on each other’s psychological state and environment. Here we can also imagine both distance-bridging and sensor-bridging types of communication, all of which can directly or indirectly help draw each telesurgeon’s attention to possible problems or abnormalities the other telesurgeon is experiencing.

References

[1] P. Baranyi and A. Csapo, “Cognitive Infocommunications: CogInfoCom”, 11th IEEE International Symposium on Computational Intelligence and Informatics, Budapest, Hungary, 2010.

[2] P. Baranyi, B. Solvang, H. Hashimoto, and P. Korondi, “3d internet for cognitive info–communication” in 10th International Symposium of Hungarian Researchers on Computational Intelligence and Informatics (CINTI ’09, Budapest), 2009, pp. 229–243.

[3] G. Soros, B. Resko, B. Solvang, P. Baranyi, “A cognitive robot supervision system”, 7th International Symposium on Applied Machine Intelligence and Informatics, pp. 51–55, Herlany, Slovakia, 2009.

[4] A. Vamos, I. Fulop, B. Resko, P. Baranyi, “Collaboration in virtual reality of intelligent agents”, Acta Electrotechnica et Informatica, Vol. 10, No. 2, pp. 21–27, 2010.

[5] Y. Wang and W. Kinsner, “Recent advances in cognitive informatics,” IEEE Transactions on Systems, Man and Cybernetics, vol. 36, no. 2, pp. 121–123, 2006.

[6] Y. Wang, “On cognitive informatics (keynote speech),” in 1st IEEE International Conference on Cognitive Informatics, 2002, pp. 34–42.

[7] F. Davide, M. Lunghi, G. Riva, and F. Vatalaro, “Communications through virtual technologies,” in IDENTITY, COMMUNITY AND TECHNOLOGY IN THE COMMUNICATION AGE, IOS PRESS. Springer-Verlag, 2001, pp. 124–154.

[8] W. IJsselsteijn and G. Riva, “Being there: The experience of presence in mediated environments,” 2003.

[9] S. Benford, C. Greenhalgh, G. Reynard, C. Brown, and B. Koleva, “Understanding and constructing shared spaces with mixed reality boundaries,” 1998.

[10] B. Shneiderman, “Direct manipulation for comprehensible, predictable and controllable user interfaces,” in Proceedings of IUI97, 1997 International Conference on Intelligent User Interfaces. ACM Press, 1997, pp. 33–39.

[11] D. Kieras and P. G. Polson, “An approach to the formal analysis of user complexity,” International Journal of Man-Machine Studies, vol. 22, no. 4, pp. 365–394, 1985.

[12] R. Picard, Affective Computing. The MIT Press, 1997.

[13] J. Tao and T. Tan, “Affective computing: A review,” in Affective Computing and Intelligent Interaction, ser. Lecture Notes in Computer Science, J. Tao, T. Tan, and R. Picard, Eds. Springer Berlin / Heidelberg, 2005, vol. 3784, pp. 981–995.

[14] D. Schmorrow (editor), "Foundations of Augmented Cognition", Lawrence Erlbaum Associates, 2005.

[15] R. Baecker, J. Grudin, W. Buxton, and S. Greenberg, Readings in Human-Computer Interaction. Toward the Year 2000. Morgan Kaufmann, San Francisco, 1995.

[16] C. Bartneck and M. Okada, “Robotic user interfaces,” in Human and Computer Conference (HC’01), Aizu, Japan, 2001, pp. 130–140.

[17] M. Auvray and E. Myin, “Perception with compensatory devices -- from sensory substitution to sensorimotor extension,” Cognitive Science, vol. 33, pp. 1036–1058, 2009.

[18] P. Korondi and H. Hashimoto, “Intelligent space, as an integrated intelligent system (keynote paper),” in International Conference on Electrical Drives and Power Electronics, High Tatras, Slovakia, 2003, pp. 24–31.

[19] P. Korondi, B. Solvang, and P. Baranyi, “Cognitive robotics and telemanipulation,” in 15th International Conference on Electrical Drives and Power Electronics, Dubrovnik, Croatia, 2009, pp. 1–8.

[20] P. Bach-y-Rita, “Tactile sensory substitution studies,” Annals of the New York Academy of Sciences, vol. 1013, pp. 83–91, 2004.

[21] P. Bach-y-Rita, K. Kaczmarek, M. Tyler, and J. Garcia-Lara, “Form perception with a 49-point electrotactile stimulus array on the tongue,” Journal of Rehabilitation Research and Development, vol. 35, pp. 427–430, 1998.

[22] P. Arno, A. Vanlierde, E. Streel, M.-C. Wanet-Defalque, S. Sanabria-Bohorquez, and C. Veraart, “Auditory substitution of vision: pattern recognition by the blind,” Applied Cognitive Psychology, vol. 15, no. 5, pp. 509–519, 2001.

[23] L. Mignonneau and C. Sommerer, “Designing emotional, metaphoric, natural and intuitive interfaces for interactive art, edutainment and mobile communications,” Computers & Graphics, vol. 29, no. 6, pp. 837–851, 2005.

[24] M. Niitsuma and H. Hashimoto, “Extraction of space-human activity association for design of intelligent environment,” in IEEE International Conference on Robotics and Automation, 2007, pp. 1814–1819.

[25] N. Campbell, “Conversational speech synthesis and the need for some laughter,” IEEE Transactions on Audio, Speech and Language Processing, vol. 14, no. 4, pp. 1171–1178, 2006.

[26] A. Luneski, R. Moore, and P. Bamidis, “Affective computing and collaborative networks: Towards emotion-aware interaction,” in Pervasive Collaborative Networks, L. Camarinha-Matos and W. Picard, Eds. Springer Boston, 2008, vol. 283, pp. 315–322.

[27] L. Boves, L. ten Bosch, and R. Moore, “Acorns - towards computational modeling of communication and recognition skills,” in 6th IEEE International Conference on Cognitive Informatics, 2007, pp. 349–356.

[28] P. Podrzaj and H. Hashimoto, “Intelligent space as a fire detection system,” in Systems, Man and Cybernetics, 2006. SMC ’06. IEEE International Conference on, vol. 3, Oct. 2006, pp. 2240–2244.

[29] O. Lopez-Ortega and V. Lopez-Morales, “Cognitive communication in a multiagent system for distributed process planning,” International Journal of Computer Applications in Technology, vol. 26, no. 1/2, pp. 99–107, 2006.

[30] N. Suzuki and C. Bartneck, “Editorial: special issue on subtle expressivity for characters and robots,” International Journal of Human-Computer Studies, vol. 62, no. 2, pp. 159–160, 2005.

[31] C. Sommerer and L. Mignonneau, “Mobile feelings - wireless communication of heartbeat and breath for mobile art,” in 14th International Conference on Artificial Reality and Teleexistence, Seoul, South Korea (ICAT ’04), 2004, pp. 346–349.

[32] Y. Wilks, R. Catizone, S. Worgan, A. Dingli, R. Moore, and W. Cheng, “A prototype for a conversational companion for reminiscing about images,” Computer Speech & Language, in press, 2010.

[33] K. Morioka, J.-H. Lee, and H. Hashimoto, “Human following mobile robot in a distributed intelligent sensor network,” IEEE Transactions on Industrial Electronics, vol. 51, no. 1, pp. 229–237, 2004.

[34] B. Preissl and J. Muller, Governance of Communication Networks: Connecting Societies and Markets with IT. Physica-Verlag HD, 1979.

[35] G. Sallai, “Converging information, communication and media technologies,” in Assessing Societal Implications of Converging Technological Development, G. Banse, Ed. Edition Sigma, Berlin, 2007, pp. 25–43.

[36] M. M. Recker, A. Ram, T. Shikano, G. Li, and J. Stasko, “Cognitive media types for multimedia information access,” Journal of Educational Multimedia and Hypermedia, vol. 4, no. 2–3, pp. 183–210, 1995.

[37] R. Kozma, “Learning with media,” Review of Educational Research, vol. 61, no. 2, pp. 179–212, 1991.

[38] J. Roschelle, “Designing for cognitive communication: epistemic fidelity or mediating collaborative inquiry?” in Computers, communication and mental models. Taylor & Francis, 1996, pp. 15–27.

[39] D. Hewes, The Cognitive Bases of Interpersonal Communication. Routledge, 1995.

[40] P. Baranyi, B. Solvang, H. Hashimoto, and P. Korondi, “3d internet for cognitive info–communication,” in 10th International Symposium of Hungarian Researchers on Computational Intelligence and Informatics (CINTI ’09, Budapest), 2009, pp. 229–243.

[41] H. Jenkins, Convergence Culture: Where Old and New Media Collide. NYU Press, 2008.

[42] P. Thompson, G. Cybenko, and A. Giani, “Cognitive hacking,” in Economics of Information Security, ser. Advances in Information Security, S. Jajodia, L. Camp, and S. Lewis, Eds. Springer US, 2004, vol. 12, pp. 255–287.

[43] J. Cheesebro and D. Bertelsen, Analyzing Media: Communication Technologies as Symbolic and Cognitive Systems. The Guilford Press, 1998.

[44] S. Cho, H. Jin, J. Lee, and B. Yao, “Teleoperation of a mobile robot using a force-reflection joystick with sensing mechanism of rotating magnetic field,” Mechatronics, IEEE/ASME Transactions on, vol. 15, no. 1, pp. 17–26, Feb. 2010.

[45] N. Gurari, K. Smith, M. Madhav, and A. Okamura, “Environment discrimination with vibration feedback to the foot, arm, and fingertip,” in Rehabilitation Robotics, 2009. ICORR 2009. IEEE International Conference on, 2009, pp. 343–348.

[46] H. Xin, C. Burns, and J. Zelek, “Non-situated vibrotactile force feedback and laparoscopy performance,” in Haptic Audio Visual Environments and their Applications, 2006. HAVE 2006. IEEE International Workshop on, 2006, pp. 27–32.

[47] R. Schoonmaker and C. Cao, “Vibrotactile force feedback system for minimally invasive surgical procedures,” in Systems, Man and Cybernetics, 2006. SMC ’06. IEEE International Conference on, vol. 3, 2006, pp. 2464–2469.

[48] A. Okamura, J. Dennerlein, and R. Howe, “Vibration feedback models for virtual environments,” in Robotics and Automation, 1998. Proceedings. 1998 IEEE International Conference on, vol. 1, May 1998, pp. 674–679.

[49] K. Minamizawa, S. Fukamachi, H. Kajimoto, N. Kawakami, and S. Tachi, “Wearable haptic display to present virtual mass sensation,” in SIGGRAPH ’07: ACM SIGGRAPH 2007 sketches. New York, NY, USA: ACM, 2007, p. 43.

[50] S.-H. Choi, H.-D. Chang, and K.-S. Kim, “Development of forcefeedback device for pc-game using vibration,” in ACE ’04: Proceedings of the 2004 ACM SIGCHI International Conference on Advances in computer entertainment technology. ACM, 2004, pp. 325–330.

[51] A. Csapo and P. Baranyi, “An interaction-based model for auditory substitution of tactile percepts,” in IEEE International Conference on Intelligent Engineering Systems, INES, 2010, in press.

[52] G. Robles-De-La-Torre, “The importance of the sense of touch in virtual and real environments,” Multimedia, IEEE, vol. 13, no. 3, pp. 24–30, 2006.

Wikimedia Foundation. 2010.