Computational photography (artistic)


See Computational photography for a newer article on the broader topic.

Computational photography refers to computer image processing in which the image acquisition is shaped by the intent to combine multiple pictures of the same subject matter into an aggregate image or image sequence.

Computational photography is also known in the literature as "Intelligent Image Processing".


Relation to digital imaging

Digital photography involves a quantized (finite) word length, i.e. pixel quantities that are usually integers. Computational photography, by contrast, aims for pixel quantities that are floating-point numbers throughout acquisition, processing, storage, and display. This is achieved through file formats that represent floating-point values quantimetrically, i.e. on a tone scale that is known to within a constant scale factor. Examples of quantimetric scales include, but are not limited to, the following:

- Linear in the quantity of light received, up to a single unknown multiplicative constant for the entire image;

- Logarithmic in the quantity of light received, up to a single unknown additive constant for the entire image.
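The two scales above can be illustrated with a short sketch that maps 8-bit imagespace pixel values into a linear and a logarithmic lightspace. It assumes a simple power-law camera response with a hypothetical exponent `GAMMA = 2.2`; real cameras have responses that must be estimated, and the function names `to_linear` and `to_log` are illustrative only. The sketch also shows why the two unknown constants correspond: scaling every linear value by one factor shifts every logarithmic value by one additive amount.

```python
import math

# Assumption for illustration only: 8-bit pixel values follow a simple
# power-law response with exponent GAMMA. Real camera responses differ.
GAMMA = 2.2


def to_linear(pixels, gamma=GAMMA):
    """Map 8-bit imagespace values to a lightspace that is linear in the
    quantity of light, known only up to one multiplicative constant."""
    return [(p / 255.0) ** gamma for p in pixels]


def to_log(pixels, gamma=GAMMA):
    """Map the same values to a logarithmic lightspace, known only up to one
    additive constant. A pixel value of 0 maps to -inf (no finite logarithm)."""
    return [math.log(q) if q > 0 else float("-inf") for q in to_linear(pixels, gamma)]


pixels = [32, 128, 255]
linear = to_linear(pixels)
log_scale = to_log(pixels)

# Changing the unknown constant (e.g. doubling the exposure) multiplies every
# linear value by 2, which adds log(2) to every logarithmic value.
doubled = [2.0 * q for q in linear]
shifts = [math.log(d) - l for d, l in zip(doubled, log_scale)]
```

Because only ratios (linear) or differences (logarithmic) are meaningful, either representation preserves the same information about the scene.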

A 1995 paper entitled "Being Undigital" attempted to define the essence of computational photography as an "undigital" capture of images. Ideally, computational photography captures, as closely as possible, an array of real numbers rather than integers, while retaining the ability to store, wirelessly transmit, process, and display the image on a continuous tone scale.

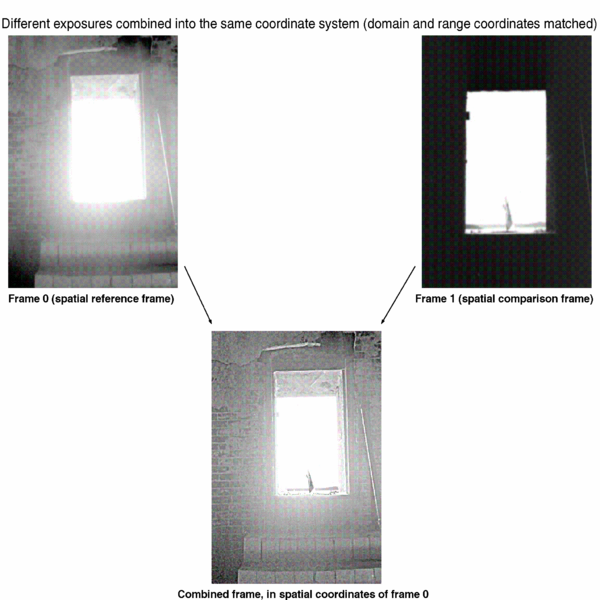

The first simultaneous estimation of projection (homography) and gain change, together with a composite of multiple differently exposed pictures to generate a high dynamic range image (1991).

(Published in a 1993 paper, which was the precursor to the 1995 paper entitled "Being Undigital".)
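The compositing step can be sketched as a certainty-weighted average in lightspace. This is a generic merge, not the 1991 method itself: it assumes the pictures are already registered (no homography is estimated), that their relative exposures (gains) are known, and that the camera follows a hypothetical power-law response. The hat-shaped `certainty` weight and all function names are illustrative assumptions.

```python
# Assumed power-law camera response (illustrative only).
GAMMA = 2.2


def linearize(p, gamma=GAMMA):
    """Convert an 8-bit pixel value to a linear quantity of light (up to scale)."""
    return (p / 255.0) ** gamma


def certainty(p):
    """Hypothetical hat-shaped weight: trust mid-tones most and give almost no
    weight to values near black (noisy) or white (clipped)."""
    return max(1e-6, 1.0 - abs(p - 127.5) / 127.5)


def composite(pictures, gains):
    """Combine registered, differently exposed pictures into one lightspace image.

    pictures: equally long lists of 8-bit pixel values, one list per exposure.
    gains: relative exposure of each picture (e.g. [1, 4, 16]).
    Each sample is linearized, divided by its gain to undo the exposure
    difference, and averaged using its certainty as the weight.
    """
    result = []
    for samples in zip(*pictures):
        num = den = 0.0
        for p, k in zip(samples, gains):
            w = certainty(p)
            num += w * linearize(p) / k
            den += w
        result.append(num / den)
    return result
```

Dark regions are then estimated mainly from the longer exposures and bright regions from the shorter ones, which is what extends the dynamic range beyond that of any single picture.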

File formats for computational photography

There are two main file formats for computational photography: one is based on an extension of the PPM (Portable Pixmap), and the other is based on an extension of JPEG.

PDM and PLM

Most computational photography uses double-precision arrays, e.g. 64 bits per pixel (64BPP) per color channel. Portable Double Maps (PDM) use the headers P7 (greyscale) and P8 (color) in place of P5 (Portable Grey Map) and P6 (Portable Pix Map), respectively. Portable Lightspace Maps (PLM) also use double-precision data, but quantimetrically, usually on either a linear or a logarithmic scale. PLM headers are P9 (greyscale) or PA (color).
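The header table above can be captured in a small lookup, together with the size of the binary payload it implies. The sketch assumes the data is stored as raw 8-byte doubles, one per channel per pixel, with no padding or compression; the names `FORMATS` and `double_payload_bytes` are hypothetical. One caution: in the standard Netpbm family, "P7" already denotes PAM (Portable Arbitrary Map), so a reader may need other context, such as the file extension, to tell a PDM file from a PAM file.

```python
# Magic numbers as described in the text: (name, space, channels).
# Caution: standard Netpbm also uses "P7", for PAM (Portable Arbitrary Map).
FORMATS = {
    "P5": ("Portable Grey Map", "imagespace", 1),
    "P6": ("Portable Pix Map", "imagespace", 3),
    "P7": ("Portable Double Map", "imagespace", 1),
    "P8": ("Portable Double Map", "imagespace", 3),
    "P9": ("Portable Lightspace Map", "lightspace", 1),
    "PA": ("Portable Lightspace Map", "lightspace", 3),
}

BYTES_PER_SAMPLE = 8  # one IEEE 754 double per channel per pixel


def double_payload_bytes(magic, width, height):
    """Size of the binary data in a PDM/PLM file, assuming raw doubles with
    no padding or compression (an assumption of this sketch)."""
    name, space, channels = FORMATS[magic]
    if magic in ("P5", "P6"):
        raise ValueError(f"{magic} ({name}) does not store double-precision data")
    return width * height * channels * BYTES_PER_SAMPLE


# A 640 x 480 color PLM image: 640 * 480 * 3 * 8 = 7,372,800 bytes of data.
size = double_payload_bytes("PA", 640, 480)
```

Under this assumption, a double-precision color image is eight times larger than its 8-bit P6 counterpart, which is one motivation for a compressed format.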

Both PDM and PLM are double precision; the difference is whether they are in imagespace or lightspace. PDM is in imagespace, whereas PLM is in lightspace (i.e. quantimetric: linear, logarithmic, or some other scale specified in the header).

The most commonly represented quantimetric scale is the linear scale.

A typical color image, 640 pixels wide and 480 pixels high, is represented in PLM format as in the following example:

PA
# cementinit pork.ppm 1.000000 1.000000 1.000000 -o pork.plm
640 480 255 1 2.200000
... (binary data) ...

Many utilities, such as those available in the Comparametric Toolkit (available as free source from SourceForge), preserve commands as comments in the header; in the example above, the comment records a cementinit command with input pork.ppm and output pork.plm. As in PPM, a "#" sign at the beginning of a line in the ASCII text header denotes a comment. The header is ASCII text (human-readable and easy to work with), and the data that follows it is binary. PLM images use the .plm file extension, for example "test.plm".
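Reading such a header amounts to collecting whitespace-separated ASCII tokens while setting aside comment lines, then switching to binary reads. The sketch below is a hypothetical `read_header_tokens` helper, not part of the Comparametric Toolkit. It assumes the header is line-based and that binary data begins on the line after the last header token; the line layout of the sample header is itself an assumption, and the meaning of the trailing fields (255, 1, 2.200000) is not documented here, so the caller simply states how many tokens to read.

```python
import io


def read_header_tokens(stream, count):
    """Read `count` whitespace-separated ASCII tokens from a binary stream,
    collecting lines that begin with '#' as comments. Assumes binary data
    starts on the line after the last header token, where the stream is left."""
    tokens, comments = [], []
    while len(tokens) < count:
        line = stream.readline()
        if not line:
            raise ValueError("header ended before all tokens were read")
        text = line.decode("ascii").strip()
        if text.startswith("#"):
            comments.append(text[1:].strip())  # e.g. the command that made the file
            continue
        tokens.extend(text.split())
    return tokens, comments


# Hypothetical file contents modelled on the example header above.
example = io.BytesIO(
    b"PA\n# cementinit pork.ppm 1.000000 1.000000 1.000000 -o pork.plm\n"
    b"640 480\n255\n1 2.200000\n" + b"\x00" * 16
)
tokens, comments = read_header_tokens(example, 6)
magic, width, height = tokens[0], int(tokens[1]), int(tokens[2])
```

Keeping the comments, rather than discarding them, is what lets a toolchain recover how an image was produced.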

The JLM file format is a compressed version of PLM that generalizes the JPEG algorithm to floating-point arrays.

See: Steve Mann, Corey Manders, Billal Belmellat, Mohit Kansal and Daniel Chen, "Steps towards 'undigital' intelligent image processing: Real-valued image coding of photoquantimetric pictures into the JLM file format for the compression of Portable Lightspace Maps". Proceedings of the 2004 IEEE International Symposium on Intelligent Signal Processing and Communications Systems (ISPACS 2004), Seoul, Korea, November 18–19, 2004.

Computational photography as an artistic medium

Computational photography, as an art form, has been practiced by capturing differently exposed pictures of the same subject matter and combining them. This was the inspiration for the development of the wearable computer in the 1970s and early 1980s.

Historical inspiration

Computational photography was inspired by the work of Charles Wyckoff, and thus computational photography datasets (e.g. differently exposed pictures of the same subject matter that are taken in order to make a single composite image) are sometimes referred to as Wyckoff Sets, in his honor.

Early work in this area (joint estimation of image projection and exposure value) was undertaken by Mann and Candoccia.
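The exposure half of that joint estimation can be sketched as a least-squares fit of a single gain k between two pictures of a Wyckoff set, so that bright ≈ k · dark. This simplified sketch does not estimate the projection: it assumes the two pictures are already registered and linearized to the range [0, 1], and the thresholds `low` and `high` that exclude noisy and clipped pixels are hypothetical choices.

```python
def estimate_gain(dark, bright, low=0.01, high=0.95):
    """Least-squares estimate of k in bright ~= k * dark.

    dark, bright: registered, linearized pixel values in [0, 1].
    Only pairs where both values lie in [low, high] are used, so that
    noisy shadows and clipped highlights do not bias the estimate.
    Minimizing sum((b - k * a) ** 2) gives k = sum(a * b) / sum(a * a).
    """
    num = den = 0.0
    for a, b in zip(dark, bright):
        if low <= a <= high and low <= b <= high:
            num += a * b
            den += a * a
    if den == 0:
        raise ValueError("no usable pixel pairs")
    return num / den


dark = [0.02, 0.05, 0.1, 0.2, 0.3]
bright = [min(1.0, 4 * a) for a in dark]  # the last value clips at 1.0
k = estimate_gain(dark, bright)  # the clipped pair is ignored
```

A full joint method would alternate or combine this with estimating the homography, since misregistration and exposure change both affect how corresponding pixels compare.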

Charles Wyckoff devoted much of his life to creating special kinds of 3-layer photographic film that captured different exposures of the same subject matter. A picture of a nuclear explosion, taken on Wyckoff's film, appeared on the cover of Life magazine and showed the dynamic range from the dark outer areas to the inner core.


Wikimedia Foundation. 2010.