Conditional probability

The actual probability of an event A may in many circumstances differ from its original probability because new information is available, in particular the information that another event B has occurred. Intuition suggests that the outcomes that are still possible are then restricted to B. Hence B plays the role of the new sample space, and the event A now occurs only if A∩B occurs. The new probability of A, called the conditional probability of A given B, is therefore calculated as the quotient of the probability of A∩B and the probability of B.[1] This conditional probability is commonly written P(A | B). (The two events are separated by a vertical bar; this should not be misread as "the probability of some event A | B", i.e. the event A OR B.)


Definition

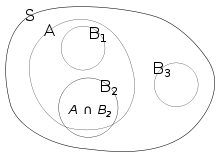

Illustration of conditional probability. S is the sample space, and A and B1, B2, B3 are events. Assuming probability is proportional to area, the unconditional probability is P(A) ≈ 0.33, whereas the conditional probabilities are P(A | B1) = 1, P(A | B2) ≈ 0.85 and P(A | B3) = 0.

Conditioning on an event

Given two events A and B in the same probability space with P(B) > 0, the conditional probability of A given B is defined as the quotient of the joint probability of A and B, and the unconditional probability of B:

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)}.$$

This may be interpreted using a Venn diagram. For example, in the diagram, if the probability distribution is uniform on S, P(A | B2) is the ratio of the probabilities represented by the areas A∩B2 and B2.

Note: Although the same symbol P is used for both the (original) probability and the derived conditional probability, they are different functions.
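As a minimal sketch, the definition can be evaluated exactly on a finite sample space whose outcomes are assumed equally likely. The helper names `prob` and `conditional_probability`, and the example events (a single fair die, A = "even", B = "at most 3"), are illustrative choices, not part of the original text.

```python
from fractions import Fraction

# Sample space for one fair six-sided die; all outcomes assumed equally likely.
OMEGA = range(1, 7)

def prob(event):
    """Probability of an event (a predicate on outcomes) under the uniform distribution."""
    favourable = sum(1 for w in OMEGA if event(w))
    return Fraction(favourable, len(OMEGA))

def conditional_probability(a, b):
    """P(A | B) = P(A ∩ B) / P(B); raises if P(B) = 0, where the definition does not apply."""
    p_b = prob(b)
    if p_b == 0:
        raise ValueError("P(B) = 0: the elementary definition does not apply")
    return prob(lambda w: a(w) and b(w)) / p_b

def is_even(w):
    return w % 2 == 0

def at_most_three(w):
    return w <= 3

print(prob(is_even))                                    # 1/2
print(conditional_probability(is_even, at_most_three))  # 1/3
```

Using `Fraction` keeps the quotient exact, so a result such as 1/3 is not blurred by floating-point rounding.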

Definition with σ-algebra

If P(B) = 0, the elementary definition of P(A | B) does not apply, since it would require dividing by zero. However, it is possible to define a conditional probability with respect to a σ-algebra of such events (such as those arising from a continuous random variable).

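For example, if X and Y are non-degenerate, jointly continuous random variables with joint density $f_{X,Y}(x, y)$, then for a set B of positive measure,

$$P(X \in A \mid Y \in B) = \frac{\int_{y\in B}\int_{x\in A} f_{X,Y}(x,y)\,dx\,dy}{\int_{y\in B}\int_{x\in\Omega} f_{X,Y}(x,y)\,dx\,dy}.$$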

The case where B has measure zero can be dealt with directly only when $B = \{y_0\}$ is a single point, in which case

$$P(X \in A \mid Y = y_0) = \frac{\int_{x\in A} f_{X,Y}(x,y_0)\,dx}{\int_{x\in\Omega} f_{X,Y}(x,y_0)\,dx}.$$

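If A has measure zero, then the conditional probability is zero. An indication of why the more general case of zero measure cannot be dealt with in a similar way can be seen by noting that the limit, as all $\delta y_i$ approach zero, of

$$P\Big(X \in A \;\Big|\; Y \in \bigcup_i [y_i, y_i + \delta y_i]\Big) \approx \frac{\sum_i \int_{x\in A} f_{X,Y}(x,y_i)\,dx\,\delta y_i}{\sum_i \int_{x\in\Omega} f_{X,Y}(x,y_i)\,dx\,\delta y_i}$$

depends on how the $\delta y_i$ relate to one another as they approach zero. See conditional expectation for more information.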
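The two density formulas for a positive-measure B and for a single point $y_0$ can be checked numerically. The sketch below assumes a hypothetical joint density f(x, y) = x + y on the unit square (it integrates to 1), takes A = {X ≤ c}, and uses a plain midpoint rule; the function names and the grid size `n` are illustrative assumptions.

```python
def f(x, y):
    # Hypothetical joint density on [0, 1] x [0, 1]; it integrates to 1.
    return x + y

def midpoint_1d(g, lo, hi, n=200):
    """Midpoint-rule approximation of the integral of g over [lo, hi]."""
    h = (hi - lo) / n
    return sum(g(lo + (i + 0.5) * h) for i in range(n)) * h

def p_x_below_given_y_in(c, y_lo, y_hi):
    """P(X <= c | Y in [y_lo, y_hi]): ratio of double integrals (B of positive measure)."""
    num = midpoint_1d(lambda y: midpoint_1d(lambda x: f(x, y), 0.0, c), y_lo, y_hi)
    den = midpoint_1d(lambda y: midpoint_1d(lambda x: f(x, y), 0.0, 1.0), y_lo, y_hi)
    return num / den

def p_x_below_given_y_equals(c, y0):
    """P(X <= c | Y = y0): ratio of single integrals evaluated at the point y0."""
    num = midpoint_1d(lambda x: f(x, y0), 0.0, c)
    den = midpoint_1d(lambda x: f(x, y0), 0.0, 1.0)
    return num / den

print(p_x_below_given_y_in(0.5, 0.4, 0.6))  # approximately 0.375
print(p_x_below_given_y_equals(0.5, 0.5))   # approximately 0.375
```

Because this particular density is linear in both variables, the midpoint rule is exact up to rounding, and both conditional probabilities equal 3/8 analytically.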

Conditioning on a random variable

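Conditioning on an event may be generalized to conditioning on a random variable. Let X be a random variable taking values in {x_n}, each with P(X = x_n) > 0, and let A be an event. The probability of A given X is defined as

$$P(A \mid X) = \begin{cases} P(A \mid X = x_0) & \text{if } X = x_0, \\ P(A \mid X = x_1) & \text{if } X = x_1, \\ \;\;\vdots & \;\;\vdots \end{cases}$$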

Note that P(A | X) and X are now both random variables. By the law of total probability, the expected value of P(A | X) equals the unconditional probability of A:

$$E[P(A \mid X)] = \sum_n P(A \mid X = x_n)\,P(X = x_n) = P(A).$$
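For two fair dice (the setting of the Example section), this identity can be checked exactly. In this sketch, X is taken to be the value of die 2 and A the event that the two dice sum to at most 5; both choices, and the helper names, are illustrative. P(A | X) is computed as one value per possible value of X, and averaging those values over the distribution of X recovers P(A).

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes (die1, die2) of two fair dice.
OUTCOMES = list(product(range(1, 7), repeat=2))

def event_a(outcome):
    # A: the two dice sum to at most 5.
    return sum(outcome) <= 5

def p_a_given_x(x):
    """P(A | X = x), where X is the value shown by die 2."""
    matching = [o for o in OUTCOMES if o[1] == x]
    return Fraction(sum(1 for o in matching if event_a(o)), len(matching))

# P(A | X) as a random variable: one value for each possible value of X.
conditional_values = {x: p_a_given_x(x) for x in range(1, 7)}

# E[P(A | X)] = sum over x of P(A | X = x) * P(X = x), with P(X = x) = 1/6.
expected = sum(p * Fraction(1, 6) for p in conditional_values.values())
unconditional = Fraction(sum(1 for o in OUTCOMES if event_a(o)), len(OUTCOMES))

print(expected, unconditional)  # 5/18 5/18
```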

Example

Consider the rolling of two fair six-sided dice.

- Let A be the value rolled on die 1

- Let B be the value rolled on die 2

- Let An be the event that A = n

- Let Σm be the event that A + B ≤ m

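Initially, suppose we roll the two dice many times. In what proportion of these rolls would A = 2? Table 1 shows the sample space: all 36 possible combinations. A = 2 in 6 of these, so the answer is 6/36 = 1/6. In more compact notation, P(A2) = 1/6.

Table 1: value of A + B for each combination

| A + B | B = 1 | B = 2 | B = 3 | B = 4 | B = 5 | B = 6 |
|---|---|---|---|---|---|---|
| A = 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| A = 2 | 3 | 4 | 5 | 6 | 7 | 8 |
| A = 3 | 4 | 5 | 6 | 7 | 8 | 9 |
| A = 4 | 5 | 6 | 7 | 8 | 9 | 10 |
| A = 5 | 6 | 7 | 8 | 9 | 10 | 11 |
| A = 6 | 7 | 8 | 9 | 10 | 11 | 12 |

Suppose, however, that we roll the dice many times but ignore cases in which A + B > 5. In what proportion of the remaining rolls would A = 2? Table 2 shows that A + B ≤ 5 in 10 of the combinations, and A = 2 in 3 of these. The answer is therefore 3/10 = 0.3. We say that the probability that A = 2 given that A + B ≤ 5 is 0.3. This is a conditional probability, because it has a condition that limits the sample space. In more compact notation, P(A2 | Σ5) = 0.3.

Table 2: value of A + B for each combination; the 10 combinations with A + B ≤ 5 are in bold

| A + B | B = 1 | B = 2 | B = 3 | B = 4 | B = 5 | B = 6 |
|---|---|---|---|---|---|---|
| A = 1 | **2** | **3** | **4** | **5** | 6 | 7 |
| A = 2 | **3** | **4** | **5** | 6 | 7 | 8 |
| A = 3 | **4** | **5** | 6 | 7 | 8 | 9 |
| A = 4 | **5** | 6 | 7 | 8 | 9 | 10 |
| A = 5 | 6 | 7 | 8 | 9 | 10 | 11 |
| A = 6 | 7 | 8 | 9 | 10 | 11 | 12 |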
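The counting argument can be reproduced by enumerating the 36 outcomes. In this sketch (function names are illustrative), conditioning is implemented literally as restricting the sample space to the outcomes in the conditioning event and counting again.

```python
from fractions import Fraction
from itertools import product

# Sample space: all 36 equally likely (die1, die2) pairs.
OUTCOMES = list(product(range(1, 7), repeat=2))

def probability(event, given=lambda o: True):
    """Proportion of outcomes satisfying `event` among those satisfying `given`."""
    restricted = [o for o in OUTCOMES if given(o)]
    return Fraction(sum(1 for o in restricted if event(o)), len(restricted))

def a2(o):
    # A2: die 1 shows 2.
    return o[0] == 2

def sigma5(o):
    # Σ5: the two dice sum to at most 5.
    return o[0] + o[1] <= 5

print(probability(a2))          # 1/6
print(probability(a2, sigma5))  # 3/10
```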

Statistical independence

If two events A and B are statistically independent, the occurrence of A does not affect the probability of B, and vice versa. That is, whenever the conditional probabilities are defined,

$$P(A \mid B) = P(A)$$

and

$$P(B \mid A) = P(B).$$

Using the definition of conditional probability, it follows from either formula that

$$P(A \cap B) = P(A)\,P(B).$$

This is the definition of statistical independence. This form is preferred because it is symmetric in A and B and involves no undefined values when P(A) or P(B) is 0.
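Checking the product form needs no division, which is why it remains usable when a probability is 0. The sketch below (helper names are illustrative) applies it to the two-dice setting: the values of the two dice are independent, die 1 showing 2 and the sum being at most 5 are not, and an impossible event is independent of everything.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes (die1, die2) of two fair dice.
OUTCOMES = list(product(range(1, 7), repeat=2))

def p(event):
    """Unconditional probability of `event` by counting outcomes."""
    return Fraction(sum(1 for o in OUTCOMES if event(o)), len(OUTCOMES))

def independent(e1, e2):
    """Test the product form P(E1 ∩ E2) == P(E1) * P(E2); no division is needed."""
    return p(lambda o: e1(o) and e2(o)) == p(e1) * p(e2)

def a2(o):
    return o[0] == 2        # die 1 shows 2

def b3(o):
    return o[1] == 3        # die 2 shows 3

def sigma5(o):
    return sum(o) <= 5      # the dice sum to at most 5

def impossible(o):
    return False            # an event of probability 0

print(independent(a2, b3))          # True:  1/36 == 1/6 * 1/6
print(independent(a2, sigma5))      # False: 3/36 != 1/6 * 10/36
print(independent(a2, impossible))  # True:  0 == 1/6 * 0
```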

Common fallacies

Assuming conditional probability is of similar size to its inverse

In general, it cannot be assumed that P(A | B) ≈ P(B | A). This can be an insidious error, even for those who are highly conversant with statistics.[2] The relationship between P(A | B) and P(B | A) is given by Bayes' theorem:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}.$$

That is, P(A | B) ≈ P(B | A) only if P(A)/P(B) ≈ 1, or equivalently, P(A) ≈ P(B).
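In the two-dice example, a conditional probability and its inverse differ noticeably because P(A2) = 1/6 and P(Σ5) = 10/36 differ. The sketch below (illustrative helper names) computes both directly and confirms that Bayes' theorem recovers one from the other.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes (die1, die2) of two fair dice.
OUTCOMES = list(product(range(1, 7), repeat=2))

def p(event, given=None):
    """P(event), or P(event | given) computed by restricting the sample space."""
    space = [o for o in OUTCOMES if given is None or given(o)]
    return Fraction(sum(1 for o in space if event(o)), len(space))

def a2(o):
    return o[0] == 2          # die 1 shows 2

def sigma5(o):
    return sum(o) <= 5        # the dice sum to at most 5

forward = p(a2, sigma5)                     # P(A2 | Σ5)
inverse = p(sigma5, a2)                     # P(Σ5 | A2)
via_bayes = inverse * p(a2) / p(sigma5)     # Bayes' theorem

print(forward, inverse)  # 3/10 1/2
print(via_bayes)         # 3/10
```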

Assuming marginal and conditional probabilities are of similar size

In general, it cannot be assumed that P(A) ≈ P(A | B). These probabilities are linked through the law of total probability: for a partition of the sample space into events B1, B2, … with positive probabilities,

$$P(A) = \sum_n P(A \cap B_n) = \sum_n P(A \mid B_n)\,P(B_n).$$
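The formula can be checked on the two-dice example with the partition {Σ5, not Σ5}. The marginal P(A2) = 1/6 differs from both conditional probabilities, 3/10 and 3/26, yet it is their weighted average. Helper names in this sketch are illustrative.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes (die1, die2) of two fair dice.
OUTCOMES = list(product(range(1, 7), repeat=2))

def p(event, given=None):
    """P(event), or P(event | given) computed by restricting the sample space."""
    space = [o for o in OUTCOMES if given is None or given(o)]
    return Fraction(sum(1 for o in space if event(o)), len(space))

def a2(o):
    return o[0] == 2          # die 1 shows 2

def sigma5(o):
    return sum(o) <= 5        # the dice sum to at most 5

def not_sigma5(o):
    return not sigma5(o)      # complement of Σ5

partition = [sigma5, not_sigma5]
total = sum(p(a2, b) * p(b) for b in partition)  # Σ_n P(A | B_n) P(B_n)

print(p(a2, sigma5), p(a2, not_sigma5))  # 3/10 3/26
print(total, p(a2))                      # 1/6 1/6
```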

This fallacy may arise through selection bias.[3] For example, in the context of a medical claim, let SC be the event that a sequela S occurs as a consequence of a circumstance C, and let H be the event that an individual seeks medical help. Suppose that in most cases C does not cause S, so P(SC) is low. Suppose also that medical attention is sought only if S has occurred. From experience with patients, a doctor may therefore erroneously conclude that P(SC) is high, whereas the probability the doctor actually observes is P(SC | H).

Formal derivation

This section is based on the derivation given in Grinstead and Snell's Introduction to Probability.[4]

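Let Ω be a sample space with elementary events {ω}. Suppose we are told that the event E ⊆ Ω, with P(E) > 0, has occurred. A new probability distribution (denoted by the conditional notation) is to be assigned on {ω} to reflect this. For elementary events in E, it is reasonable to assume that the relative magnitudes of the probabilities will be preserved. For some constant scale factor α, the new distribution will therefore satisfy:

$$\text{(1)}\quad \omega \in E:\; P(\omega \mid E) = \alpha\,P(\omega)$$

$$\text{(2)}\quad \omega \notin E:\; P(\omega \mid E) = 0$$

$$\text{(3)}\quad \sum_{\omega \in \Omega} P(\omega \mid E) = 1$$

Substituting (1) and (2) into (3) to select α:

$$1 = \sum_{\omega \in \Omega} P(\omega \mid E) = \sum_{\omega \in E} \alpha\,P(\omega) + \sum_{\omega \notin E} 0 = \alpha \sum_{\omega \in E} P(\omega) = \alpha\,P(E) \quad\Longrightarrow\quad \alpha = \frac{1}{P(E)}.$$

So the new probability distribution is

$$\omega \in E:\; P(\omega \mid E) = \frac{P(\omega)}{P(E)}, \qquad \omega \notin E:\; P(\omega \mid E) = 0.$$

Now for a general event F,

$$P(F \mid E) = \sum_{\omega \in F \cap E} P(\omega \mid E) + \sum_{\omega \in F \cap E^{c}} P(\omega \mid E) = \sum_{\omega \in F \cap E} \frac{P(\omega)}{P(E)} = \frac{P(F \cap E)}{P(E)}.$$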
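The derivation translates directly into a rescaling step on a finite sample space. In this sketch, the names are illustrative and the two-dice space is reused only as a concrete Ω. The rules for ω ∈ E and ω ∉ E become "multiply by α = 1/P(E) inside E, set to 0 outside E"; the checks confirm that the new weights sum to 1 and that P(F | E) agrees with P(F ∩ E)/P(E).

```python
from fractions import Fraction
from itertools import product

# Prior distribution on elementary events ω: two fair dice, assumed uniform.
prior = {w: Fraction(1, 36) for w in product(range(1, 7), repeat=2)}

def prob(dist, event):
    """Sum the weights of the elementary events in `event`."""
    return sum(pw for w, pw in dist.items() if event(w))

def condition_on(dist, event):
    """Return P(. | E): scale by alpha = 1/P(E) inside E, set to 0 outside E."""
    p_e = prob(dist, event)
    if p_e == 0:
        raise ValueError("cannot condition on an event of probability 0")
    alpha = 1 / p_e
    return {w: (alpha * pw if event(w) else Fraction(0)) for w, pw in dist.items()}

def e(w):
    return sum(w) <= 5      # E: the dice sum to at most 5

def f(w):
    return w[0] == 2        # F: die 1 shows 2

posterior = condition_on(prior, e)
print(sum(posterior.values()))                                # 1
print(prob(posterior, f))                                     # 3/10
print(prob(prior, lambda w: f(w) and e(w)) / prob(prior, e))  # 3/10
```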

See also

- Borel–Kolmogorov paradox

- Chain rule (probability)

- Posterior probability

- Conditioning (probability)

- Joint probability distribution

- Conditional probability distribution

- Class membership probabilities

References

- ^ George Casella and Roger L. Berger (1990). Statistical Inference. Duxbury Press. ISBN 0534119581 (p. 18 et seq.)

- ^ J. A. Paulos (1988). Innumeracy: Mathematical Illiteracy and its Consequences. Hill and Wang. ISBN 0809074478 (p. 63 et seq.)

- ^ F. Thomas Bruss (2007). "Der Wyatt Earp Effekt". Spektrum der Wissenschaft, March 2007.

- ^ Charles M. Grinstead and J. Laurie Snell. Introduction to Probability, p. 134.

- F. Thomas Bruss (2007). "Der Wyatt-Earp-Effekt oder die betörende Macht kleiner Wahrscheinlichkeiten" (in German). Spektrum der Wissenschaft (German edition of Scientific American), Vol. 2, pp. 110–113.


Wikimedia Foundation. 2010.
