- Constraint learning

-

In constraint satisfaction backtracking algorithms, constraint learning is a technique for improving efficiency. It works by recording new constraints whenever an inconsistency is found. This new constraint may reduce the search space, as future partial evaluations may be found inconsistent without further search. Clause learning is the name of this technique when applied to propositional satisfiability.

Contents

Definition

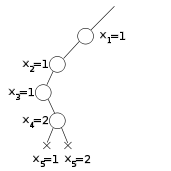

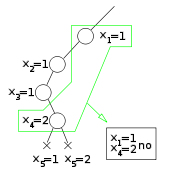

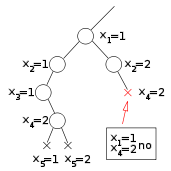

Backtracking algorithms work by choosing an unassigned variable and recursively solve the problems obtained by assigning a value to this variable. Whenever the current partial solution is found inconsistent, the algorithm goes back to the previously assigned variable, as expected by recursion. A constraint learning algorithm differs because it tries to record some information, before backtracking, in form of a new constraint. This can reduce the further search because the subsequent search may encounter another partial solution that is inconsistent with this new constraint. If the algorithm has learned the new constraint, it will backtrack from this solution, while the original backtracking algorithm would do a subsequent search.

If the partial solution

is inconsistent, the problem instance implies the constraint stating that xi = ai cannot be true for all

is inconsistent, the problem instance implies the constraint stating that xi = ai cannot be true for all ![i \in [1,k]](e/42e3f6357368823237648e0d94304f5d.png) at the same time. However, recording this constraint is not useful, as this partial solution will not be encountered again due to the way backtracking proceed.

at the same time. However, recording this constraint is not useful, as this partial solution will not be encountered again due to the way backtracking proceed.On the other hand, if a subset of this evaluation is inconsistent, the corresponding constraint may be useful in the subsequent search, as the same subset of the partial evaluation may occur again in the search. For example, the algorithm may encounter an evaluation extending the subset x2 = a2,x5 = a5,xk − 1 = ak − 1 of the previous partial evaluation. If this subset is inconsistent and the algorithm has stored this fact in form of a constraint, no further search is needed to conclude that the new partial evaluation cannot be extended to form a solution.

Efficiency of constraint learning

The efficiency of constraint learning algorithm is balanced between two factors. On one hand, the more often a recorded constraint is violated, the more often backtracking avoids doing useless search. Small inconsistent subsets of the current partial solution are usually better than large ones, as they correspond to constraints that are easier to violate. On the other hand, finding a small inconsistent subset of the current partial evaluation may require time, and the benefit may not be balanced by the subsequent reduction of the search time.

Size is however not the only feature of learned constraints to take into account. Indeed, a small constraint may be useless in a particular state of the search space because the values that violate it will not be encountered again. A larger constraint whose violating values are more similar to the current partial assignment may be preferred in such cases.

Various constraint learning techniques exist, differing in strictness of recorded constraints and cost of finding them.

Graph-based learning

If the algorithm proves all values of xk + 1 to be inconsistent with

, then this evaluation was consistent, as otherwise the algorithm would not have evaluated xk + 1 at all; as a result, the constraints violated by a value of xk + 1 together with

, then this evaluation was consistent, as otherwise the algorithm would not have evaluated xk + 1 at all; as a result, the constraints violated by a value of xk + 1 together with  all contain xk + 1.

all contain xk + 1.As a result, an inconsistent evaluation is the restriction of the truth evaluation of

to variables that are in a constraint with xk + 1, provided that this constraint contains no unassigned variable.

to variables that are in a constraint with xk + 1, provided that this constraint contains no unassigned variable.Learning constraints representing these partial evaluation is called graph-based learning. It uses the same rationale of graph-based backjumping. These methods are called "graph-based" because they are based on pairs of variables are in the same constraint, which can be found out from the graph associated to the constraint satisfaction problem.

Jumpback learning

Jumpback learning is based on storing as constraints the inconsistent assignments that would be found by conflict-based backjumping. Whenever a partial assignment is found inconsistent, this algorithm selects the violated constraint that is minimal according to an ordering based on the order of instantiation of variables. The evaluation restricted of the variables that are in this constraint is inconsistent and is usually shorter than the complete evaluation. Jumpback learning stores this fact as a new constraint.

The ordering on constraints is based on the order of assignment of variable. In particular, the least of two constraint is the one whose latest non-common variable has been instantiated first. When an inconsistent assignment is reached, jumpback learning selects the violated constraint that is minimal according to this ordering, and restricts the current assignment to its variables. The constraint expressing the inconsistency of this assignment is stored.

Constraint maintenance

Constraint learning algorithms differ not only on the choice of constraint corresponding to a given inconsistent partial evaluation, but also on the choice of which constraint they maintain and which ones they discard.

In general, learning all inconsistencies in form of constraints and keeping them indefinitedly may exhaust the available memory and increase the cost of checking consistency of partial evaluations. These problems can be solved either by storing only some learned constraints or by occasionally discarding constraints.

Bounded learning only stores constraints if the inconsistent partial evaluation they represent is smaller than a given constrant number. Relevance-bounded learning discards constraints (or does not store them at all) that are considered not relevant given the current point of the search space; in particular, it discards or does not store all constraints that represent inconsistent partial evaluations that differ from the current partial evaluation on no more than a given fixed number of variables.

See also

References

- Dechter, Rina (2003). Constraint Processing. Morgan Kaufmann. http://www.ics.uci.edu/~dechter/books/index.html. ISBN 1-55860-890-7

Categories:

Wikimedia Foundation. 2010.